Join tens of thousands of data leaders, engineers, scientists and architects from around the world at Moscone Center in San Francisco, June 10–13. Explore the latest advances in Apache Spark™, Delta Lake, MLflow, LangChain, PyTorch, dbt, Prest...

02-12-2024

Get your first look at DBRX: April 25, 2024 | 8 AM PT. If you’re using off-the-shelf LLMs to build GenAI applications, you’re probably struggling with quality, privacy and governance issues. What you need is a way to cost-effectively build a custom LLM...

2 weeks ago

AI has the power to address the data warehouse’s biggest challenges — performance, governance and usability — thanks to its deeper understanding of your data and how it’s used. This is data intelligence and it’s revolutionizing the way you query, man...

2 weeks ago

Latest from our Blog

MLOps Gym - Beginners Guide to Cluster Configuration for MLOps

When setting up compute, there are many options and knobs to tweak and tune, and it can get quite overwhelming very quickly. To help you with optimally configuring your clusters, we have broken dow...

Why Serverless Databricks SQL is the best for BI workloads: Part IV - Remote Query Result Cache

Authors: Andrey Mirskiy (@AndreyMirskiy) and Marco Scagliola (@MarcoScagliola). Welcome to the fourth part of our blog series on “Why Databricks SQL Serverless is the best fit for BI workloads”. I...

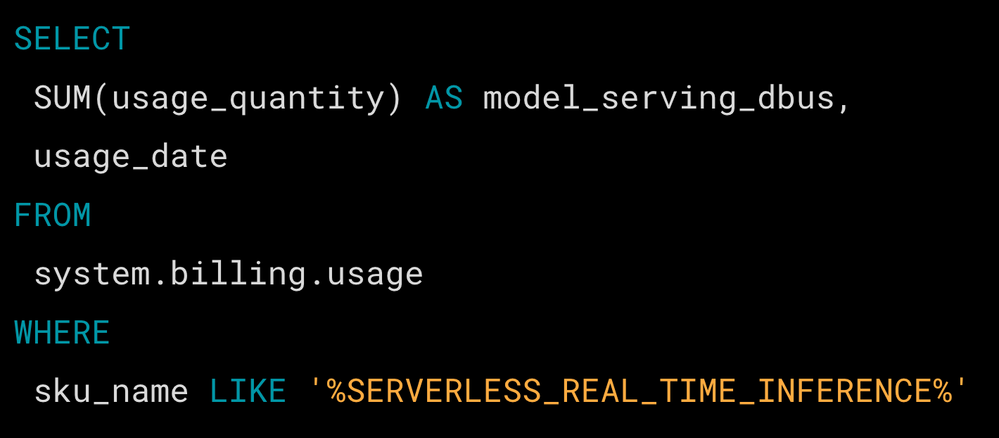

Attributing Costs in Databricks Model Serving

Databricks Model Serving provides a scalable, low-latency hosting service for AI models. It supports models ranging from small custom models to best-in-class large language models (LLMs). In this blog...

MLOps Gym - Unity Catalog Setup for MLOps

Unity Catalog (UC) is Databricks' unified governance solution for all data and AI assets on the Data Intelligence Platform. UC is central to implementing MLOps on Databricks as it is where all your as...