Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- How can we run scala in a jupyter notebook?

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-13-2021 11:58 PM

1 ACCEPTED SOLUTION

Accepted Solutions

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-20-2022 01:01 AM

Step1: install the package

pip install spylon-kernelStep2: create a kernel spec

This will allow us to select the Scala kernel in the notebook.

python -m spylon_kernel installStep3: start the jupyter notebook

ipython notebookAnd in the notebook we select

New -> spylon-kernelThis will start our Scala kernel.

Step4: testing the notebook

Let’s write some Scala code:

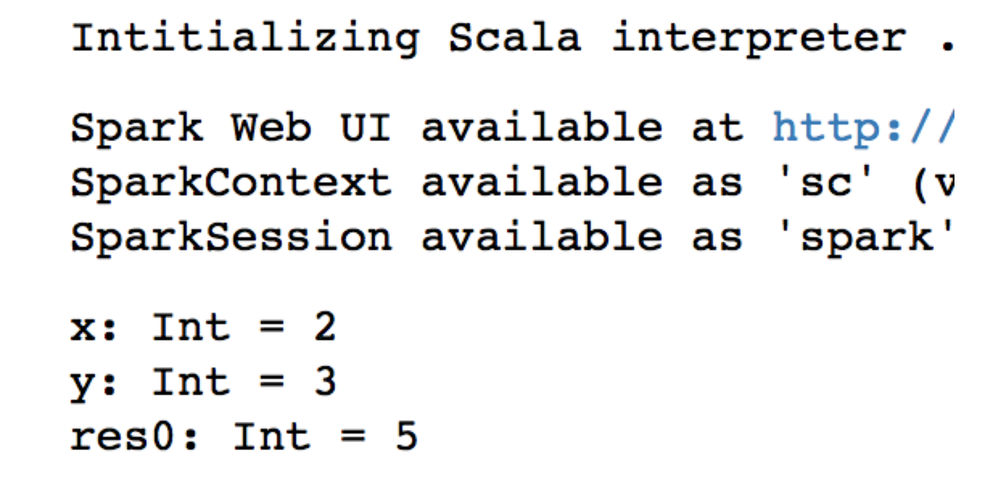

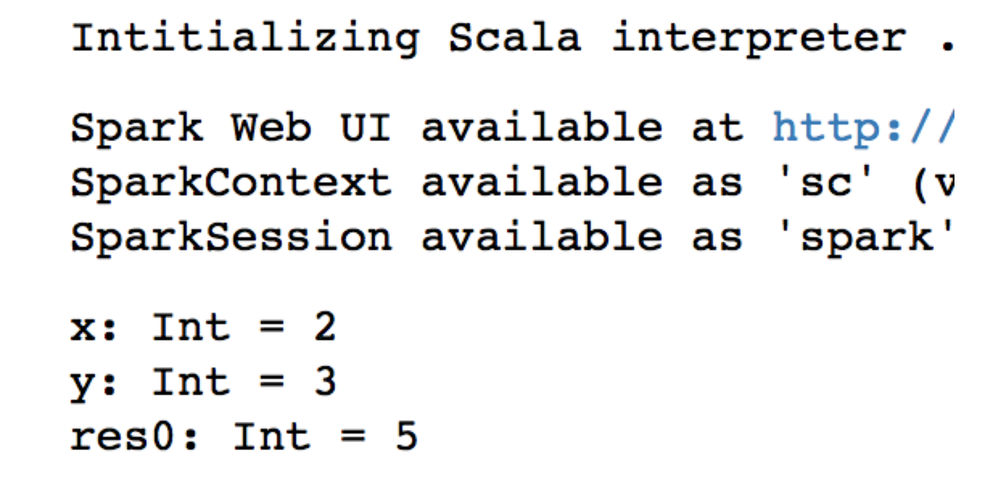

val x = 2

val y = 3

x+y

The output should be something similar to the result in the below image.

Now we can even use spark. Let’s test it by creating a data set:

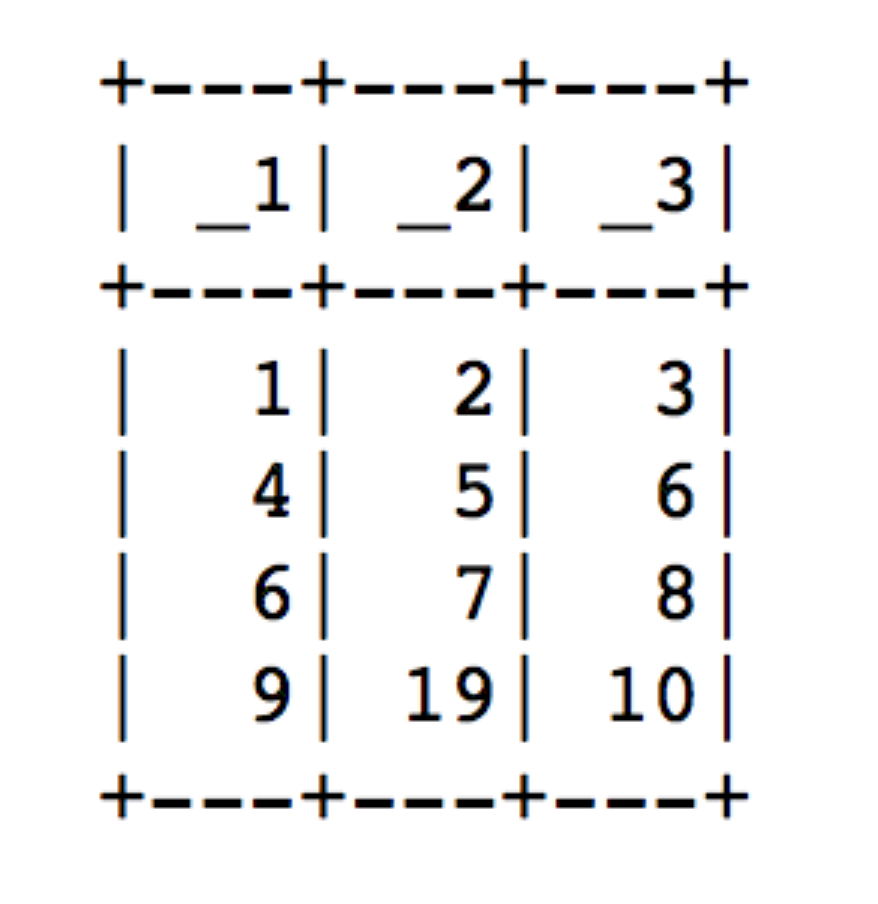

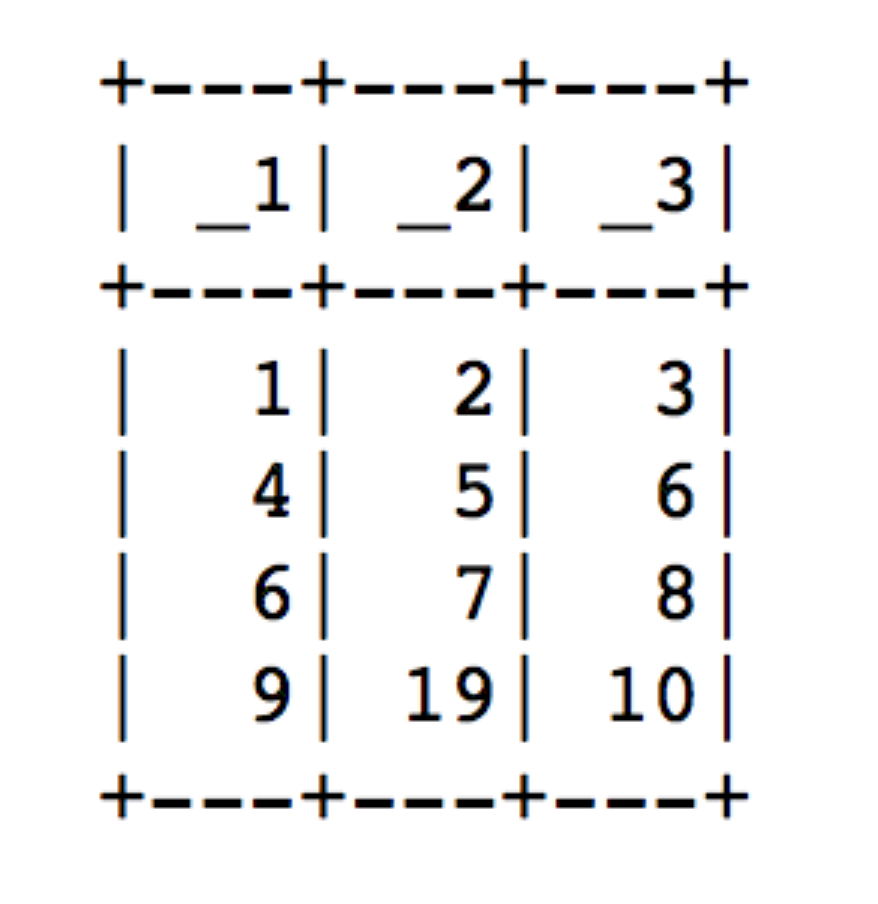

val data = Seq((1,2,3), (4,5,6), (6,7,8), (9,19,10))

val ds = spark.createDataset(data)

ds.show()This should output a simple data frame:

%python

:

%%python

x=2

print(x)For more info, you can visit the spylon-kernel Github page.

The notebook with the code above is available here.

1 REPLY 1

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-20-2022 01:01 AM

Step1: install the package

pip install spylon-kernelStep2: create a kernel spec

This will allow us to select the Scala kernel in the notebook.

python -m spylon_kernel installStep3: start the jupyter notebook

ipython notebookAnd in the notebook we select

New -> spylon-kernelThis will start our Scala kernel.

Step4: testing the notebook

Let’s write some Scala code:

val x = 2

val y = 3

x+y

The output should be something similar to the result in the below image.

Now we can even use spark. Let’s test it by creating a data set:

val data = Seq((1,2,3), (4,5,6), (6,7,8), (9,19,10))

val ds = spark.createDataset(data)

ds.show()This should output a simple data frame:

%python

:

%%python

x=2

print(x)For more info, you can visit the spylon-kernel Github page.

The notebook with the code above is available here.

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- SqlContext in DBR 14.3 in Data Engineering

- Connecting to Serverless Redshift from a Databricks Notebook in Data Engineering

- Module not found, despite it being installed on job cluster? in Data Engineering

- The spark context has stopped and the driver is restarting. Your notebook will be automatically in Data Engineering

- Delta Lake Spark fails to write _delta_log via a Notebook without granting the Notebook data access in Data Engineering