Autoloader: How to identify the backlog in RocksDB


06-23-2021 04:25 PM

With S3-SQS it was easy to identify the backlog (the messages that have been fetched from SQS but not yet consumed by the streaming job).

How can I find the same information with Auto Loader?

Labels: Autoloader, Spark streaming

1 ACCEPTED SOLUTION


06-23-2021 04:29 PM

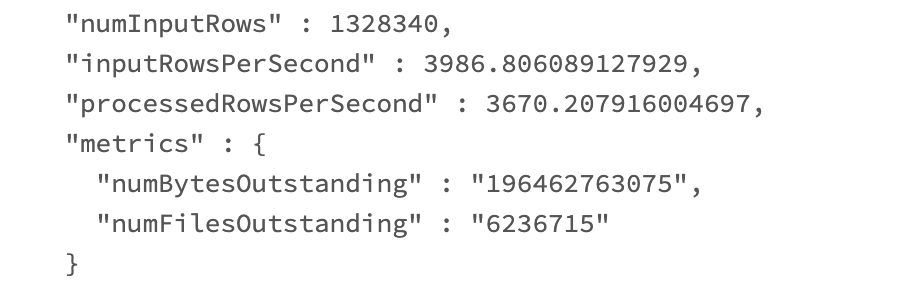

For DBR 8.2 or later, the backlog details are captured in the Streaming metrics

Eg:
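As a rough illustration of reading those metrics programmatically, here is a minimal Scala sketch. It assumes the backlog figures appear in the source's metrics as string values under the keys `numFilesOutstanding` and `numBytesOutstanding`; the helper `AutoLoaderBacklog` and the sample values are hypothetical and exist only for this example.

```scala
import scala.util.Try

// Hypothetical helper: pulls backlog figures out of a source's metrics map.
// Assumption: the metrics are string-valued and keyed by
// "numFilesOutstanding" and "numBytesOutstanding".
object AutoLoaderBacklog {
  final case class Backlog(files: Option[Long], bytes: Option[Long])

  def fromMetrics(metrics: Map[String, String]): Backlog = {
    // Returns None when the key is absent or the value is not a number.
    def asLong(key: String): Option[Long] =
      metrics.get(key).flatMap(v => Try(v.trim.toLong).toOption)

    Backlog(asLong("numFilesOutstanding"), asLong("numBytesOutstanding"))
  }
}

// Sample values made up for illustration only.
val sample = Map(
  "numFilesOutstanding" -> "238",
  "numBytesOutstanding" -> "163939124"
)

val backlog = AutoLoaderBacklog.fromMetrics(sample)
println("Files in backlog: " + backlog.files.map(_.toString).getOrElse("n/a"))
println("Bytes in backlog: " + backlog.bytes.map(_.toString).getOrElse("n/a"))
```

In a notebook, the map would presumably come from the running query's latest progress for the Auto Loader source; how that map is exposed depends on the runtime version, so treat the input side as an assumption to verify against your cluster.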

2 REPLIES


06-23-2021 04:27 PM

For DBR versions prior to 8.2, use the code snippet below:

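    import org.rocksdb.Options
    import org.apache.hadoop.fs.Path
    import com.databricks.sql.rocksdb.{CloudRocksDB, PutLogEntry}

    // RocksDB directory under the stream's checkpoint location (sources/0/rocksdb)
    val rocksdbPath: String = new Path("/tmp/hari/streaming/auto_loader2/sources/0/", "rocksdb").toString

    // Keep the write-ahead log effectively unbounded
    val rocksDBOptions = new Options()
    rocksDBOptions
      .setCreateIfMissing(true)
      .setMaxTotalWalSize(Long.MaxValue)
      .setWalTtlSeconds(Long.MaxValue)
      .setWalSizeLimitMB(Long.MaxValue)

    val rocksDB = CloudRocksDB.open(
      rocksdbPath,
      hadoopConf = spark.sessionState.newHadoopConf(),
      dbOptions = rocksDBOptions,
      opTypePrefix = "autoIngest")

    println("Latest offset in RocksDB: " + rocksDB.latestDurableSequenceNumber)

    // latestSeqFromQueryProgression is not defined in this snippet: set it to the
    // latest sequence number reported in the streaming query's progress before running.
    // The parentheses are required; without them the subtraction is applied to a String.
    println("Number of files to be processed: " + (rocksDB.latestDurableSequenceNumber - latestSeqFromQueryProgression))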

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-23-2021 04:29 PM
