
Is there a way to validate the values of spark con...


Anonymous


06-10-2021 02:54 PM

We can set, for example, `spark.conf.set('aaa.test.junk.config', 99999)` and then run `spark.conf.get('aaa.test.junk.config')`, which will return the value we set.

The problem occurs when I accidentally set a property whose name is close to, but not the same as, a real one. For example:

`spark.conf.set('spark.sql.shuffle.partition', 999)` ==> without the trailing 's'

whereas the actual property is `spark.sql.shuffle.partitions` ==> with a trailing 's'.

Running `spark.conf.get('spark.sql.shuffle.partition')` (without the trailing 's') still returns a value, so nothing tells me the key is wrong.

I thought I could use `getAll()` as a validation, but `getAll()` may not return properties that are explicitly set in a notebook session.

Is there a way to check whether what I have used as a config parameter is actually valid? I don't see any error message either.
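A minimal sketch of one possible check, assuming PySpark 2.4 or later: `spark.conf` is a `RuntimeConfig`, and its `isModifiable(key)` method returns True only for Spark SQL keys that Spark has registered and that can be changed at runtime. The misspelled `spark.sql.shuffle.partition` should therefore return False, while `spark.sql.shuffle.partitions` returns True. The helper `set_sql_conf_checked` and its `known_keys` parameter are hypothetical names for illustration. One limitation: valid keys that are not Spark SQL settings (for example Hadoop or platform-specific keys, or arbitrary keys like `aaa.test.junk.config`) will also be rejected.

```python
import difflib
from typing import Iterable


def set_sql_conf_checked(conf, key: str, value, known_keys: Iterable[str] = ()) -> None:
    """Set a Spark SQL config only if Spark recognizes the key as runtime-modifiable.

    `conf` is expected to be `spark.conf` (pyspark.sql.conf.RuntimeConfig),
    whose `isModifiable` method exists in PySpark 2.4+. `known_keys` is an
    optional, hypothetical list of valid key names used only to suggest a fix.
    """
    if not conf.isModifiable(key):
        # Look for the closest valid key name, e.g. the same key plus a trailing 's'.
        matches = difflib.get_close_matches(key, list(known_keys), n=1)
        suffix = f" Did you mean {matches[0]!r}?" if matches else ""
        raise ValueError(f"{key!r} is not a modifiable Spark SQL config.{suffix}")
    # Only reached for keys Spark reports as modifiable SQL configs.
    conf.set(key, value)
```

For example, `set_sql_conf_checked(spark.conf, 'spark.sql.shuffle.partition', 999, known_keys=['spark.sql.shuffle.partitions'])` should raise a `ValueError` suggesting the correct key instead of silently storing the typo. If you want a fuller list for `known_keys`, `spark.sql("SET -v")` lists the SQL configuration properties with a `key` column, though it is worth double-checking its output on your runtime.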

1 ACCEPTED SOLUTION


06-17-2021 11:19 AM


07-30-2021 02:38 PM

You would solve this the same way we solve it for all loose string references: create a constant that holds the key you want to ensure doesn't get mistyped.

Naturally, if you type it wrong the first time, it will be wrong everywhere, but that is true for all software development. Beyond that, a simple assert will catch regressions where someone changes your value.

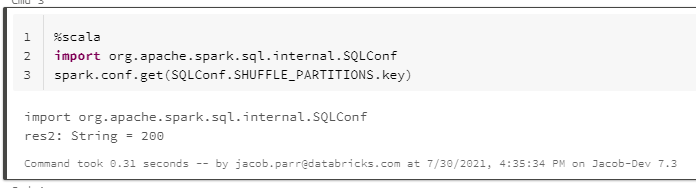

The good news is that this has already been done for you, and you could simply include it in your code if you wanted to, as seen here:

If you are using Python, I would go ahead and create your own constants and assertions, given that integrating with the underlying Scala code just wouldn't be worth it (in my opinion).
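For the Python route, a minimal sketch of the constants-plus-assertion idea could look like the following. `SparkConfKey` and `set_conf` are hypothetical names, and the key list is something you would maintain by hand for the settings your project actually uses. A typo such as `SparkConfKey.SHUFFLE_PARTITION` fails immediately with an `AttributeError` instead of silently creating a new config entry.

```python
from enum import Enum


class SparkConfKey(str, Enum):
    """Hypothetical, hand-maintained constants for the config keys this project uses."""

    SHUFFLE_PARTITIONS = "spark.sql.shuffle.partitions"
    ADAPTIVE_ENABLED = "spark.sql.adaptive.enabled"


def set_conf(conf, key: SparkConfKey, value) -> None:
    """Set a config value, accepting only members of SparkConfKey.

    `conf` is anything with a `set(key, value)` method, e.g. `spark.conf`.
    """
    # Reject bare strings so every call goes through the constants above.
    if not isinstance(key, SparkConfKey):
        raise TypeError(f"expected a SparkConfKey member, got {key!r}")
    conf.set(key.value, value)


# Regression guard (e.g. in a unit test): fails loudly if the constant is edited by mistake.
assert SparkConfKey.SHUFFLE_PARTITIONS.value == "spark.sql.shuffle.partitions"
```

In a notebook you would then call `set_conf(spark.conf, SparkConfKey.SHUFFLE_PARTITIONS, 999)`. This only protects against typos after the constant is defined; a constant that is wrong from the start is still wrong everywhere.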
