Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- How to solve column header issues in Spark SQL dat...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to solve column header issues in Spark SQL data frame

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-24-2017 05:52 AM

My code :

val name = sc.textFile("/FileStore/tables/employeenames.csv")

case class x(ID:String,Employee_name:String)

val namePairRDD = name.map(_.split(",")).map(x => (x(0), x(1).trim.toString)).toDF("ID", "Employee_name")

namePairRDD.createOrReplaceTempView("namePairRDD")

val df = sqlContext.sql("SELECT * FROM namePairRDD ")

df.show()

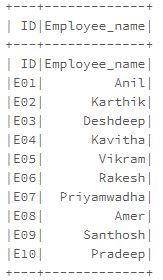

I dont know how ID and Employee_name shows up in the first row.

I tried appending the table by making column as headers, that dint work.

Please help.

Labels:

- Labels:

-

Spark sql

4 REPLIES 4

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-24-2017 08:07 AM

First you are bouncing between the RDD and DataFrames API. If you start with a SparkSession object from the DF API instead you can make the call

spark.read.option("header", "true").csv(path)then you don't have to split the file, convert from RDD to DF and the first column will be read as a header instead of as data.

For more information you can also look at the ~20 other options available to the DataFrameReader (spark.read)

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-24-2017 08:18 AM

I understand we can solve this in multiple ways. I am trying to solve this Question. Hence i am following the flow.

Write the missing Spark SQL queries to join all the three tables, sort the table, and display the output in the given format: ID, Name, Salary, Manager Name.

Instructions:

1. Table Names are highlighted in the following queries

Spark sql

val manager = sc.textFile("/user/EmployeeManagers")

val managerRDD = manager.map(x => (x.split(",")(0), x.split(",")(1)))

val name = sc.textFile("/user/EmployeeNames")

val namePairRDD = name.map(x => (x.split(",")(0), x.split(",")(1)))

val salary = sc.textFile("/user/EmployeeSalary")

val salaryPairRDD = salary.map(x => (x.split(",")(0), x.split(",")(1)))

<Write your code>

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-28-2017 08:43 AM

Fair enough... let's try another solution then.

One option would be to use sc.wholeTextFiles(..) and simply remove the first line of the file. Personally, I don't like this because each file is forced into one partition and if it is a really large file, then I can have issues with running out of memory.

A second (and third) option revolves around how do I filter() out row #1 which contains a header and in this case would require having special knowledge about the data.

In your example above, the first column has values like E01, E02, E03, etc. If we can say definitively that the value "ID" is invalid then we can simply exclude any record with that value. You would do this just after you split the CSV file up, or more specifically, after the call to xxx.map(). It has the disadvantage that you are only checking one column of data and may not be as accurate as you need.

A third option, possibly easier to implement, is to filter() out any line of text that is a specific value - that is the entire line matches your column headers. In this case, the filter accepts any line that does not equal "ID,Employee_name". You would do this just after the call to sc.textFile() and before xxx.map().

And if you really want to get tricky, you can read in just the first line of your file to determine what the header is, and then use it in the filter with option three above.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-19-2019 08:25 PM

Hi, I have the opposite issue. When I run and SQL query through the bulk download as per the standard prc fobasx notebook, the first row of data somehow gets attached to the column headers. When I import the csv file into R using read_csv, R thinks I have 13 columns whenI in fact only have 7. The last column that should appear has a the observation from the first cell in the second row attached to it's header. Why does this happen and how can I fix it. Thanks.

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Spark CSV file read option to read blank/empty value from file as empty value only instead Null in Data Engineering

- When reading a csv file with Spark.read, the data is not loading in the appropriate column while pas in Data Engineering

- Unable to create a record_id column via DLT - Autoloader in Data Engineering

- Partition in Spark in Data Engineering

- Misleading UNBOUND_SQL_PARAMETER even though parameter specified in Warehousing & Analytics