Spark: How to simultaneously read from and write to the same parquet file

09-26-2019 03:37 AM

How can I read a DataFrame from a parquet file, apply transformations, and write the modified DataFrame back to the same parquet file?

If I attempt to do so, I get an error, understandably, because Spark is still reading from the source and cannot write back to it at the same time. Let me reproduce the problem:

df = spark.createDataFrame([(1, 10),(2, 20),(3, 30)], ['sex','date'])

# Save as parquet

df.repartition(1).write.format('parquet').mode('overwrite').save('.../temp')

# Load it back

df = spark.read.format('parquet').load('.../temp')

# Save it back - This produces ERROR

df.repartition(1).write.format('parquet').mode('overwrite').save('.../temp')

ERROR:

java.io.FileNotFoundException: Requested file maprfs:///mapr/.../temp/part-00000-f67d5a62-36f2-4dd2-855a-846f422e623f-c000.snappy.parquet does not exist. It is possible the underlying files have been updated. You can explicitly invalidate the cache in Spark by running 'REFRESH TABLE tableName' command in SQL or by recreating the Dataset/DataFrame involved.
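The error follows from lazy evaluation: `load` only records where the files are, and the rows are actually read when the write job runs, by which point `overwrite` mode has already removed the old files. The snippet below is a plain-Python analogy, not Spark code (the helper `lazy_rows` and the file name `data.csv` are made up for illustration). It shows the same ordering problem with a generator, although here the data is silently lost instead of raising an exception.

```python
import os
import tempfile


def lazy_rows(path):
    """Yield lines from path; the file is not opened until iteration starts."""
    with open(path) as src:
        for line in src:
            yield line


# Create a small source file with three rows.
path = os.path.join(tempfile.mkdtemp(), "data.csv")
with open(path, "w") as f:
    f.write("1,10\n2,20\n3,30\n")

rows = lazy_rows(path)  # lazy "read": nothing has been opened or read yet

# "Overwrite" the same file: opening with "w" truncates it first,
# and only then does the generator start reading from it.
with open(path, "w") as out:
    rows_written = sum(1 for _ in map(out.write, rows))

print(rows_written)           # rows that survived the round trip
print(os.path.getsize(path))  # size of the file after the overwrite
```

Both printed values are 0, because the source was emptied before anything had been read from it.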

One workaround is to save the DataFrame to a differently named parquet folder, delete the old parquet folder, and then rename the new folder to the old name. But this is a very inefficient way of doing it, especially for DataFrames with billions of rows.

I did some research and found that people suggest running REFRESH TABLE to refresh the metadata, as can be seen here and here.

Can anyone suggest how to read from and then write back to exactly the same parquet file?
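For reference, the write/delete/rename workaround can be sketched as below. This is a minimal sketch that assumes the target is a directory on a local filesystem; on maprfs or HDFS the delete and rename would have to go through the cluster's filesystem API instead of `os` and `shutil`. The names `overwrite_via_temp` and `write_fn` are hypothetical, not part of any Spark API.

```python
import os
import shutil
import tempfile


def overwrite_via_temp(target_dir, write_fn):
    """Write new output next to target_dir, then swap it into place.

    write_fn(path) must write the complete new dataset to `path`,
    which does not exist yet when write_fn is called.
    """
    parent = os.path.dirname(os.path.abspath(target_dir))
    tmp_dir = tempfile.mkdtemp(prefix=".tmp_write_", dir=parent)
    staging = os.path.join(tmp_dir, "data")
    try:
        write_fn(staging)  # the old data is still readable at this point
        if not os.path.isdir(staging):
            raise RuntimeError("write_fn produced no output; old data kept")
        if os.path.exists(target_dir):
            shutil.rmtree(target_dir)   # delete the old folder
        os.rename(staging, target_dir)  # give the new folder the old name
    finally:
        shutil.rmtree(tmp_dir, ignore_errors=True)


# Possible use with PySpark (assumes `df` was loaded from `path`):
#   overwrite_via_temp(path, lambda p: df.repartition(1).write.parquet(p))
```

The staging folder is created beside the target so that the final rename stays on one filesystem. The old folder is only deleted after the new data has been fully written.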

Labels: Metadata, Parquet files, Spark

3 REPLIES

05-03-2020 09:25 PM

It worked for me this way:

import org.apache.spark.sql.functions._

val df = Seq((1, 10), (2, 20), (3, 30)).toDS.toDF("sex", "date")
df.show(false)

// save it
df.repartition(1).write.format("parquet").mode("overwrite").save(".../temp")

// read back again
val df1 = spark.read.format("parquet").load(".../temp")
val df2 = df1.withColumn("cleanup", lit("Quick silver want to cleanup"))

// BELOW 2 ARE IMPORTANT STEPS: cache and show
df2.cache // cache to avoid FileNotFoundException
df2.show(2) // light action; println(df2.count) is also fine

df2.repartition(1).write.format("parquet").mode("overwrite").save(".../temp")

df2.show(false)

This works; the only requirement is that you cache the DataFrame and perform a small action such as show(1) before writing. It is an alternative route that I thought of proposing to users who absolutely need it.
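Since the question was asked in Python, a PySpark version of the same cache-then-write idea might look like the sketch below. It assumes `spark` is an active SparkSession; the function name `rewrite_in_place` and the `transform` parameter are hypothetical. It uses `count()` as the action because `count()` evaluates every partition, whereas `show(n)` may evaluate only as many partitions as it needs to display n rows. Note that the cache is best-effort: if cached blocks are evicted or an executor is lost, Spark may try to re-read the files that were already deleted, so for data you cannot afford to lose, writing to a different location first is the safer route.

```python
def rewrite_in_place(spark, path, transform):
    """Read parquet at `path`, apply `transform`, and overwrite `path`.

    spark:     an active SparkSession (assumed to be provided by the caller)
    transform: a function that takes a DataFrame and returns a DataFrame
    """
    df = transform(spark.read.format("parquet").load(path))
    df.cache()  # mark the result for caching
    df.count()  # action that touches every partition and fills the cache
    df.write.format("parquet").mode("overwrite").save(path)
    return df   # returned so the caller can unpersist() it afterwards
```

With the original example this would be called as `rewrite_in_place(spark, '.../temp', lambda d: d.repartition(1))`.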

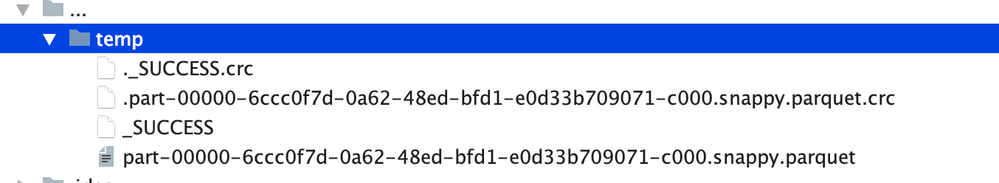

Result: the file was read and overwritten.

07-21-2020 11:05 AM

Thanks, it worked.

09-03-2020 03:41 PM

Hi,

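You can use insertInto instead of save. It overwrites the target, so there is no need to cache or persist your DataFrame. Note that insertInto takes the name of an existing table rather than a file path, so "table_name" below is a placeholder:

df.write.format("parquet").mode("overwrite").insertInto("table_name")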

~Saravanan
