Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- How can I export a result of a SQL query from a da...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How can I export a result of a SQL query from a databricks notebook?

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-29-2015 04:19 AM

The "Download CSV" button in the notebook seems to work only for results <=1000 entries. How can I export larger result-sets as CSV?

Labels:

- Labels:

-

CSV

12 REPLIES 12

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-31-2015 09:58 AM

Hi,

You can write the results back to S3 and download them from directly from S3. Our DB Guide notebooks should have a sample for you to look at. Please search for Download All SQL and you should see Scala and python examples.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-24-2017 10:13 AM

It woul be really great if you could add a simple option to download a table in the "data" tab. As a data scientist, I can say this is a pretty basic part of the data science process -- for example if you are building documentation or whitepapers for a model, you will need samples of data, correlation matrices, etc.

Anonymous

Not applicable

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-28-2017 11:24 AM

You can also write to dbfs:/FileStore/download.csv, and then download by going to https://community.cloud.databricks.com/files/download.csv

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-29-2019 11:57 PM

Hi doug,

Can you provide more details on this? Not sure how to write to the filestore.

Thanks.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-18-2020 07:27 AM

yes, would you please provide more in detail on this? the link is not working (HTTP ERROR: 404" thank you

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-10-2020 08:12 PM

To write to the filestore you'd do:

df.write.option("header", "true").csv("dbfs:/FileStore/download.csv") and then download from

https://community.cloud.databricks.com/files/download.csv

However, Databricks now has the feature to just click "Download" when displaying a result-set, which the other answers below also mention. It will download the first 1 million rows.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-03-2020 12:16 PM

I have asked this question before since the link is broken.

How do I export sql query into csv and send email out. Also, how can I select a billion records in csv. Databrick allows to export only max a million record but we have 1.5 billion

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-10-2020 08:02 AM

Hello,

I usually use pyspark to do such action. Some example code of how I write it to DBFS from a pyspark data frame:data_frame.coalesce(1).write.format("com.databricks.spark.csv").save("/FileStore/tables/your_file_name.csv")If you are curious how to transfer from straight SQL to pyspark, you can try creating a temporary table within SQL and then reading it into a pyspark data frame to then write it out. Below code is given you have imported the respective libraries.

%python

data_frame = spark.table("your_temp_table")

Please note I normally do not have export data of this size. You may need to research more the coalesce function and may be required to combine multiple csv files.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-10-2020 06:49 PM

After rereading your question, this is quite simple, when downloading a csv from the notebook there will be a down arrow indicator on the right side of the symbol. All you need to do is click that drop down and click download full results (1,000,000 max)

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-10-2020 07:31 PM

Cobly,

I know how to download a csv from a notebook via using a down arrow indicator on the bottom of the query. But I want to know how to create a script to pull the csv directly send out email and/or store directly in the indicate folder like oracle/mysql. Also, the query is about 1.5 billion records, 1 million max is not an option.

Thank you.

Bach-Nga

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-04-2021 11:27 PM

You can compose the outcomes back to S3 and download them from straightforwardly from S3. Our DB Guide note pads ought to have an example for you to take a gander at. If it's not too much trouble, look for Download All SQL and you should see Scala and python models.

Anonymous

Not applicable

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-31-2022 12:21 PM

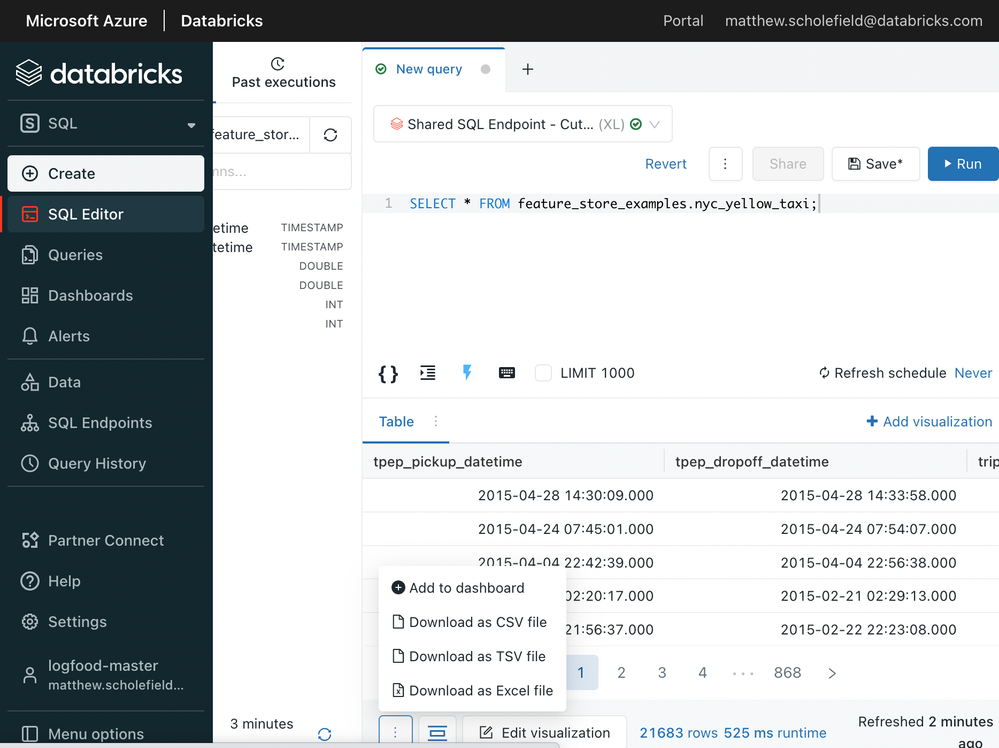

Now within the Databricks SQL interface (within the SQL editor) you can actually download the full results as a csv. Just make sure to uncheck "LIMIT 1000" and then click the download button under "..." in the bottom left:

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Passing Parameters from Azure Synapse in Data Engineering

- Variables passed from ADF to Databricks Notebook Try-Catch are not accessible in Data Engineering

- I am getting NoneType error when running a query from API on cluster in Data Engineering

- Errors When Using R on Unity Catalog Clusters in Data Engineering

- Help - org.apache.spark.SparkException: Job aborted due to stage failure: Task 47 in stage 2842.0 in Machine Learning