
How can we join two PySpark DataFrames side by side (without using a join, i.e. the equivalent of pd.concat() in pandas)? I am trying to combine two extremely large DataFrames, each on the order of 50 million rows.


07-15-2021 08:11 AM

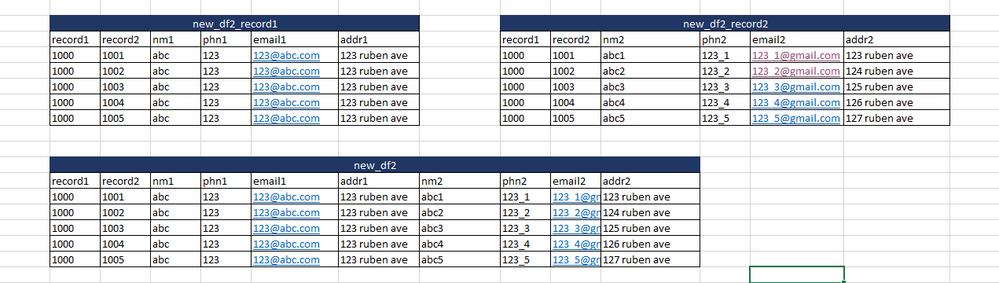

My two DataFrames look like new_df2_record1 and new_df2_record2, and the expected output DataFrame is new_df2:

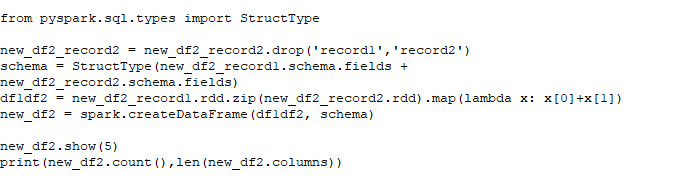
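For reference, the pandas behaviour the question wants to reproduce can be sketched as follows. The column names and values are invented stand-ins for new_df2_record1 and new_df2_record2, not the actual data shown in the images. Note that `pd.concat(..., axis=1)` aligns rows on index labels; with the default 0..n-1 index that amounts to matching rows by position.

```python
import pandas as pd

# Hypothetical stand-ins for new_df2_record1 and new_df2_record2 (columns invented).
left = pd.DataFrame({"id": [1, 2, 3], "a": ["x", "y", "z"]})
right = pd.DataFrame({"b": [10, 20, 30], "c": [0.1, 0.2, 0.3]})

# axis=1 places the frames side by side; rows are matched on index labels,
# which here are the default RangeIndex 0..2, i.e. matched by position.
combined = pd.concat([left, right], axis=1)
print(combined)
```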

The code I have tried is the following:

```python
from pyspark.sql.types import StructType

new_df2_record2 = new_df2_record2.drop('record1', 'record2')
schema = StructType(new_df2_record1.schema.fields + new_df2_record2.schema.fields)
df1df2 = new_df2_record1.rdd.zip(new_df2_record2.rdd).map(lambda x: x[0] + x[1])
new_df2 = spark.createDataFrame(df1df2, schema)

new_df2.show(5)
print(new_df2.count(), len(new_df2.columns))
```

If I print the top 5 rows of new_df2, the output is as expected, but I cannot print the total row count or the number of columns it contains. It gives the error:

```
ERROR Executor: Exception in task 2.0 in stage 6.0 (TID 8)
org.apache.spark.api.python.PythonException: Traceback (most recent call last):
  File "D:\Spark\python\lib\pyspark.zip\pyspark\worker.py", line 604, in main
  File "D:\Spark\python\lib\pyspark.zip\pyspark\worker.py", line 596, in process
  File "D:\Spark\python\lib\pyspark.zip\pyspark\serializers.py", line 259, in dump_stream
    vs = list(itertools.islice(iterator, batch))
  File "D:\Spark\python\lib\pyspark.zip\pyspark\serializers.py", line 326, in _load_stream_without_unbatching
    " in batches: (%d, %d)" % (len(key_batch), len(val_batch)))
ValueError: Can not deserialize PairRDD with different number of items in batches: (4096, 8192)
```

Labels: Pyspark Dataframes

2 Replies


07-15-2021 08:21 AM


06-06-2022 11:54 PM

Hi @Trina De, did you get the desired output?
