Is there a way to create a non-temporary Spark Vie...

10-14-2021 07:18 AM

Hi,

When creating a Spark view using Spark SQL ("CREATE VIEW AS SELECT ..."), the view is non-temporary by default: the view definition survives the Spark session as well as the Spark cluster.

In PySpark I can use DataFrame.createOrReplaceTempView or DataFrame.createOrReplaceGlobalTempView to create a temporary view for a DataFrame.

Is there a way to create a non-temporary Spark view for a DataFrame programmatically with PySpark?

spark.sql('CREATE VIEW AS SELECT ...') doesn't count 😉

I did not find a DataFrame method to do so...

Labels: Pyspark, Spark, Spark view, Spark-sql

1 ACCEPTED SOLUTION


11-10-2021 11:17 AM

OK, now I finally get it 🙂 So the whole question is how to run CREATE VIEW AS SELECT through the PySpark API.

We can see in dataframe.py that all views are temporary:

This PySpark API routes to Dataset.scala:

In Dataset.scala we can see the condition that would need to be rebuilt. It does not allow PersistedView:

val viewType = if (global) GlobalTempView else LocalTempView

It should be something like the logic in SparkSqlParser.scala:

val viewType = if (ctx.TEMPORARY == null) {
  PersistedView
} else if (ctx.GLOBAL != null) {
  GlobalTempView
} else {
  LocalTempView
}

So:

1) private def createTempViewCommand in Dataset.scala needs an additional viewType parameter and should be renamed (the name is already wrong since Global was added).

2) Then functions like createGlobalPersistantCommand etc. could be added in Dataset.scala.

3) Then def createGlobalPersistantView etc. could be added to dataframe.py.

Once that is done in Spark X (? :-) it will be possible.

Maybe someone wants to contribute and create commits 🙂
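
The branching quoted from SparkSqlParser.scala can be mirrored in plain Python to make the three outcomes explicit. This is only an illustrative sketch, not Spark code: `ViewType` and `select_view_type` are hypothetical names, and the two boolean flags stand in for the parser's `ctx.TEMPORARY` and `ctx.GLOBAL` checks.

```python
from enum import Enum


class ViewType(Enum):
    # Values mirror the Scala view type names quoted in the post.
    PERSISTED = "PersistedView"
    GLOBAL_TEMP = "GlobalTempView"
    LOCAL_TEMP = "LocalTempView"


def select_view_type(temporary: bool, global_: bool) -> ViewType:
    """Hypothetical mirror of the parser logic: no TEMPORARY keyword means persisted."""
    if not temporary:
        return ViewType.PERSISTED
    if global_:
        return ViewType.GLOBAL_TEMP
    return ViewType.LOCAL_TEMP


# The Dataset.scala condition quoted in the post only ever yields the last two values.
for flags in [(False, False), (True, True), (True, False)]:
    print(flags, select_view_type(*flags).value)
```

Seen this way, the proposal amounts to exposing the first branch through the DataFrame API as well.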

8 REPLIES


10-14-2021 08:07 AM

Just create a table instead.


10-14-2021 09:45 AM

Creating a table would imply data persistence, wouldn't it?

I don't want that.


10-14-2021 10:18 AM

Hi @Martin B.,

There are 2 types of views. TEMPORARY views are session-scoped and are dropped when the session ends, because their definitions are not persisted in the underlying metastore, if any. GLOBAL TEMPORARY views are tied to a system-preserved temporary database, global_temp. If you would like to know more, please refer to the docs.

If neither of these two options works for you, then the other option is to create a physical table, as @Hubert Dudek mentioned.

Thanks


10-16-2021 01:37 AM

Hi @Jose Gonzalez,

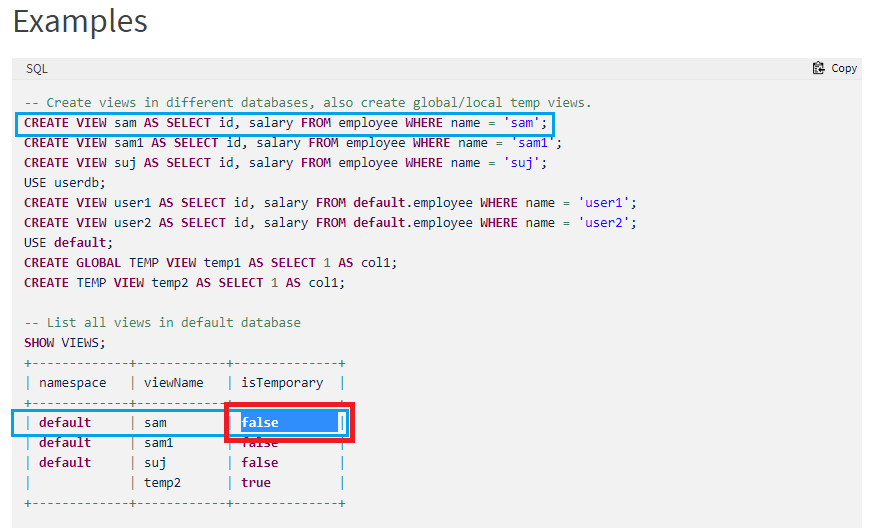

I would argue there are 3 types of views:

- TEMPORARY VIEWS
  - CREATE TEMPORARY VIEW sam AS SELECT * FROM ...

- GLOBAL TEMPORARY VIEWS
  - CREATE GLOBAL TEMPORARY VIEW sam AS SELECT * FROM ...

- NON-TEMPORARY VIEWS
  - CREATE VIEW sam AS SELECT * FROM ...

Please see the example here: https://docs.databricks.com/spark/latest/spark-sql/language-manual/sql-ref-syntax-aux-show-views.htm...
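
The three statements listed above differ only in the keywords between CREATE and VIEW. As a minimal sketch of that difference, the helper below builds the statement text for each kind of view. `build_create_view_sql`, the `kind` labels and the `raw_table` name are hypothetical and used only for illustration; they are not part of any Spark API.

```python
# Statement prefixes taken from the three view types listed in the post.
_PREFIXES = {
    "temporary": "CREATE TEMPORARY VIEW",
    "global_temporary": "CREATE GLOBAL TEMPORARY VIEW",
    "persistent": "CREATE VIEW",
}


def build_create_view_sql(view_name: str, select_sql: str, kind: str = "persistent") -> str:
    """Return the CREATE ... VIEW statement for the requested kind of view."""
    if kind not in _PREFIXES:
        raise ValueError(f"unknown view kind: {kind!r}")
    return f"{_PREFIXES[kind]} {view_name} AS {select_sql}"


# 'raw_table' is a made-up table name for the example.
for kind in _PREFIXES:
    print(build_create_view_sql("sam", "SELECT * FROM raw_table", kind))
```

In a Spark session the resulting string would be passed to spark.sql(...), which is exactly the route the original question is trying to avoid.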


11-10-2021 05:20 AM

@Jose Gonzalez or @Piper Wilson any ideas?


11-10-2021 05:29 AM

Why not create a managed table?

dataframe.write.mode("overwrite").saveAsTable("<example-table>")

# later, when we need the data

resultDf = spark.read.table("<example-table>")

11-10-2021 08:24 AM

@Hubert Dudek, creating a managed table means persisting the DataFrame (writing the content of the DataFrame to storage).

Imagine you have a Spark table (in Delta Lake format) containing your raw data. Every 5 minutes, new data is appended to that raw table.

I want to refine the raw data: filter it, select just specific columns, and convert some columns to a better data type (e.g. string to date). With VIEWs you can apply these transformations virtually. Every time you access the view, the data is transformed at access time, but you always get the current data from the underlying table.

When creating a managed table, you basically create a copy of the content of the raw table with the transformations applied. Persisting data comes at performance and storage costs.

Moreover, every time I want to access my "clean" version of the data, I have to specify the transformation logic again. VIEWs allow me to just access my transformed raw data without the need for manual refresh or persistence.
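
The difference between a view and a persisted copy can be shown with a small, self-contained sketch. It uses SQLite from the Python standard library as a stand-in for Spark, so it only illustrates general view semantics, not Spark or Delta Lake behaviour. The table and column names (`raw`, `clean_view`, `clean_copy`, `event_date`) are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw (id INTEGER, event_date TEXT, payload TEXT)")
conn.execute("INSERT INTO raw VALUES (1, '2021-11-10', 'a')")

# A view stores only the query; a table created from the same query stores a copy.
refine = "SELECT id, date(event_date) AS event_date FROM raw"
conn.execute(f"CREATE VIEW clean_view AS {refine}")
conn.execute(f"CREATE TABLE clean_copy AS {refine}")

# New raw data arrives after both objects were created.
conn.execute("INSERT INTO raw VALUES (2, '2021-11-11', 'b')")

view_rows = conn.execute("SELECT COUNT(*) FROM clean_view").fetchone()[0]
copy_rows = conn.execute("SELECT COUNT(*) FROM clean_copy").fetchone()[0]

# The view sees the appended row; the copy stays stale until it is rebuilt.
print("view:", view_rows, "copy:", copy_rows)
```

The view reflects the newly appended row without any refresh, while the copied table still holds only the data that existed when it was written.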

