Reply on inline runtime commands

10-25-2021 02:04 AM

I feel like the answer to this question should be simple, but none the less I'm struggling.

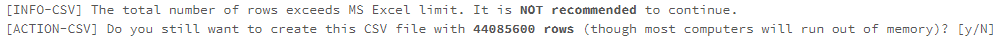

I run a python code that prompts me with the following warning:

I'm missing some kind of terminal to type 'y' into, or I am missing something else entirely 🙂

Can someone help me out?

Labels: Python Code, Runtime

1 ACCEPTED SOLUTION

10-26-2021 01:41 PM

Hi @Nickels Köhling,

In Databricks, you can only see this output in the driver logs. The driver logs page has three panes that display the output of "stdout", "stderr" and "log4j".

Anything your code writes with print() is displayed in the "stdout" pane of the driver logs.

If you are using a Spark logger, its output is displayed in the "log4j" pane.

If you would like to provide a value dynamically, I recommend using the input widgets in your notebook.
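
As a minimal sketch of the widget approach, assuming the notebook needs a yes/no answer like the 'y' prompt in the question: the widget name `confirm`, its choices, and the helper `is_confirmed` are hypothetical and not from the thread. `dbutils` only exists inside a Databricks notebook, so those calls are shown as comments and a plain string stands in for the widget value.

```python
def is_confirmed(raw_value: str) -> bool:
    """Interpret a widget value as a yes/no answer (hypothetical helper)."""
    return raw_value.strip().lower() in {"y", "yes"}


# Inside a Databricks notebook (dbutils is not available elsewhere):
#   dbutils.widgets.dropdown("confirm", "n", ["y", "n"], "Proceed?")
#   answer = dbutils.widgets.get("confirm")
# Outside Databricks, a plain string stands in for the widget value.
answer = "y"

if is_confirmed(answer):
    print("Proceeding with the conversion")
else:
    print("Skipping the conversion")
```

The value is chosen in the widget at the top of the notebook before the cell runs, so the code never has to wait for keyboard input.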

4 REPLIES

10-25-2021 03:05 AM

Typically, Spark (Databricks) is not made for downloading files locally to your laptop.

It is a distributed computing system optimized for parallel writes to some kind of storage (DBFS for Databricks).

I do not know what your use case is exactly, but if you want to download data, it might be a good idea to let Databricks write to a data lake, blob storage, etc. (something mounted in DBFS).

From there, you can download it to your computer if necessary.

It is also possible to download data from within a notebook (via the display command), but I think there is a hard limit on the amount of data that can be transferred that way.

10-25-2021 03:11 AM

Thank you for your reply, Werners. Actually, the thing with regard to my local computer was only to see if the code would run on a local instance by use of VS Code. So it was only for testing purposes.

What I'm trying to do in Databricks is to convert an NC file to CSV and store it in my data lake. In the process I receive the warning/exception shown above. Hence, my 'only' problem is that I don't know how to reply to the warning/exception from within a Databricks notebook at runtime...

I guess an alternative would be to suppress the warning/exception in the first place?
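
One way to answer such a prompt without a terminal can be sketched as follows, assuming the prompt comes from Python's built-in `input()`. The thread does not say which library raises it, so `risky_operation` is a hypothetical stand-in and `run_with_answer` is an illustrative helper, not part of any library. If the real code reads from `sys.stdin` directly, this sketch would not apply.

```python
import builtins
from unittest import mock


def risky_operation() -> str:
    """Hypothetical stand-in for library code that asks for confirmation."""
    reply = input("Proceed? [y/n] ")
    return "done" if reply.strip().lower() == "y" else "aborted"


def run_with_answer(func, answer: str = "y"):
    """Call func while every input() call returns the given answer."""
    with mock.patch.object(builtins, "input", return_value=answer):
        return func()


result = run_with_answer(risky_operation)
print(result)
```

The patch is only active inside the `with` block, so `input()` behaves normally again once the call returns.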

10-25-2021 05:26 AM

OK, so the NC file is of a type that Spark does not know.

That is why you read it in Python, to convert it, I suppose.

The thing is that if you use plain Python, Databricks behaves in the same way as your local computer, with the same limits.

The power of Spark is parallel processing. But Spark does not know this file format, so here we are.

I do not know this NC file format, but what you need is a reader that works on Spark (PySpark if you use Python).
