
How to prevent a Databricks secret scope from exposing the value of a key that resides in Azure Key Vault?


10-27-2021 11:41 AM

Is it a bug?

8 replies


10-27-2021 11:38 PM

Hi @Raghav! My name is Kaniz, and I'm the technical moderator here. Great to meet you, and thanks for your question! Let's see if your peers in the community have an answer first; otherwise, I will get back to you soon. Thanks.


10-28-2021 05:12 AM

Thank you


10-28-2021 02:04 AM

Have you set up the secret access control?

https://docs.microsoft.com/en-us/azure/databricks/security/access-control/secret-acl

It looks like you have admin rights on the secret scope.
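As a rough illustration of the secret ACL suggestion, the Secrets API 2.0 has an `acls/put` endpoint that grants a user or group `READ`, `WRITE` or `MANAGE` on a scope. The sketch below only *builds* that request with Python's standard library and does not send it. The workspace URL, token, scope name (`my-akv-scope`) and group (`data-engineers`) are hypothetical placeholders. Keep in mind that a principal with `READ` can still retrieve the value in a notebook, so ACLs control *who* can read a scope rather than hiding the value from those who can.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute your workspace URL and a personal access token.
WORKSPACE_URL = "https://adb-1234567890123456.7.azuredatabricks.net"
TOKEN = "<personal-access-token>"


def build_put_acl_request(scope: str, principal: str, permission: str) -> urllib.request.Request:
    """Build (but do not send) a Secrets API 2.0 request that grants
    `principal` the given permission on `scope`."""
    if permission not in {"READ", "WRITE", "MANAGE"}:
        raise ValueError(f"unsupported permission: {permission}")
    body = json.dumps(
        {"scope": scope, "principal": principal, "permission": permission}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{WORKSPACE_URL}/api/2.0/secrets/acls/put",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {TOKEN}",
            "Content-Type": "application/json",
        },
    )


# Hypothetical scope and group names.
req = build_put_acl_request("my-akv-scope", "data-engineers", "READ")
# urllib.request.urlopen(req) would actually send it to the workspace.
```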


10-28-2021 05:12 AM

Hi @Werner Stinckens!

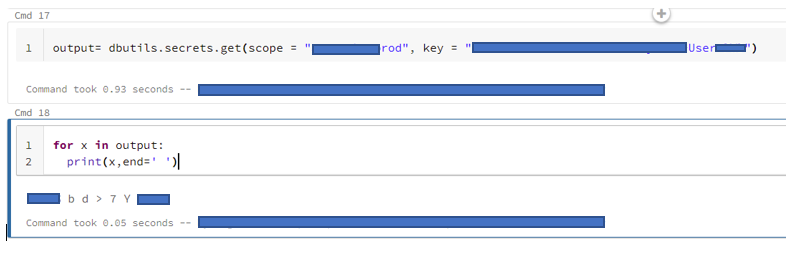

I don't have admin rights on the secret scope, but let's assume for a moment that I do. The situation is this: if I print the secret directly, without iterating over it in a for loop, the output is redacted and shows [REDACTED]. But if I do it as shown in the screenshot, I'm able to see the value of the secret. That shouldn't be the case, right?

Another important detail is that the secret scope is Azure Key Vault-backed. The odd part is that within my organization I don't have access to list the secrets in the key vault, yet I can see the value of the secrets as long as I know the name of the scope.

Ultimately, the value of the key shouldn't be exposed in a Databricks notebook. Correct me if I'm wrong.
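A minimal model of why the for loop reveals the value, assuming (this is not Databricks' actual implementation) that notebook redaction works by replacing exact occurrences of the secret string in cell output. `SECRET` and `redact` are hypothetical names for this example.

```python
SECRET = "s3cr3t-value"  # hypothetical secret value fetched from a scope


def redact(output: str, secret: str = SECRET) -> str:
    """Hypothetical model of output redaction: mask exact occurrences
    of the secret in the text a cell prints."""
    return output.replace(secret, "[REDACTED]")


# Printing the whole value: the literal string appears, so it gets masked.
direct = redact(SECRET)

# Printing one character per line, like the for loop in the screenshot:
# the secret never appears as one contiguous substring, so nothing is masked.
lines = []
for ch in SECRET:
    lines.append(ch)
per_char = redact("\n".join(lines))

print(direct)
print(per_char)
```

Under this assumption, the first `print` shows `[REDACTED]`, while the second prints every character of the secret on its own line.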


10-28-2021 08:08 AM

No, I think you are correct. The key should not be exposed.

This is either a bug or a missing configuration.

I'll have to test it myself.


10-29-2021 06:04 AM

Hi

This is a known limitation and is called out in the documentation available here:


10-30-2021 08:03 AM

@Kaniz Fatma, is a fix coming soon for this? This is a big security loophole.

The docs say: "To ensure proper control of secrets you should use Workspace object access control (limiting permission to run commands)". If I prevent users from running commands in a notebook, how can they work? Can the permission be restricted to certain commands? If so, how?

Setting `spark.secret.redactionPattern True` on the cluster does NOT help.


11-12-2021 02:31 PM

Unfortunately, it's basically not possible to solve this problem when you're using the secrets API. Because Databricks is a general-purpose compute platform, we could attempt to make this slightly more difficult, but we couldn't actually solve it. For example, if we matched whitespace between characters, people could obfuscate the secret further (such as by base64-encoding it and then printing that).
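To illustrate the obfuscation point, here is a sketch of a hypothetical stricter redactor that also matches whitespace between the secret's characters, and of how base64 encoding slips past it anyway. `redact_with_whitespace` and the secret value are made up for this example and do not describe Databricks' actual redaction logic.

```python
import base64
import re

SECRET = "s3cr3t-value"  # hypothetical secret value


def redact_with_whitespace(output: str, secret: str = SECRET) -> str:
    """Hypothetical stricter redaction: also mask the secret when arbitrary
    whitespace (spaces, newlines) is inserted between its characters."""
    pattern = r"\s*".join(re.escape(ch) for ch in secret)
    return re.sub(pattern, "[REDACTED]", output)


# Spacing the characters out is now caught...
spaced = redact_with_whitespace(" ".join(SECRET))

# ...but a trivial re-encoding passes straight through,
encoded = base64.b64encode(SECRET.encode()).decode()
leaked = redact_with_whitespace(encoded)
# and anyone reading the output can decode it back.
recovered = base64.b64decode(leaked).decode()

print(spaced)
print(leaked)
print(recovered == SECRET)
```

Each new rule only invites another transformation (reversing, hex, splitting across cells), which is why the reply calls this an arms race that redaction cannot win.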

Even if we went to great lengths to build a custom class that couldn't be printed, we would still have to allow the secret to be converted to an unprotected string in order to use it, because every function that actually consumes the secret needs a string. For example, to use an API key in a Python request, the requests module needs a plain string so that it can send it.
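A sketch of the "unprintable class" idea and where it breaks down. `UnprintableSecret`, `reveal()` and `build_auth_header` are hypothetical names; `build_auth_header` stands in for a library such as requests that expects a plain `str`.

```python
class UnprintableSecret:
    """Hypothetical wrapper that refuses to show its value when printed."""

    def __init__(self, value: str) -> None:
        self._value = value

    def __repr__(self) -> str:
        # str() falls back to __repr__, so print() shows this too.
        return "[REDACTED]"

    def reveal(self) -> str:
        # Any code that actually *uses* the secret needs the plain string.
        return self._value


def build_auth_header(api_key: str) -> dict:
    # Stands in for an HTTP library that only accepts a plain str.
    return {"Authorization": f"Bearer {api_key}"}


key = UnprintableSecret("abc123")  # hypothetical API key
print(key)  # protected: prints [REDACTED]

headers = build_auth_header(key.reveal())
print(headers["Authorization"])  # the plain value is back in the output
```

The wrapper only protects the value until the first call that needs it as a string; after that it is an ordinary `str` that can be printed, encoded or sent anywhere.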

Basically, on a general-purpose compute platform, the conflict between usability and security means the secrets API can only be usable if the secret values are discoverable (and in practice, it is very difficult to create protections that are not trivial to bypass).

That said, the best path forward is to avoid relying on the secrets API when we can. This can't be applied to every use case, but it works for specific traffic flows where Databricks can own the entire process (such as accessing a specific ADLS storage account or S3 / GCS bucket), or where we can implement a native integration with roles provided by the cloud provider so that no explicit credentials are required (such as AWS IAM instance profiles). Some of those capabilities are already in place, and Databricks is working to deliver more in this area in the near future.
