- i am trying to find different between two dates bu...

11-09-2021 10:32 PM

1 ACCEPTED SOLUTION

Accepted Solutions

11-15-2021 01:03 AM

13 REPLIES 13

11-09-2021 11:51 PM

Hi @gayatri! My name is Kaniz, and I'm the technical moderator here. Great to meet you, and thanks for your question! Let's see if your peers in the community have an answer first; otherwise I will get back to you soon. Thanks.

11-10-2021 01:23 AM

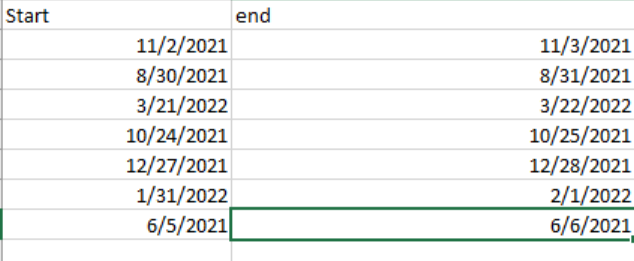

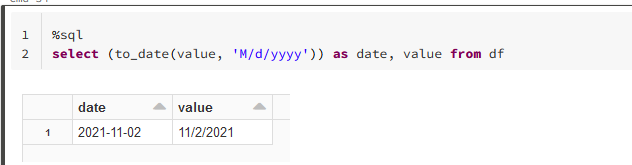

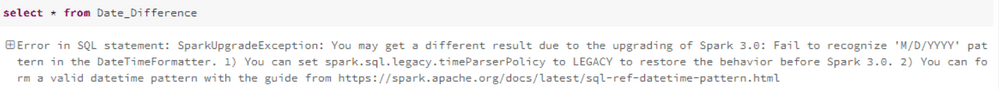

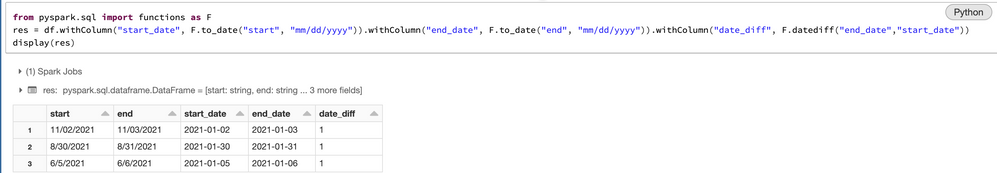

The trick is to make sure your columns are recognized as dates; otherwise they will be evaluated as strings.

"to_date(<datecol>, <date_format>)" does exactly this.

datecol is the column containing your date values, and date_format is the pattern your existing dates are written in. In your case that is "MM/dd/yyyy".

This returns values of Spark's DateType.

Once the columns are recognized as dates, you can start calculating with them.
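
As a minimal sketch of the above in Spark SQL: the two literals are made-up sample values (zero-padded so they match "MM/dd/yyyy"), and diff_days is just an illustrative alias. Note that datediff takes the end date first and the start date second.

```sql
-- Parse both strings into DateType, then count the days between them.
-- The literals are illustrative sample values, not data from this thread.
SELECT datediff(
         to_date('01/31/2021', 'MM/dd/yyyy'),  -- end date
         to_date('01/01/2021', 'MM/dd/yyyy')   -- start date
       ) AS diff_days;
-- diff_days = 30
```

With real data you would pass your own column names in place of the literals.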

11-14-2021 11:11 PM

I tried every way, but it is still showing null.

11-14-2021 11:19 PM

I notice that the days and months in your data have no leading zeroes,

so try M/d/yyyy instead.

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

You may have leading or trailing spaces too, so trim() might also help.

For sure this is not a bug, because the date functions in Spark are rock solid.
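
A small sketch of that suggestion, assuming Spark 3.x pattern rules. The inline VALUES rows, the alias t, and the column names raw_date and parsed_date are made up for illustration; they include unpadded values and one value with surrounding spaces.

```sql
-- trim() strips surrounding spaces; 'M/d/yyyy' accepts one- or two-digit
-- months and days. The rows below are invented sample data.
SELECT raw_date,
       to_date(trim(raw_date), 'M/d/yyyy') AS parsed_date
FROM VALUES ('6/5/2021'), (' 11/9/2021 '), ('12/25/2021') AS t(raw_date);
```

If parsed_date still comes back null for some rows, looking at those raw values directly (for example their length) is usually the quickest way to spot stray characters.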

11-14-2021 11:54 PM

11-15-2021 01:03 AM

11-15-2021 03:19 AM

Thank you, thank you so much, it works! Thank you 😊

11-15-2021 03:34 AM

Wow! Amazing.

11-10-2021 03:17 AM

Exactly as @Werner Stinckens said. Additionally you can share your file and script so we can help better.

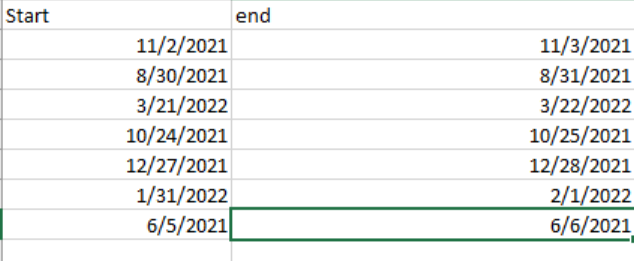

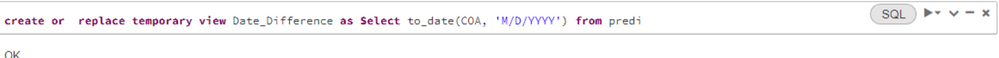

Your screenshot looks like Excel. If the file is in Excel format, please check that all the fields are formatted as dates (you can also change them to numbers, since every date is stored as the number of days from 31st December 1899). If it is in CSV format then, as Werner said, you need to specify the format, because a value such as 6/5/2021 can go wrong: it can be read as 5th June or as 6th May.
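
To illustrate the ambiguity, here is a short Spark SQL sketch that parses the same string with two different patterns. The aliases month_first and day_first are only illustrative names.

```sql
-- The same text yields two different dates depending on the pattern.
SELECT to_date('6/5/2021', 'M/d/yyyy') AS month_first,  -- 2021-06-05 (5th June)
       to_date('6/5/2021', 'd/M/yyyy') AS day_first;    -- 2021-05-06 (6th May)
```

This is why the pattern has to match how the source file was actually written, not just how the value looks.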

11-12-2021 05:39 AM

11-15-2021 12:17 AM

No, it's not working. I tried the function below:

    datediff(
      concat(
        split(start_date, '/')[2],
        '-',
        case
          when split(start_date, '/')[0] < 10 then concat('0', split(start_date, '/')[0])
          else split(start_date, '/')[0]
        end,
        '-',
        case
          when split(start_date, '/')[1] < 10 then concat('0', split(start_date, '/')[1])
          else split(start_date, '/')[1]
        end
      ),
      concat(
        split(end_date, '/')[2],
        '-',
        case
          when split(end_date, '/')[0] < 10 then concat('0', split(end_date, '/')[0])
          else split(end_date, '/')[0]
        end,
        '-',
        case
          when split(end_date, '/')[1] < 10 then concat('0', split(end_date, '/')[1])
          else split(end_date, '/')[1]
        end
      )
    ) as diff
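
For comparison, a shorter sketch that lets to_date do the padding work instead of split and concat. It assumes the columns hold month/day/year text as discussed in this thread; the inline VALUES row and the alias t are made-up sample data. One thing worth checking in the expression above: datediff expects the end date as its first argument, so passing start_date first gives a negative number when the end date is later.

```sql
-- datediff(endDate, startDate): end date goes first.
-- The single row below is invented sample data.
SELECT start_date,
       end_date,
       datediff(
         to_date(trim(end_date),   'M/d/yyyy'),
         to_date(trim(start_date), 'M/d/yyyy')
       ) AS diff
FROM VALUES ('6/5/2021', '6/15/2021') AS t(start_date, end_date);
-- diff = 10
```

Replacing the VALUES clause with your own table name should be the only change needed if your columns are named the same way.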

11-12-2021 03:49 PM

Hi @ahana ahana,

Did any of the replies help you solve this issue? If so, would you be happy to mark that answer as best so that others can quickly find the solution?

Thank you

11-15-2021 12:19 AM

No, I am not satisfied with the given answer.
