- Runtime SQL Configuration - how to make it simple

11-17-2021 07:00 AM

Hi, I'm running a couple of notebooks in my pipeline and I would like to set a fixed value for 'spark.sql.shuffle.partitions', the same value for every notebook. Should I do that by adding spark.conf.set.. code to each notebook (runtime SQL configurations are per-session), or is there another, easier way to set this?

Accepted Solutions

11-17-2021 07:30 AM

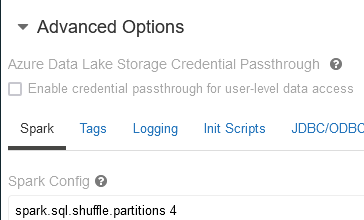

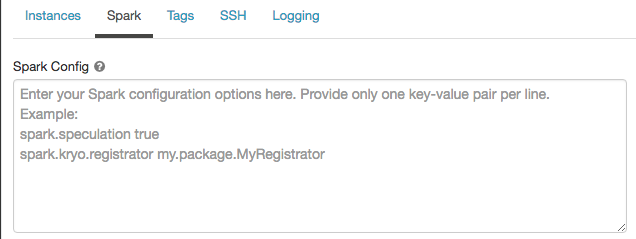

The easiest way is to set it in the Spark config under Advanced Options in the cluster settings.

More info here: https://docs.databricks.com/clusters/configure.html#spark-configuration
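As a rough illustration of what goes into that Spark config box: Databricks expects one property per line, written as the key and the value separated by a space. The sketch below only renders such lines from a dictionary so they can be pasted into the cluster settings. The helper name `render_spark_config` and the value 64 are placeholders for illustration, not something from this thread.

```python
def render_spark_config(settings):
    """Format settings as 'key value' lines, one property per line."""
    lines = []
    for key, value in settings.items():
        # Spark properties spell booleans in lowercase ("true"/"false").
        text = str(value).lower() if isinstance(value, bool) else str(value)
        lines.append(f"{key} {text}")
    return "\n".join(lines)


# 64 is a placeholder, not a recommended partition count.
shared_settings = {"spark.sql.shuffle.partitions": 64}
print(render_spark_config(shared_settings))
# prints: spark.sql.shuffle.partitions 64
```

Cluster-level Spark config is read when the cluster starts, so a change made there generally needs a cluster restart before notebooks see it.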

11-17-2021 08:01 AM

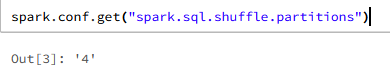

Setting it in either the notebook or the cluster should work, but the better option is the cluster's Spark config.

11-17-2021 10:21 AM

Hi @Leszek ,

Like @Hubert Dudek mentioned, I recommend adding your setting to the cluster's Spark configuration.

The difference between setting it in the notebook and on the cluster is:

- In the notebook, the Spark configuration applies only to that notebook's own Spark session.

- On the cluster, the Spark setting is global and applies to all notebooks that are attached to the cluster.
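If the cluster config is not an option, the per-notebook route can at least be kept in one place. Below is a minimal sketch, assuming a small shared helper that each notebook calls with its own session. The names `SHARED_SPARK_CONF` and `apply_shared_conf` and the value "64" are hypothetical; `spark.conf.set` and `spark.conf.get` are the standard runtime configuration calls on a Spark session.

```python
# Hypothetical shared settings; "64" is a placeholder value.
SHARED_SPARK_CONF = {"spark.sql.shuffle.partitions": "64"}


def apply_shared_conf(spark, settings=SHARED_SPARK_CONF):
    """Apply each setting to the given session and return the values read back."""
    applied = {}
    for key, value in settings.items():
        # Session-scoped: this affects only the session passed in.
        spark.conf.set(key, value)
        applied[key] = spark.conf.get(key)
    return applied
```

In a Databricks notebook, where `spark` is already defined, the first cell would call `apply_shared_conf(spark)`. Because the setting is per session, every notebook in the pipeline has to make that call itself, which is the overhead the cluster-level config avoids.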

11-17-2021 11:41 PM

11-18-2021 04:00 AM

Great that it is working. Any chance it could be selected as the best answer? 🙂
