Apart from notebooks, is it possible to deploy an application (PySpark or R + Spark) as a package or file and execute it in Databricks?

11-23-2021 08:04 AM

Hi,

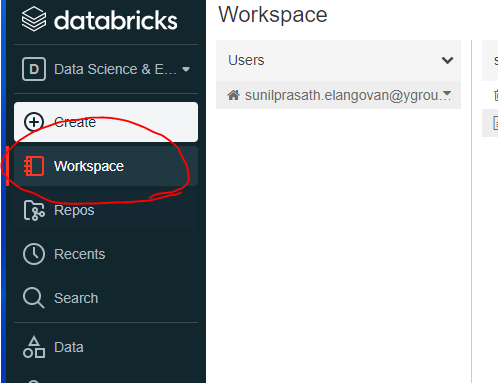

With the help of Databricks-connect i was able to connect the cluster to my local IDE like Pycharm and Rstudio desktop version and able to develop the application and committed the code in Git.

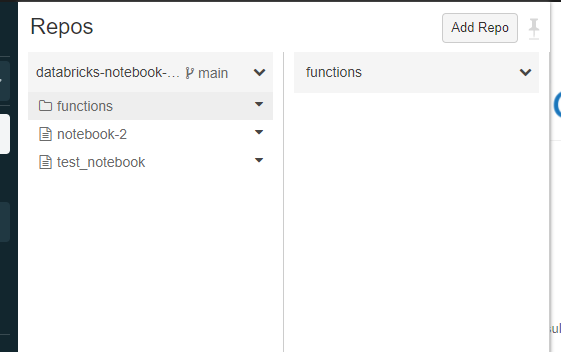

When i try to add that repo to the Databricks workspace , i noticed that python files which i created in Pycharm are not getting displayed. I see only the notebooks file.

Is there any option , to deploy those python files in databricks cluster and execute those files.

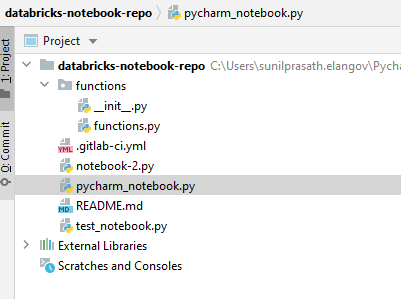

files present in pycharm

Most of my developers prefer to work in the IDE which they are familiar and comfortable. It helps them to develop and debug them quickly.

Kindly suggest , how i can address this problem.

Labels: Databricks workspace, Deploy, Help, Notebook, Spark
5 REPLIES

11-23-2021 08:59 AM

11-24-2021 12:51 AM

@Hubert Dudek Thanks for your response.

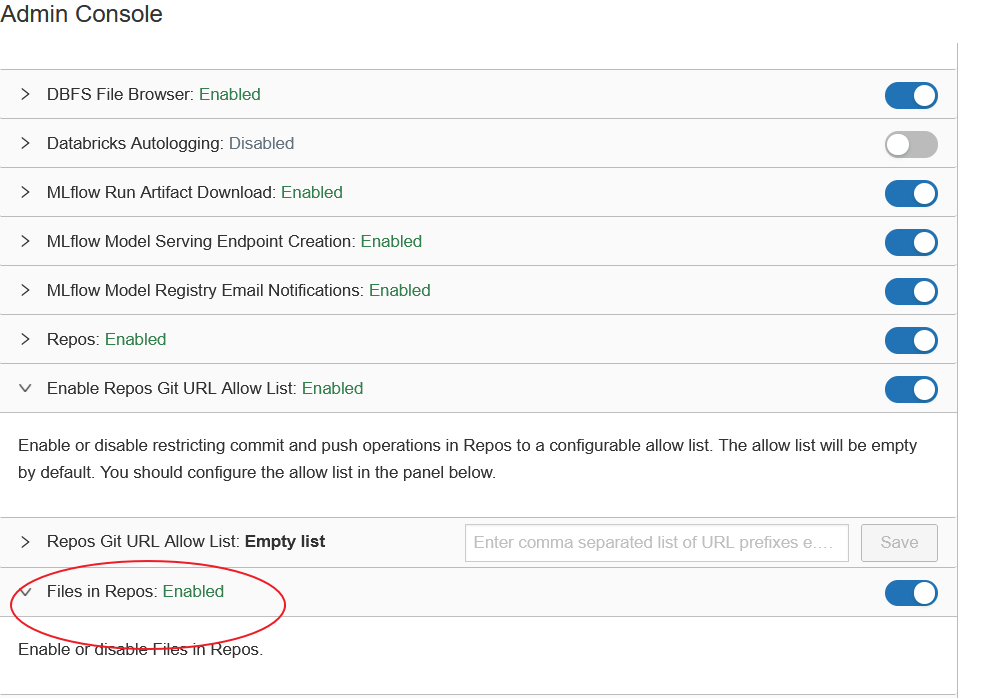

Yes now i can able to see the python files.

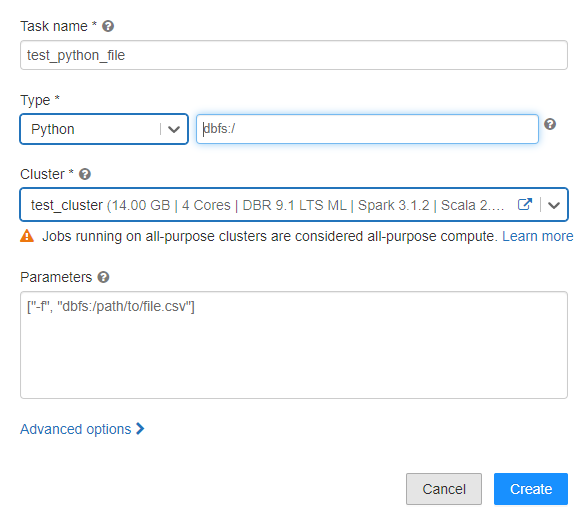

But how i can execute that python file , because i see the Job accepts only DBFS file location..

Is there any other way to execute that python file?

Our requirement is to do the development in IDEs Pycharm or Rstudio using the Databricks-connect and deploy the final version of code in databricks and execute them as Job. Is there any option available in Databricks
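For reference, the DBFS route mentioned above can be sketched roughly as follows. This is only an illustration, assuming the file has already been copied to DBFS (for example with `databricks fs cp`) and that the job is created through the Jobs API 2.1 `jobs/create` endpoint with a `spark_python_task`. The job name, task key, DBFS path, and cluster ID are hypothetical placeholders, and the snippet only builds the request body; it does not call the API.

```python
import json

# Hypothetical placeholders: substitute your own cluster ID and DBFS path.
CLUSTER_ID = "<existing-cluster-id>"
PYTHON_FILE = "dbfs:/FileStore/jobs/main.py"


def build_job_payload(name, python_file, cluster_id, parameters=None):
    """Build a request body for POST /api/2.1/jobs/create (nothing is sent)."""
    # The job UI described in this thread expects a DBFS location,
    # so reject anything that is not a dbfs:/ URI.
    if not python_file.startswith("dbfs:/"):
        raise ValueError("python_file is expected to be a dbfs:/ URI")
    return {
        "name": name,
        "tasks": [
            {
                "task_key": "run_main",  # hypothetical task name
                "existing_cluster_id": cluster_id,
                "spark_python_task": {
                    "python_file": python_file,
                    # Passed to the script as command-line arguments.
                    "parameters": list(parameters or []),
                },
            }
        ],
    }


payload = build_job_payload(
    "nightly-etl", PYTHON_FILE, CLUSTER_ID, ["--date", "2021-11-24"]
)
print(json.dumps(payload, indent=2))
```

The resulting JSON could then be sent with whatever HTTP client or CLI you normally use against your workspace.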

11-24-2021 03:23 AM

I just import the class from the .py file in a notebook and then use it:

from folder.file_folder.file_name import ClassName

Then I instantiate the class in the notebook.

I keep everything in Repos (notebooks and .py files). I have the repo open in Visual Studio and can edit the notebooks there as well. I haven't tested other IDEs, but it is just a Git repo with notebooks, so you can edit it in PyCharm too. Then in the job you can simply point to the notebook, which is much easier.
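A minimal sketch of that pattern, runnable outside Databricks: it fakes a repo root in a temporary directory, writes a small module into it, and imports a class from it the way a notebook cell would. The names `folder`, `file_folder`, `file_name`, and `Greeter` are hypothetical. The `sys.path` line stands in for whatever makes the repo root importable; in a Databricks repo I would expect the root to be on the path for notebooks already, but treat that as an assumption and add it yourself if the import fails.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Simulate a repo root that contains folder/file_folder/file_name.py.
repo_root = Path(tempfile.mkdtemp())
package_dir = repo_root / "folder" / "file_folder"
package_dir.mkdir(parents=True)

# Explicit package markers keep the import unambiguous.
(repo_root / "folder" / "__init__.py").write_text("")
(package_dir / "__init__.py").write_text("")

# The "application code" that would normally be written in the IDE.
(package_dir / "file_name.py").write_text(
    "class Greeter:\n"
    "    def __init__(self, name):\n"
    "        self.name = name\n"
    "\n"
    "    def greet(self):\n"
    "        return f'Hello, {self.name}'\n"
)

# Make the repo root importable, then import as a notebook cell would.
sys.path.insert(0, str(repo_root))
importlib.invalidate_caches()

from folder.file_folder.file_name import Greeter

greeter = Greeter("Databricks")
message = greeter.greet()
print(message)
```

With this layout the notebook stays a thin entry point for the job, while the logic lives in plain .py files that can be edited and tested in any IDE.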

11-24-2021 08:06 AM

11-29-2021 10:52 PM

You may be interested in Databricks Connect. I'm not sure whether it resolves your issue, but it lets you connect third-party tools and set up a supported IDE or notebook server:

https://docs.databricks.com/dev-tools/databricks-connect.html
