I want to compare two data frames. In output I wis...


12-20-2021 01:14 AM

1 ACCEPTED SOLUTION


01-11-2022 10:00 AM

Hi @vinita shinde

I don't know if it meets your requirement, but you can try something like this:

```python
import pyspark.sql.functions as F

def is_different(col1, col2, cols):
    # For a data column with a "b_" counterpart, compare the two values.
    # True means the value is the same in both DataFrames, False means it differs.
    # eqNullSafe treats two NULLs as equal instead of returning NULL.
    if col2 in cols:
        return F.col(col1).eqNullSafe(F.col(col2)).alias(col1)
    # ID columns have no "b_" counterpart, so they are passed through unchanged
    return F.col(col1)

def find_difference(data1, data2, id_columns):
    cols = data1.columns
    # First, find the rows of data1 that are not in data2
    data_difference = data1.subtract(data2)
    # Then join those rows with data2, prefixing data2's non-ID columns with "b_"
    data2_prefixed = data2.select(
        *[F.col(i) if i in id_columns else F.col(i).alias(f"b_{i}") for i in cols]
    )
    data_difference = data_difference.join(data2_prefixed, on=id_columns, how="left")
    # Finally, find out which columns cause the differences
    return data_difference.select(
        *[is_different(x, f"b_{x}", data_difference.columns) for x in cols]
    )

# df1_spark: first DataFrame
# df2_spark: second DataFrame
# third argument: list of ID column(s) that identify a row
# display() is the Databricks notebook helper for rendering a DataFrame
display(find_difference(df1_spark, df2_spark, ["ID"]))
```

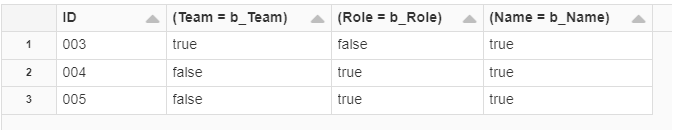

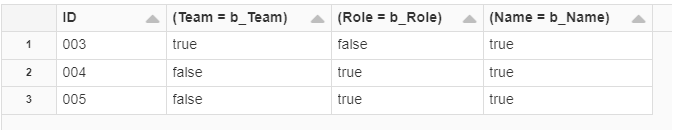

The results will be something like that.
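The three steps in `find_difference` (subtract, left join on the ID columns, per-column comparison) can be traced without a Spark cluster. The plain-Python sketch below is only an illustration, assuming each table is a list of dicts with the same keys and that IDs are unique in the second table; `find_difference_py` and the sample rows are hypothetical. In this sketch, a non-ID cell is `True` when the value matches the second table and `False` when it differs or the ID has no match there; two `None` values count as equal.

```python
def find_difference_py(rows1, rows2, id_columns):
    """Plain-Python sketch of the find_difference steps; rows are dicts with the same keys."""
    cols = list(rows1[0].keys()) if rows1 else []

    def as_tuple(row):
        return tuple(row[c] for c in cols)

    def id_of(row):
        return tuple(row[c] for c in id_columns)

    # Step 1: distinct rows of rows1 that do not appear in rows2 (like subtract / EXCEPT DISTINCT)
    rows2_set = {as_tuple(r) for r in rows2}
    seen, diff = set(), []
    for r in rows1:
        t = as_tuple(r)
        if t not in rows2_set and t not in seen:
            seen.add(t)
            diff.append(r)

    # Step 2: left join on the ID columns (this sketch assumes IDs are unique in rows2)
    lookup = {id_of(r): r for r in rows2}

    # Step 3: keep ID values; for other columns, True if the value matches rows2
    result = []
    for r in diff:
        match = lookup.get(id_of(r))
        result.append({
            c: r[c] if c in id_columns else (match is not None and r[c] == match[c])
            for c in cols
        })
    return result


rows1 = [
    {"ID": 1, "name": "a", "age": 30},
    {"ID": 2, "name": "b", "age": 40},
    {"ID": 3, "name": "c", "age": None},
    {"ID": 4, "name": "d", "age": 50},
]
rows2 = [
    {"ID": 1, "name": "a", "age": 30},
    {"ID": 2, "name": "b", "age": 41},
    {"ID": 3, "name": "x", "age": None},
]
for row in find_difference_py(rows1, rows2, ["ID"]):
    print(row)
# {'ID': 2, 'name': True, 'age': False}
# {'ID': 3, 'name': False, 'age': True}
# {'ID': 4, 'name': False, 'age': False}
```

Row 1 is identical in both tables, so step 1 drops it before any column comparison happens.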

6 REPLIES


12-20-2021 02:55 AM

Hi @Databricks POC! My name is Kaniz, and I'm the technical moderator here. Great to meet you, and thanks for your question! Let's see if your peers in the community have an answer first. Otherwise, I will get back to you soon. Thanks.

Anonymous


12-20-2021 11:26 AM

Are you trying to do change data capture between an older and newer version of the same table?


12-21-2021 12:59 AM

No, I want to compare two tables, the same scenario as a MINUS/EXCEPT query. However, apart from the mismatched rows, I also want to know which columns cause the difference.
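For reference, PySpark's `DataFrame.subtract` is documented as equivalent to `EXCEPT DISTINCT` in SQL, while `DataFrame.exceptAll` is equivalent to `EXCEPT ALL` and keeps duplicate rows. The plain-Python sketch below illustrates that difference on rows represented as tuples; `except_distinct` and `except_all` are hypothetical helper names, not Spark APIs.

```python
from collections import Counter


def except_distinct(left, right):
    """Rows of `left` that never appear in `right`, duplicates removed (like EXCEPT DISTINCT)."""
    right_set = set(right)
    seen, result = set(), []
    for row in left:
        if row not in right_set and row not in seen:
            seen.add(row)
            result.append(row)
    return result


def except_all(left, right):
    """Rows of `left` minus rows of `right`, respecting multiplicity (like EXCEPT ALL)."""
    # Counter subtraction keeps only positive counts, in first-seen order of `left`
    remaining = Counter(left) - Counter(right)
    return list(remaining.elements())


left = [(1, "a"), (1, "a"), (2, "b"), (2, "b")]
right = [(1, "a")]
print(except_distinct(left, right))  # [(2, 'b')]
print(except_all(left, right))       # [(1, 'a'), (2, 'b'), (2, 'b')]
```

A `subtract` call therefore drops duplicate mismatched rows; `exceptAll` would keep them.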


12-26-2023 10:32 AM

How can I print only the columns that changed, instead of displaying all columns?

For every row, I just want to print the primary key (ID) and a comma-separated list of only the column names that changed in the second DataFrame.
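A minimal plain-Python sketch of that output, assuming each table is available as a dict mapping the ID to its column values (the helper `changed_columns` and the sample data are hypothetical). It compares only IDs present in both tables and skips rows where nothing changed. In PySpark, the same idea would typically be written on the joined DataFrame as `F.concat_ws(",", *[F.when(<column differs>, F.lit(c)) for c in cols])`, since `F.when` without `otherwise` yields NULL and `concat_ws` skips NULLs.

```python
def changed_columns(old, new, columns):
    """For each ID present in both tables, return (ID, "col1,col2") listing the changed columns.

    `old` and `new` map an ID to a dict of column values (a hypothetical layout).
    IDs whose values are all unchanged are skipped.
    """
    result = []
    for row_id in sorted(old.keys() & new.keys()):
        changed = [c for c in columns if old[row_id].get(c) != new[row_id].get(c)]
        if changed:
            result.append((row_id, ",".join(changed)))
    return result


old = {
    1: {"name": "a", "age": 30, "city": "X"},
    2: {"name": "b", "age": 40, "city": "Y"},
    3: {"name": "c", "age": 50, "city": "Z"},
}
new = {
    1: {"name": "a", "age": 31, "city": "W"},
    2: {"name": "b", "age": 40, "city": "Y"},
    3: {"name": "q", "age": 50, "city": "Z"},
}
for row_id, cols in changed_columns(old, new, ["name", "age", "city"]):
    print(row_id, cols)
# 1 age,city
# 3 name
```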


04-28-2022 01:53 AM

@vinita shinde, have you cracked this?
