- How to access databricks feature store outside dat...

01-04-2022 09:21 PM

We are building a feature store using the Databricks API. A few of our machine learning engineers use Jupyter notebooks. Is it possible to use the Feature Store outside Databricks?

Labels: databricks, Feature Store

1 ACCEPTED SOLUTION

05-10-2022 04:49 AM

Hi @Ariel Cedola, I repeat: the Databricks Feature Store library is available only on Databricks Runtime for Machine Learning and is accessible through notebooks and jobs.

14 REPLIES

01-04-2022 11:26 PM

Hi @Nithin! My name is Kaniz, and I'm the technical moderator here. Great to meet you, and thanks for your question! Let's see if your peers in the community have an answer first. Otherwise, I will get back to you soon. Thanks.

01-10-2022 07:14 AM

Hi @Nithin Anil, the Databricks Feature Store library is available only on Databricks Runtime for Machine Learning and is accessible through notebooks and jobs.
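For code that is shared between a Databricks notebook and a local Jupyter notebook, one option is to guard the import so the local run degrades gracefully instead of crashing. This is a minimal sketch, assuming only the `databricks.feature_store` package name used in this thread; the helper names `feature_store_available` and `get_feature_store_client` are hypothetical.

```python
import importlib.util


def feature_store_available() -> bool:
    """Return True if the databricks.feature_store package is importable here."""
    try:
        return importlib.util.find_spec("databricks.feature_store") is not None
    except ImportError:
        # Raised when the parent "databricks" package is missing or broken.
        return False


def get_feature_store_client():
    """Return a FeatureStoreClient where the library exists, otherwise None."""
    if not feature_store_available():
        # Typical for a plain local Jupyter environment.
        return None
    # Typical for Databricks Runtime for Machine Learning.
    from databricks.feature_store import FeatureStoreClient
    return FeatureStoreClient()


if not feature_store_available():
    print("Feature Store library not found; run this code on Databricks Runtime ML.")
```

Callers can then check for `None` and skip or stub the Feature Store steps when working locally.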

01-11-2022 08:25 PM

Thanks true.

01-11-2022 09:46 PM

I see a Databricks plugin in Visual Studio. Is that of any use? I am interested because if I can work in Visual Studio, I will be able to use GitHub Copilot there. That would be amazing.

01-31-2022 12:42 AM

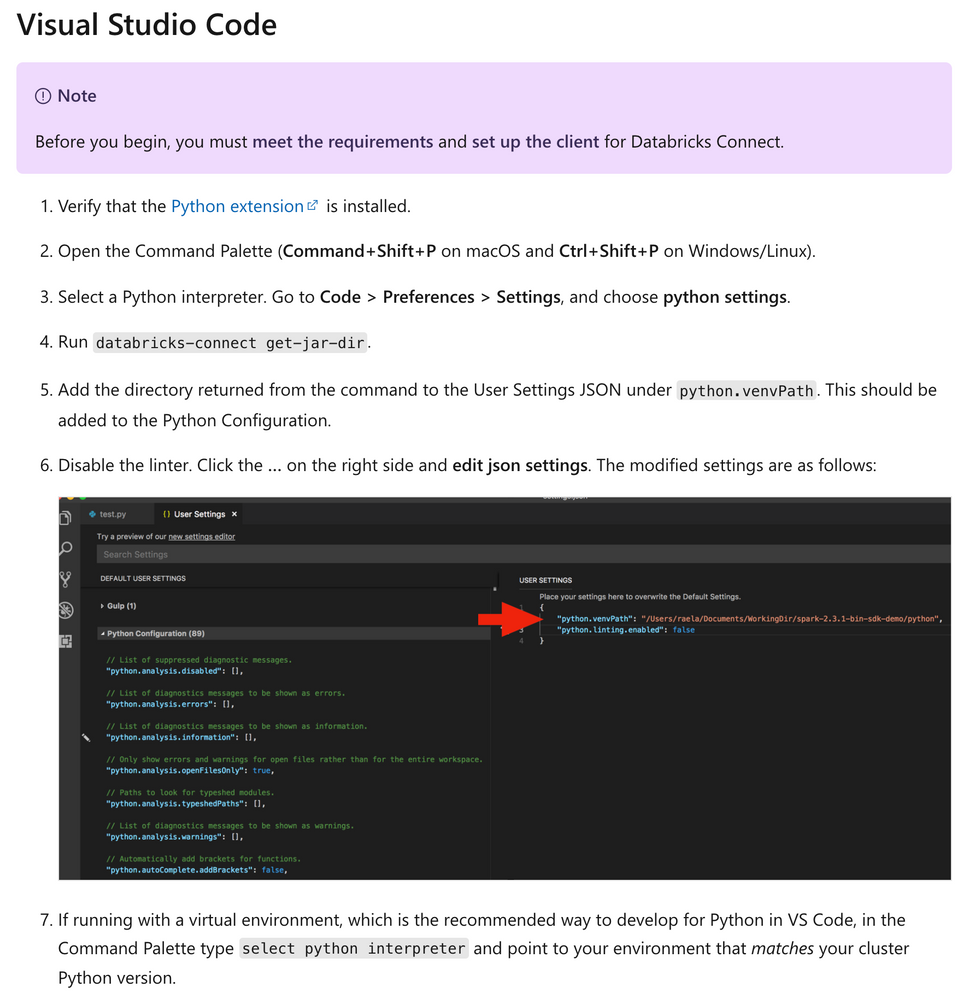

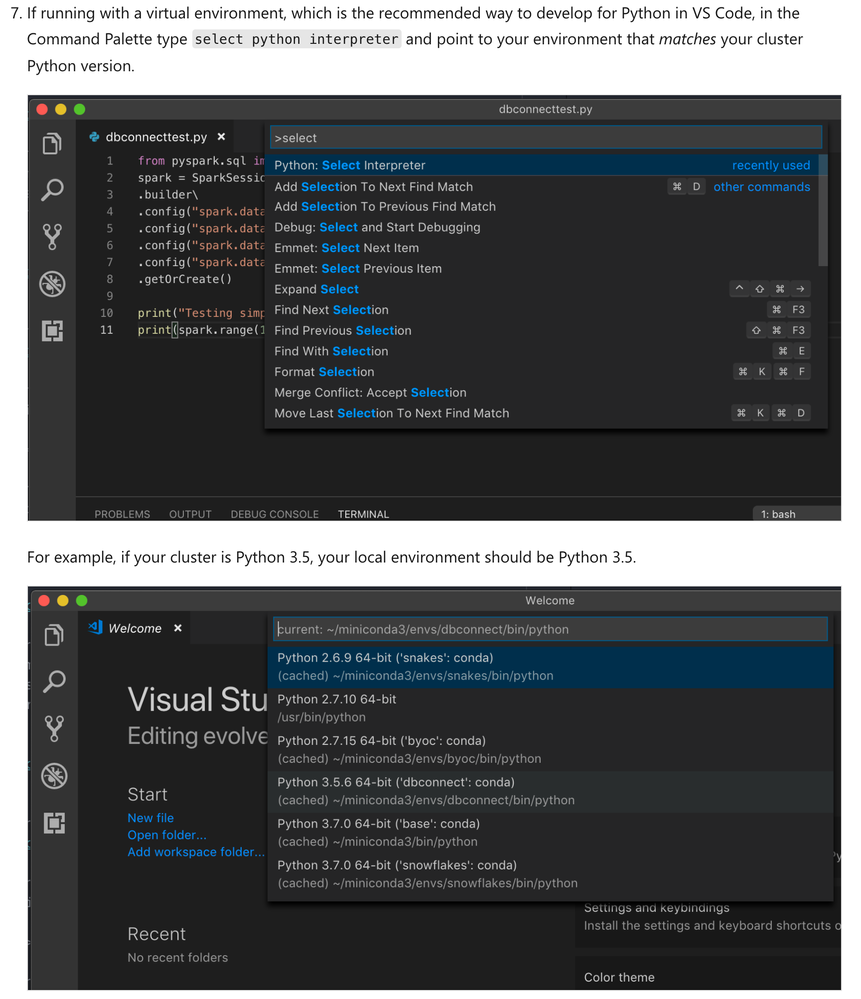

Hi @CHANDAN NANDY, Databricks Connect allows you to connect your favourite IDE (Eclipse, IntelliJ, PyCharm, RStudio, Visual Studio Code), notebook server (Jupyter Notebook, Zeppelin), and other custom applications to Azure Databricks clusters.

The Databricks Connect documentation explains how it works, walks you through the steps to get started, explains how to troubleshoot issues that may arise, and describes the differences between running with Databricks Connect and running in an Azure Databricks notebook.

03-08-2022 10:08 PM

from databricks import feature_store

from databricks.feature_store import FeatureStoreClient, feature_table

We use these imports in Databricks notebooks and are trying to replicate the same in PyCharm, but it doesn't seem to work. Some say you can patch the code using the link below:

Tips and Tricks for using Python with Databricks Connect - DEV Community

What is your suggestion on this? Also, your response above says the library is accessible through jobs. What does that mean? Did you mean triggering it through a job (ADF), with the Databricks notebook's code running on a Databricks cluster? What about running a job from an IDE?

Please explain.

04-12-2022 12:43 AM

Hi @akanksha pandya,

Note:

Databricks recommends that you use dbx by Databricks Labs for local development instead of Databricks Connect. Databricks plans no new feature development for Databricks Connect at this time. Also, be aware of the limitations of Databricks Connect.

Before you begin to use Databricks Connect, you must meet the requirements and set up the client for Databricks Connect.

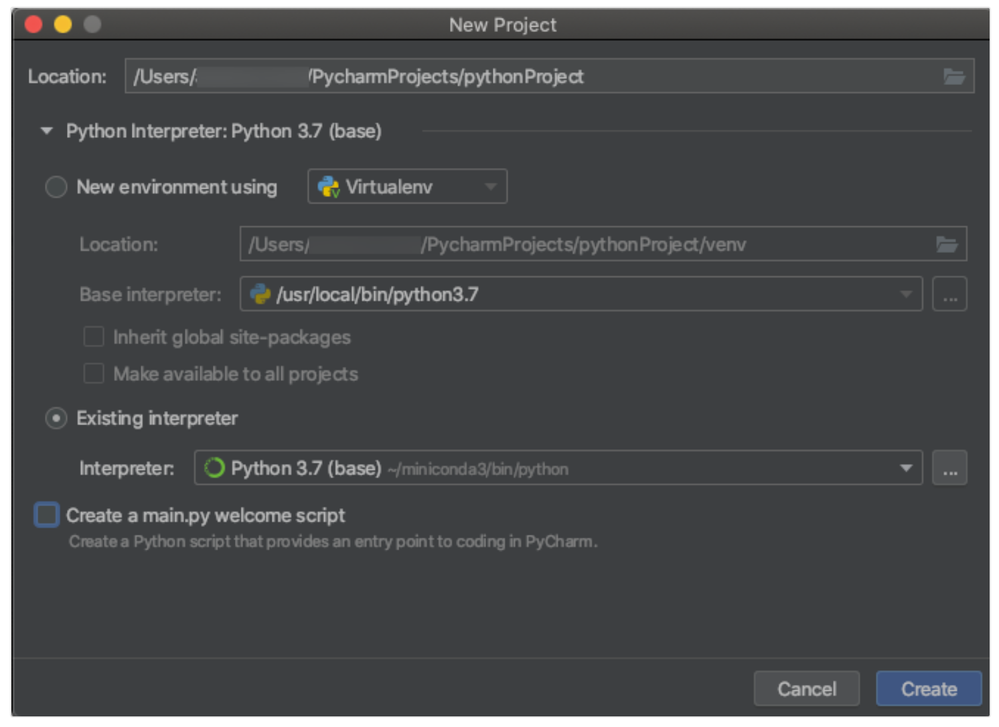

The Databricks Connect configuration script automatically adds the package to your project configuration.

Python 3 clusters

- When you create a PyCharm project, select Existing Interpreter. From the drop-down menu, select the Conda environment you created (see Requirements).

- Go to Run > Edit Configurations.

- Add PYSPARK_PYTHON=python3 as an environment variable.
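On the question of running a job from an IDE: one option is to define the job in the workspace and trigger it from local code through the Databricks Jobs REST API, so the Feature Store code still runs on a Databricks cluster. The following is a minimal sketch that only builds the request. The workspace URL, token, and job ID are placeholders, and `build_run_now_request` is a hypothetical helper, not part of any Databricks library.

```python
import json
import urllib.request


def build_run_now_request(host: str, token: str, job_id: int) -> urllib.request.Request:
    """Build a POST request for the Jobs API 2.1 run-now endpoint."""
    body = json.dumps({"job_id": job_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.1/jobs/run-now",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration only; substitute your own workspace details.
request = build_run_now_request(
    "https://example.cloud.databricks.com", "<personal-access-token>", 123
)
print(request.get_method(), request.full_url)

# To actually trigger the run, send the request:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

The job itself (for example, a notebook task on a Databricks Runtime ML cluster) has to exist in the workspace beforehand; the local code only starts it.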

04-25-2022 03:02 AM

Hi @akanksha pandya, did you find a solution to your question, or do you still need help?

03-21-2022 10:02 AM

@Kaniz Fatma I have a question regarding accessing feature tables created in Databricks via DBFS.

I see that the data has to reside in DBFS for the Feature Store to read it and run on top of it. In my environment, DBFS is locked for security reasons. Is there a workaround or another way to write and read the feature table, perhaps from an Azure Blob container?

04-12-2022 12:38 AM

Hi @Uc A, please go through the doc. It might be helpful to you. Please let me know if it helps.

04-25-2022 11:54 AM

Hi @Uc A,

Just a friendly follow-up. Do you still need help, or did @Kaniz Fatma's response help you find the solution? Please let us know.

05-18-2022 08:17 AM

still need help

04-28-2022 08:58 AM

Hi @Kaniz Fatma and @Jose Gonzalez,

Returning to the original question, and considering that one of the main benefits of the Feature Store is the removal of online/offline skew, how could I access the features from a client application external to Databricks, such as a web app? Is there any way?

Thanks!
