Messages from Event Hubs stop flowing after a while

02-01-2022 03:01 AM

Hi Team,

I'm trying to build a real-time solution using Databricks and Event Hubs.

Something weird happens some time after the process starts.

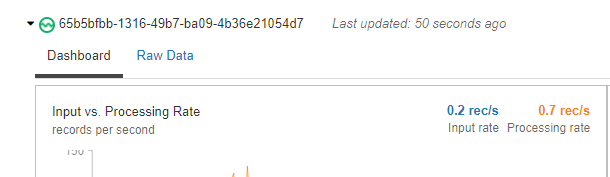

At the beginning, the messages flow through the process as expected, at this rate:

Please note that the last updated time is 50 seconds.

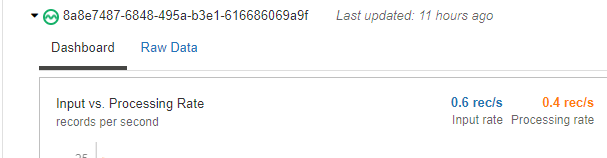

However, after a while, the messages stop flowing:

If I restart the job, the messages flow again as expected (even recovering the messages that were not processed in the last 11 hours, in this case).

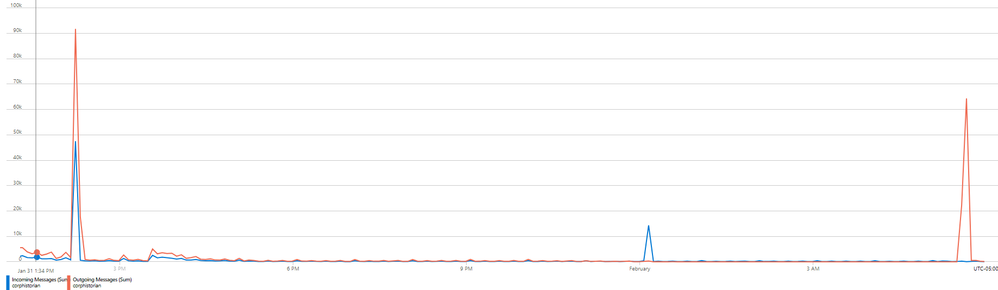

This is a graph showing an example of the issue:

Any idea what could be happening?

1 ACCEPTED SOLUTION

02-01-2022 07:01 AM

- Please check that .option("checkpointLocation", "/mnt/your_storage/") is specified for Structured Streaming.

- It can also depend on what is then done with the stream (writeStream).

- The connection to Event Hubs is quite straightforward. Please also verify the flow on the Azure side, where you can see streaming messages in real time (go to Entities, select the Event Hub, under "Features" select "Process data", then "Explore", then "Create").
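For reference, here is a minimal sketch of the checkpoint suggestion above. It assumes a Delta sink in append mode, and the helper names (`checkpoint_path_for`, `start_checkpointed_write`) and the query name are made up for illustration; the thread does not say which sink the job really uses.

```python
from typing import Any


def checkpoint_path_for(base_dir: str, query_name: str) -> str:
    """Return a checkpoint directory for one query under base_dir."""
    # Each streaming query needs its own checkpoint directory.
    return f"{base_dir.rstrip('/')}/{query_name}"


def start_checkpointed_write(stream_df: Any, base_dir: str,
                             query_name: str, output_path: str) -> Any:
    """Start a streaming write whose progress is tracked in a checkpoint.

    stream_df is expected to be a streaming DataFrame. The Delta format and
    append mode are assumptions; swap in the sink the job really uses.
    """
    checkpoint = checkpoint_path_for(base_dir, query_name)
    return (
        stream_df.writeStream
        .queryName(query_name)
        .format("delta")
        .outputMode("append")
        .option("checkpointLocation", checkpoint)
        .start(output_path)
    )
```

The checkpoint is where Structured Streaming records how far the query has read, which is what lets a restarted job pick up the events it has not processed yet.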

6 REPLIES

02-01-2022 07:18 AM

Thanks for your answer, @Hubert Dudek.

- It is already specified.

- What do you mean by this?

- This is the weird part: the data flows fine, but at some point it is as if the job stops reading, and if I restart the job, everything continues working well.

02-01-2022 10:38 AM

I mean that you read the stream for some purpose, usually to transform it and write it somewhere. So the problem may be in the writing part rather than the reading part.
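One way to check which side has gone quiet is to look at the progress reports of the running query. The sketch below counts how many of the latest reports read zero rows. It assumes each entry of `StreamingQuery.recentProgress` behaves like a dict with a `numInputRows` key, as in PySpark 3.x; the function name is hypothetical.

```python
from typing import Any, Mapping, Sequence


def trailing_idle_batches(recent_progress: Sequence[Mapping[str, Any]]) -> int:
    """Count how many of the most recent progress entries read zero rows."""
    idle = 0
    # Walk backwards from the newest entry until a batch with input is found.
    for progress in reversed(recent_progress):
        if progress.get("numInputRows", 0) > 0:
            break
        idle += 1
    return idle
```

With a running query this would be called as `trailing_idle_batches(query.recentProgress)`. If the count keeps growing while the Azure side shows events arriving, the reading side deserves a closer look; `query.status` and `query.exception()` show whether the query is still active or has failed.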

02-01-2022 11:52 AM

I'm assuming that the issue is not in the writing part, because the DB does not show any kind of blockers or conflicts.

02-08-2022 05:14 PM

Hi @Jhonatan Reyes,

Do you control/limit the maximum number of events processed per trigger from your Event Hubs? Check "maxEventsPerTrigger". What is your trigger interval? Also, how many partitions are you reading from? What is your sink?
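To make the questions above concrete, here is a rough sketch of where these settings usually live. It assumes the job uses the open-source azure-event-hubs-spark connector (source format "eventhubs"), which the thread does not confirm. The helper names and the default limit of 10000 are placeholders, not recommendations.

```python
from typing import Any, Dict


def eventhubs_source_options(encrypted_connection_string: str,
                             consumer_group: str = "$Default",
                             max_events_per_trigger: int = 10000) -> Dict[str, str]:
    """Build the source options, including the per-trigger rate limit.

    The connection string is expected to be already encrypted, which recent
    connector versions require (assumption: done elsewhere in the job).
    """
    return {
        "eventhubs.connectionString": encrypted_connection_string,
        "eventhubs.consumerGroup": consumer_group,
        # Upper bound on events pulled into one micro-batch.
        "maxEventsPerTrigger": str(max_events_per_trigger),
    }


def read_eventhubs(spark: Any, options: Dict[str, str]) -> Any:
    """Open the stream; spark is the active SparkSession on the cluster."""
    return spark.readStream.format("eventhubs").options(**options).load()
```

The trigger interval is set on the write side, for example with `.trigger(processingTime="30 seconds")` on `writeStream`; without it, Spark starts the next micro-batch as soon as the previous one finishes.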

02-16-2022 11:49 AM

@Jhonatan Reyes

Do you still need help with this, or has the issue been mitigated/solved?
