Correct setup and format for calling REST API for ...
02-07-2022 08:28 AM

I trained a basic image classification model on MNIST using Tensorflow, logging the experiment run with MLflow.

```
Model: "my_sequential"
_________________________________________________________________
 Layer (type)                  Output Shape          Param #
=================================================================
 reshape (Reshape)             (None, 28, 28, 1)     0
 conv2d (Conv2D)               (None, 26, 26, 32)    320
 max_pooling2d (MaxPooling2D)  (None, 13, 13, 32)    0
 flatten (Flatten)             (None, 5408)          0
 dense (Dense)                 (None, 100)           540900
 dense_1 (Dense)               (None, 10)            1010
=================================================================
Total params: 542,230
Trainable params: 542,230
Non-trainable params: 0
_________________________________________________________________
```

```python
with mlflow.start_run() as run:
    run_id = run.info.run_id
    mlflow.tensorflow.autolog()
    model.fit(trainX, trainY,
              validation_data=(testX, testY),
              epochs=2,
              batch_size=64)
```

I then registered the model and enabled model serving.

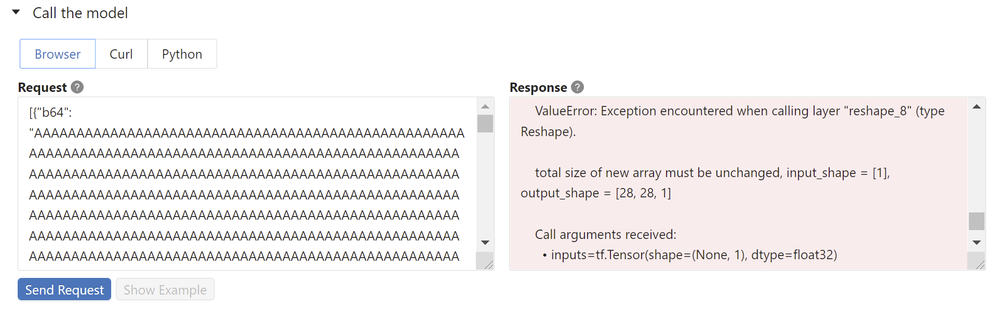

When trying to send the JSON text through the browser in the form

```
[{"b64": "AA...AA=="}]
```

I'm getting errors like the following:

```
BAD_REQUEST: Encountered an unexpected error while evaluating the model. Verify that the serialized input Dataframe is compatible with the model for inference.
Traceback (most recent call last):
  File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/mlflow/pyfunc/scoring_server/__init__.py", line 306, in transformation
    raw_predictions = model.predict(data)
  File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/mlflow/pyfunc/__init__.py", line 605, in predict
    return self._model_impl.predict(data)
  File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/mlflow/keras.py", line 475, in predict
    predicted = _predict(data)
  File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/mlflow/keras.py", line 462, in _predict
    predicted = pd.DataFrame(self.keras_model.predict(data.values))
  File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/keras/utils/traceback_utils.py", line 67, in error_handler
    raise e.with_traceback(filtered_tb) from None
  File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/tensorflow/python/framework/func_graph.py", line 1147, in autograph_handler
    raise e.ag_error_metadata.to_exception(e)
ValueError: in user code:

    File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/keras/engine/training.py", line 1801, in predict_function *
        return step_function(self, iterator)
    File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/keras/engine/training.py", line 1790, in step_function **
        outputs = model.distribute_strategy.run(run_step, args=(data,))
    File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/keras/engine/training.py", line 1783, in run_step **
        outputs = model.predict_step(data)
    File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/keras/engine/training.py", line 1751, in predict_step
        return self(x, training=False)
    File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/keras/utils/traceback_utils.py", line 67, in error_handler
        raise e.with_traceback(filtered_tb) from None
    File "/databricks/conda/envs/model-10/lib/python3.8/site-packages/keras/layers/core/reshape.py", line 110, in _fix_unknown_dimension
        raise ValueError(msg)

    ValueError: Exception encountered when calling layer "reshape" (type Reshape).

    total size of new array must be unchanged, input_shape = [1], output_shape = [28, 28, 1]

    Call arguments received:
      • inputs=tf.Tensor(shape=(None, 1), dtype=float32)
```

If I have an image with shape (28,28,1), called img, I am converting it to the required format like this:

```python
image_data = base64.b64encode(img)
json = {"b64": image_data.decode()}
```

My question has two parts:

- How do I adjust my model to handle the b64 encoded string and convert it back to a 28x28 image first?

- What is the exact JSON format I need to send the image data to the REST endpoint?


1 ACCEPTED SOLUTION


03-15-2022 09:40 PM

@Anthony Gibbons, this GitHub issue may help with your use case: https://github.com/mlflow/mlflow/issues/1661

5 REPLIES


02-07-2022 10:41 AM

Hi @Anthony Gibbons ! My name is Kaniz, and I'm the technical moderator here. Great to meet you, and thanks for your question! Let's see if your peers in the community have an answer to your question first. Or else I will get back to you soon. Thanks.


02-17-2022 02:35 AM

Hi @Anthony Gibbons, try converting your input to base64:

```python
import base64

to_predict = test_images[0]
inputs = base64.b64encode(to_predict)
```

Then convert it to a DataFrame and send a request. At the backend, decode it back to the original with:

```python
np.frombuffer(base64.b64decode(encoded), np.uint8)
```
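The encode and decode steps in this reply can be checked as a round trip. The following is only a minimal sketch: the random array stands in for `test_images[0]`, and the `uint8` dtype and `(28, 28, 1)` shape are assumptions taken from the thread, not something the serving endpoint reports.

```python
import base64

import numpy as np

# Stand-in for one MNIST test image; the uint8 dtype is an assumption.
rng = np.random.default_rng(0)
to_predict = rng.integers(0, 256, size=(28, 28, 1), dtype=np.uint8)

# Client side: raw bytes -> base64 text that can sit inside a JSON string.
encoded = base64.b64encode(to_predict.tobytes()).decode("ascii")

# Backend side: base64 text -> flat uint8 array -> original image shape.
flat = np.frombuffer(base64.b64decode(encoded), dtype=np.uint8)
decoded = flat.reshape(28, 28, 1)

assert flat.size == 28 * 28
assert np.array_equal(decoded, to_predict)
```

Base64 carries only the bytes, so the decoder has to know the dtype and shape in advance. If the image were stored as `float32`, decoding with `np.uint8` would yield four times as many elements and the reshape would fail.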


02-18-2022 04:57 AM

Hi @Kaniz Fatma ,

Thanks for your answer!

My question is about this backend. You mean putting this line inside the predict() method?

When I'm defining a sequential model in TensorFlow, how do I incorporate what I want it to do to the input from a request?
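One possible way to run code on the request input before the Sequential model sees it is to wrap the model in a custom MLflow Python model, subclassing `mlflow.pyfunc.PythonModel` and decoding inside `predict()`. The sketch below is an illustration under assumptions, not a confirmed fix for this thread: `B64ImageModel` and `StubKerasModel` are hypothetical names, the input is assumed to arrive with a `b64` column of base64 strings holding `uint8` pixels, and no pixel scaling is applied.

```python
import base64

import numpy as np

try:
    from mlflow.pyfunc import PythonModel  # MLflow base class for custom models
except ImportError:  # keeps the sketch runnable where MLflow is not installed
    PythonModel = object


class B64ImageModel(PythonModel):
    """Hypothetical wrapper: decode base64 images, then call the Keras model."""

    def __init__(self, keras_model):
        self.keras_model = keras_model

    def predict(self, context, model_input):
        # Assumption: model_input has a "b64" column of base64-encoded
        # uint8 images, each 28 * 28 * 1 bytes long.
        images = [
            np.frombuffer(base64.b64decode(s), dtype=np.uint8).reshape(28, 28, 1)
            for s in model_input["b64"]
        ]
        batch = np.stack(images).astype("float32")
        return self.keras_model.predict(batch)


class StubKerasModel:
    """Hypothetical stand-in for the trained model, used only for this demo."""

    def predict(self, batch):
        return np.zeros((len(batch), 10))


# Demo: a dict of lists is indexed by column name just like a DataFrame.
img = np.zeros((28, 28, 1), dtype=np.uint8)
payload = {"b64": [base64.b64encode(img.tobytes()).decode("ascii")]}
preds = B64ImageModel(StubKerasModel()).predict(None, payload)
assert preds.shape == (1, 10)
```

Passing the model into the constructor keeps the example short. In a real deployment the Keras model would more likely be loaded from a logged artifact in `load_context()`, since pickling a live Keras model together with the wrapper may not work.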


02-18-2022 06:32 AM

Hi @Anthony Gibbons , This link might help you as well.

