Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- Error writing a partitioned Delta Table from a mul...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-14-2022 07:55 AM

I have a notebook that writes a delta table with a statement similar to the following:

match = "current.country = updates.country and current.process_date = updates.process_date"

deltaTable = DeltaTable.forPath(spark, silver_path)

deltaTable.alias("current")\

.merge(

data.alias("updates"),

match) \

.whenMatchedUpdate(

set = update_set,

condition = condition) \

.whenNotMatchedInsert(values = values_set)\

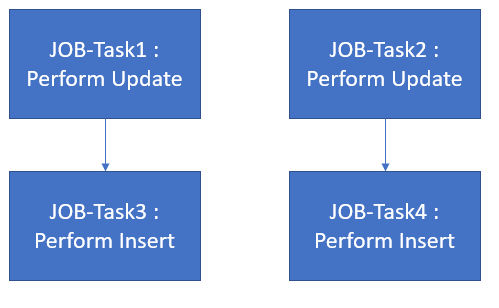

.execute()The multitask job has two tasks that are executed in parallel.

ConcurrentAppendException: Files were added to partition [country=Panamá, process_date=2022-01-01 00:00:00] by a concurrent update. Please try the operation again.In each task I send different countries (Panama, Ecuador) and the same date as a parameter, so when executing only the information corresponding to the country sent should be written. This delta table is partitioned by the country and process_date fields. Any ideas what I'm doing wrong? How should I specify the partition to be affected when using the "merge" statement?

I appreciate if you can clarify how I should work with the partitions in these cases, since this is new to me.

Update: I made an adjustment in the condition to specify the country and process date according to what is indicated here (ConcurrentAppendException). Now I get the following error message:

ConcurrentAppendException: Files were added to the root of the table by a concurrent update. Please try the operation again.I can't think what could cause the error. Keep investigating.

Labels:

- Labels:

-

Delta

-

Delta table

-

Spark

1 ACCEPTED SOLUTION

Accepted Solutions

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-16-2022 11:20 AM

Initially, the affected table only had a date field as partition. So I partitioned it with country and date fields. This new partition created the country and date directories however the old directories of the date partition remained and were not deleted.

Apparently these directories were causing the conflict when trying to read them concurrently. I created a new delta on another path with the correct partitions and then replaced it on the original path. This allowed old partition directories to be removed.

The only consequence of performing these actions was that I lost the change history of the table (time travel).

3 REPLIES 3

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-16-2022 10:18 AM

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-16-2022 11:20 AM

Initially, the affected table only had a date field as partition. So I partitioned it with country and date fields. This new partition created the country and date directories however the old directories of the date partition remained and were not deleted.

Apparently these directories were causing the conflict when trying to read them concurrently. I created a new delta on another path with the correct partitions and then replaced it on the original path. This allowed old partition directories to be removed.

The only consequence of performing these actions was that I lost the change history of the table (time travel).

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-16-2022 08:12 PM

Hi @Daniel Vera , Thanks for the details. I am not sure about your data, but I hope adding multiple columns in partition does not ended up with small files.

Lets see if databricks provides an option to apply explicit lock on tables for parallel operations.

Announcements

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Pulsar Streaming (Read) - Benchmarking Information in Data Engineering

- Optimizing Delta Live Table Ingestion Performance for Large JSON Datasets in Data Engineering

- How to do perform deep clone for data migration from one Datalake to another? in Data Engineering

- Delta Live Tables consuming different files from the same path are combining the schema in Data Engineering

- Inconsistent Results When Writing to Oracle DB with Spark's dropDuplicates and foreachPartition in Data Engineering