Write Empty Delta file in Datalake

05-17-2022 06:36 AM

hi all,

Currently, i am trying to write an empty delta file in data lake, to do this i am doing the following:

- Reading parquet file from my landing zone ( this file consists only of the schema of SQL tables)

df=spark.read.format('parquet').load(landingZonePath)- After this, i convert this file into the delta

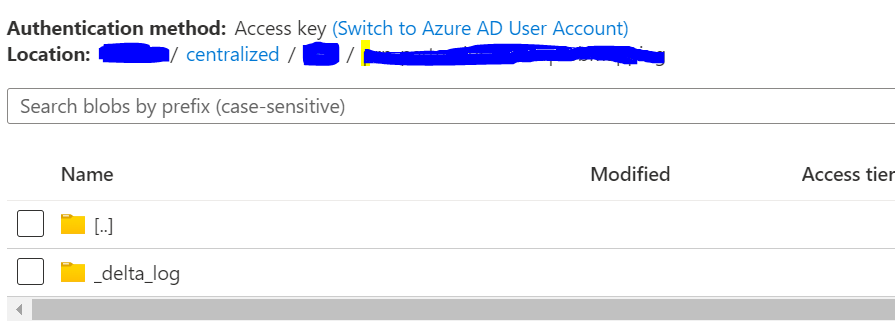

df.write.format("delta").save(centralizedZonePath)- But after checking data lake i see no file

Note: Parquet file in landingzone, has the schema

1 ACCEPTED SOLUTION

06-01-2022 08:20 PM

Hi @bhagya s, since your source file is empty, no data file is written to the centralizedZonePath directory, i.e. no .parquet file is created in the target location. However, the _delta_log directory is still created. It is the transaction log, which holds the metadata of the Delta table, including the table schema.

You can learn more about the transaction log here: https://databricks.com/discover/diving-into-delta-lake-talks/unpacking-transaction-log
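To check this yourself, here is a minimal sketch that reads the schema from the JSON commit files in _delta_log and lists any .parquet data files. It rests on several assumptions: the table path is reachable as a local directory (for example through a mount), the helper names `read_schema_from_delta_log` and `list_data_files` are made up for illustration, and checkpoint files are ignored, so it is only suitable for small or freshly written tables. The demo at the bottom builds a hypothetical stand-in table in a temporary directory rather than touching a real data lake.

```python
import json
import os
import re
import tempfile

# Delta commit files are named with a 20-digit, zero-padded version number.
COMMIT_FILE = re.compile(r"^\d{20}\.json$")


def read_schema_from_delta_log(table_path):
    """Return the latest schema found in the JSON commits, or None if there is none."""
    log_dir = os.path.join(table_path, "_delta_log")
    schema = None
    # Zero-padded names sort lexically in version order, so later commits win.
    for name in sorted(n for n in os.listdir(log_dir) if COMMIT_FILE.match(n)):
        with open(os.path.join(log_dir, name), encoding="utf-8") as fh:
            for line in fh:
                if not line.strip():
                    continue
                action = json.loads(line)  # one JSON action per line
                if "metaData" in action:
                    # schemaString is itself a JSON-encoded struct type.
                    schema = json.loads(action["metaData"]["schemaString"])
    return schema


def list_data_files(table_path):
    """Return all .parquet files under the table path, skipping _delta_log."""
    found = []
    for root, dirs, files in os.walk(table_path):
        dirs[:] = [d for d in dirs if d != "_delta_log"]
        found.extend(os.path.join(root, f) for f in files if f.endswith(".parquet"))
    return sorted(found)


if __name__ == "__main__":
    # Hypothetical stand-in for an empty Delta table: a log with one commit, no data files.
    with tempfile.TemporaryDirectory() as table_path:
        os.makedirs(os.path.join(table_path, "_delta_log"))
        schema = {"type": "struct", "fields": [
            {"name": "id", "type": "integer", "nullable": True, "metadata": {}}]}
        commit = os.path.join(table_path, "_delta_log", "0" * 20 + ".json")
        with open(commit, "w", encoding="utf-8") as fh:
            fh.write(json.dumps({"metaData": {"schemaString": json.dumps(schema)}}) + "\n")
        print([f["name"] for f in read_schema_from_delta_log(table_path)["fields"]])
        print(list_data_files(table_path))
```

On a cluster, reading the table back with `spark.read.format("delta").load(centralizedZonePath).printSchema()` is the simpler check and should show the same schema with zero rows.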

4 REPLIES

05-17-2022 09:52 AM

@bhagya s, the file schema is in _delta_log.

05-18-2022 09:50 PM

Hi @bhagya s, just a friendly follow-up. Do you still need help, or did @Hubert Dudek (Customer)'s response help you find the solution? Please let us know.

06-14-2022 09:23 AM

Hi @bhagya s, we haven't heard from you since the last response from @Noopur Nigam, and I am checking back to see whether you have a resolution yet. If you have a solution, please share it with the community, as it can be helpful to others. Otherwise, we will respond with more details and try to help.
