Failing to install a library from dbfs mounted storage (adls2) with pass through credentials cluster

05-22-2022 01:33 PM

We've set up a premium workspace with a credential passthrough cluster. The cluster works and can access my ADLS Gen2 storage, but I can't get it to install a library from there. I keep getting:

"Library installation attempted on the driver node of cluster 0522-200212-mib0srv0 and failed. Please refer to the following error message to fix the library or contact Databricks support. Error Code: DRIVER_LIBRARY_INSTALLATION_FAILURE. Error Message: com.google.common.util.concurrent.UncheckedExecutionException: com.databricks.backend.daemon.data.client.adl.AzureCredentialNotFoundException: Could not find ADLS Gen2 Token"

How can I actually install a "cluster-wide" library on these credential passthrough clusters?

(The general ADLS mount uses those credentials to mount the data lake.)

This happens on both standard and high concurrency clusters.

Labels: Credential passthrough, DBFS, Library

14 REPLIES

05-24-2022 01:45 AM

Hi @Alon Nisser , here is a similar issue on Stack Overflow. Please let us know if that helps.

05-24-2022 01:28 PM

Nope @Kaniz Fatma , that's not actually my question. I know how to install a library on a cluster and do it quite a lot. The question is how to install a library stored on the data lake (as a wheel via DBFS) on a credential passthrough cluster.

05-25-2022 01:28 AM

Hi @Alon Nisser , Thank you for the clarification. I might have misinterpreted the question.

05-24-2022 01:29 PM

I currently have a hack: copying the library from the data lake to the root DBFS and installing it from there. But I don't like it.
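
For anyone who wants to script the copy step of this workaround, here is a minimal sketch. It assumes it runs in a notebook where `dbutils` is available and where the current user can read the mounted path. The function name `stage_wheel`, the staging folder `dbfs:/FileStore/staged-libs` and the wheel path in the usage comment are hypothetical and not taken from this thread.

```python
import posixpath


def stage_wheel(fs, source_path: str,
                staging_dir: str = "dbfs:/FileStore/staged-libs") -> str:
    """Copy a wheel from a mounted location to root DBFS.

    `fs` is expected to behave like `dbutils.fs` (it needs `mkdirs` and `cp`).
    `staging_dir` is a hypothetical folder name; pick whatever suits you.
    Returns the path of the staged copy.
    """
    # Keep the original file name so the wheel stays installable.
    target_path = posixpath.join(staging_dir, posixpath.basename(source_path))
    fs.mkdirs(staging_dir)
    fs.cp(source_path, target_path)
    return target_path


# Usage inside a Databricks notebook (hypothetical mount and file name):
# staged = stage_wheel(dbutils.fs, "dbfs:/mnt/datalake/libs/my_pkg-0.1.0-py3-none-any.whl")
```

The file system object is passed in as a parameter so that the function can also be exercised outside a notebook with a stand-in object.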

05-25-2022 04:14 AM

Hi @Alon Nisser , I'm glad you got a hack for the time being, and thank you for sharing it on our platform. Can you tell us the reason for your dissatisfaction with it?

05-25-2022 06:34 AM

Hi @Kaniz Fatma , I am facing the same issue too. I am trying to use a job cluster with credential passthrough enabled to deploy a job, but library installation fails with the same exception:

"Message: com.google.common.util.concurrent.UncheckedExecutionException: com.databricks.backend.daemon.data.client.adl.AzureCredentialNotFoundException: Could not find ADLS Gen2 Token"

Where should I add the token? Or am I missing something?

05-25-2022 01:29 PM

@Nancy Gupta , as far as I can trace this issue, the token isn't set up yet when the cluster is starting. I assume it does work with passthrough credentials once the cluster has started normally?

My hack was to copy the library to the root DBFS (I've created a new folder there) using another group; installing from that location does work.
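
If it helps, the install step can also be scripted once the wheel sits on root DBFS. The sketch below only builds the JSON body for the Databricks Libraries API (`POST /api/2.0/libraries/install`). The helper name `build_install_request` and the wheel path in the usage comment are hypothetical; the cluster ID is the one from the error message earlier in this thread.

```python
import json


def build_install_request(cluster_id: str, wheel_paths: list[str]) -> str:
    """Build the JSON body for a Libraries API install call.

    Each wheel is listed as {"whl": "<path>"}; the paths should point at
    the staged copies on root DBFS, not at the passthrough mount.
    """
    payload = {
        "cluster_id": cluster_id,
        "libraries": [{"whl": path} for path in wheel_paths],
    }
    return json.dumps(payload, indent=2)


# Usage (hypothetical staged path):
# body = build_install_request(
#     "0522-200212-mib0srv0",
#     ["dbfs:/FileStore/staged-libs/my_pkg-0.1.0-py3-none-any.whl"],
# )
# Send `body` to <workspace-url>/api/2.0/libraries/install with a bearer token.
```

This is equivalent to picking the staged file in the cluster's Libraries tab. It does not change the fact that the library has to live outside the passthrough mount.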

05-26-2022 02:26 AM

Hi @Nancy Gupta , Were you able to replicate the solution provided by @Alon Nisser ?

05-26-2022 06:28 AM

@Kaniz Fatma , yes, but that is just a workaround, and it would be great if I could get a real solution for this!

Also, any read from ADLS in the job fails with the same error.

05-31-2022 04:08 AM

@Kaniz Fatma , any solutions, please?

05-31-2022 06:16 AM

Hi @Nancy Gupta,

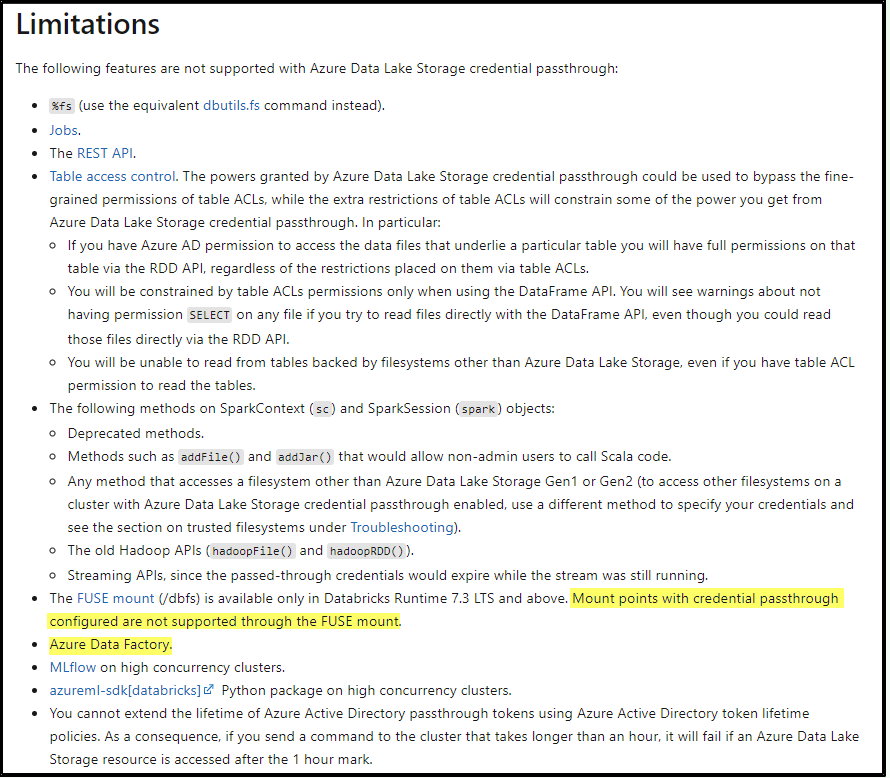

By design, the ADF linked-service access token is not passed through to the notebook activity; this is a known limitation. Instead, use credentials inside the notebook activity or a Key Vault store.

Reference: ADLS using AD credentials passthrough – limitations.

Hope this helps. Do let us know if you have any further queries.
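
One way to read "use credentials inside the notebook" is to authenticate to ADLS Gen2 with a service principal whose secret is kept in a secret scope. The sketch below is an assumption on my part and not something this thread confirms as a fix for the library installation error. It only builds the standard ABFS OAuth settings for one storage account. The helper name `service_principal_conf` and the scope and key names in the usage comment are hypothetical placeholders.

```python
def service_principal_conf(storage_account: str, client_id: str,
                           client_secret: str, tenant_id: str) -> dict:
    """Return Spark/Hadoop settings for OAuth access to one ADLS Gen2 account."""
    suffix = f"{storage_account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{suffix}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{suffix}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{suffix}": client_id,
        f"fs.azure.account.oauth2.client.secret.{suffix}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{suffix}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


# Usage inside a notebook (placeholder names in angle brackets):
# secret = dbutils.secrets.get(scope="<scope-name>", key="<secret-key>")
# conf = service_principal_conf("<storage-account>", "<application-id>", secret, "<tenant-id>")
# for key, value in conf.items():
#     spark.conf.set(key, value)
```

Setting these values per session in the notebook keeps the secret out of the cluster definition. Whether this can coexist with passthrough for your setup is something to verify against the linked limitations page.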

06-14-2022 09:41 AM

Hi @Nancy Gupta , We haven’t heard from you on the last response from me, and I was checking back to see if you have a resolution yet. If you have any solution, please share it with the community as it can be helpful to others. Otherwise, we will respond with more details and try to help.

06-16-2022 03:36 AM

Hello @Alon Nisser @Nancy Gupta

Installing libraries using passthrough credentials is currently not supported.

You need the configs below on the cluster:

fs.azure.account...

We can file a feature request for this.

06-16-2022 11:35 AM

Sorry, I can't figure this out. The link you've added is irrelevant for passthrough credentials: if we add it, the cluster won't be passthrough. Is there a way to add this just for a specific folder while keeping passthrough for the rest?
