Using (python) import on azure databricks

05-29-2022 09:27 PM

Hello,

My team is currently working on Azure Databricks with a mid-sized repo. When we want to import PySpark functions and classes from other notebooks, we currently use

%run <relpath>

which is less than ideal.

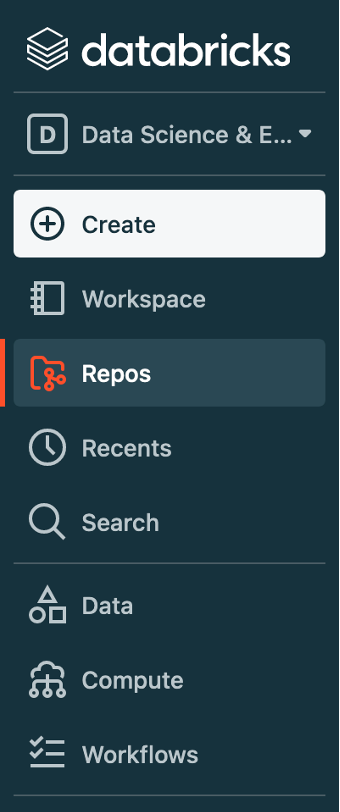

I would like to replicate the functionality of this repo. However, when I clone it into the "repos" (see screenshot) section on my azure databricks instance, it will not work.

The location of the repo is not

/Workspace/Repos/<username>/<repo_name>

The best lock I can get on a location is an adb hyperlink:

https://adb-<workspace_id>.13.azuredatabricks.net/?o=<workspace_id>#folder/<repo_id>

When I print sys.path, I get the following output:

/databricks/python_shell/scripts

/local_disk0/spark-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/userFiles-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

/databricks/spark/python

/databricks/spark/python/lib/py4j-0.10.9.1-src.zip

/databricks/jars/spark--driver--driver-spark_3.2_2.12_deploy.jar

/databricks/jars/spark--maven-trees--ml--10.x--graphframes--org.graphframes--graphframes_2.12--org.graphframes__graphframes_2.12__0.8.2-db1-spark3.2.jar

/databricks/python_shell

/usr/lib/python38.zip

/usr/lib/python3.8

/usr/lib/python3.8/lib-dynload

/databricks/python/lib/python3.8/site-packages

/usr/local/lib/python3.8/dist-packages

/usr/lib/python3/dist-packages

/databricks/.python_edge_libs

/databricks/python/lib/python3.8/site-packages/IPython/extensions

It seems that the second path is where my notebook is being run from, but it is not persistent and the directory is empty.
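For anyone reproducing the listing above, here is a minimal sketch of how it could be printed and checked for a repo folder. Note that sys.path is a plain list, so it is iterated rather than called. The helper name find_repo_entries is hypothetical, and the /Workspace/Repos/ prefix is taken from the path mentioned earlier in this post.

```python
import sys


def find_repo_entries(paths, prefix="/Workspace/Repos/"):
    """Return the entries of `paths` that start with `prefix`."""
    return [p for p in paths if p.startswith(prefix)]


# sys.path is a list, not a function, so iterate over it directly.
for entry in sys.path:
    print(entry)

# Report whether any repo folder is currently importable.
repo_entries = find_repo_entries(sys.path)
print("Repo folders on sys.path:", repo_entries or "none")
```

If no entry under /Workspace/Repos/ shows up, a plain import of a module stored in the repo is unlikely to resolve.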

So, my question is how can I use python's import functionality (as indicated in the linked example documentation) with my repo in adb?

Labels:

- Azure databricks

- Pyspark

- Python

1 REPLY

05-30-2022 07:54 AM

Hi @Sebastian Gay, the Azure Databricks documentation has a section on developing notebooks and jobs using Python. Its first subsection provides links to tutorials for common workflows and tasks. Its second subsection provides links to APIs, libraries, and critical tools.

To work with non-notebook files in Databricks Repos, you must be running Databricks Runtime 8.4 or above.
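As a rough way to check the runtime requirement from a notebook, the sketch below compares the cluster's runtime version against 8.4. It assumes the cluster exposes its version in the DATABRICKS_RUNTIME_VERSION environment variable as a string such as "10.4"; if that variable is absent on your cluster, the check simply reports the version as unknown. The function names are hypothetical.

```python
import os

MIN_RUNTIME = (8, 4)  # minimum runtime stated above for non-notebook files


def parse_runtime_version(raw):
    """Parse a string such as '10.4' into (10, 4); return None if it is not major.minor."""
    parts = raw.split(".")
    try:
        return int(parts[0]), int(parts[1])
    except (IndexError, ValueError):
        return None


def supports_files_in_repos(raw):
    """True if the parsed version is at least MIN_RUNTIME."""
    version = parse_runtime_version(raw)
    return version is not None and version >= MIN_RUNTIME


# Assumption: this environment variable is set on the cluster.
raw = os.environ.get("DATABRICKS_RUNTIME_VERSION", "")
print("Runtime:", raw or "unknown")
print("Supports non-notebook files:", supports_files_in_repos(raw))
```

The cluster configuration page shows the same version, so this is only a convenience for checking from inside a notebook.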

You first need to enable Files in Repos.

An admin can enable the Files in Repos feature as follows:

- Go to the Admin Console.

- Click the Workspace Settings tab.

- In the Repos section, click the Files in Repos toggle.

After the feature has been enabled, you must restart your cluster and refresh your browser before you can use Files in Repos.

Additionally, the first time you access a repo after Files in Repos is enabled, you must open the Git dialog. A dialog indicates that you must perform a pull operation to sync non-notebook files in the repo. Select Agree and Pull to sync files. If there are any merge conflicts, another dialog appears, giving you the option of discarding your conflicting changes or pushing your changes to a new branch.

To confirm that Files in Repos is enabled, run the command

%sh pwd

in a notebook inside a repo.

- If Files in Repos is not enabled, the response is

/databricks/driver

- If Files in Repos is enabled, the response is

/Workspace/Repos/<path to notebook directory>
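The same check can be done from Python, and the result can be used to make the repo importable. This is a minimal sketch, assuming the repo lives at /Workspace/Repos/<username>/<repo_name> as described in the question. The helper names and the utils/helpers.py module in the final comment are hypothetical. On some runtimes the repo root may already be on sys.path, in which case the append is a no-op.

```python
import os
import sys

REPOS_PREFIX = "/Workspace/Repos/"


def repo_root_from_cwd(cwd):
    """Return /Workspace/Repos/<user>/<repo> if cwd is inside a repo, else None."""
    if not cwd.startswith(REPOS_PREFIX):
        return None
    parts = cwd[len(REPOS_PREFIX):].split("/")
    # Both the user folder and the repo folder must be present.
    if len(parts) < 2 or not parts[0] or not parts[1]:
        return None
    return REPOS_PREFIX + parts[0] + "/" + parts[1]


def ensure_on_path(path, paths):
    """Append `path` to the list `paths` if it is missing; return the list."""
    if path not in paths:
        paths.append(path)
    return paths


root = repo_root_from_cwd(os.getcwd())
if root is None:
    print("Not running inside /Workspace/Repos; Files in Repos may be disabled.")
else:
    ensure_on_path(root, sys.path)
    print("Repo root on sys.path:", root)
    # Hypothetical import, assuming the repo contains utils/helpers.py:
    # from utils.helpers import my_function
```

With the repo root on sys.path, modules are imported by their path relative to the repo root, which replaces the %run <relpath> pattern for plain .py files.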
