I'm trying to create a new column that fills in the nulls below. I tried using leads and lags but isn't turning out right. Basically trying to figure out who is in "possession" of the record, given the TransferFrom and TransferTo columns and sequence of events. For instance, 35 was in possession of the record until they transferred it to 57. My current query will only populate 35 in record 2 since it only leads by one record. I need the query to populate all records prior to this as well if 35 is the first value found in the TransferFrom column. Any ideas? (btw, I also tried to use outer apply which works in SQL Server but isn't supported by spark SQL)

Create table script:

CREATE TABLE results (

OrderID int

,TransferFrom string

,TransferTo string

,ActionTime timestamp)

INSERT INTO results

VALUES

(1,null,null,'2020-01-01 00:00:00'),

(1,null,null,'2020-01-02 00:00:00'),

(1,null,null,'2020-01-03 00:00:00'),

(1,'35','57','2020-01-04 00:00:00'),

(1,null,null,'2020-01-05 00:00:00'),

(1,null,null,'2020-01-06 00:00:00'),

(1,'57','45','2020-01-07 00:00:00'),

(1,null,null,'2020-01-08 00:00:00'),

(1,null,null,'2020-01-09 00:00:00'),

(1,null,null,'2020-01-10 00:00:00')

Current query that doesn't work:

SELECT *

,coalesce(

lead(TransferFrom) over (partition by OrderID order by ActionTime)

,TransferFrom

,lag(TransferTo) over (partition by OrderID order by ActionTime)) as NewColumn

FROM results

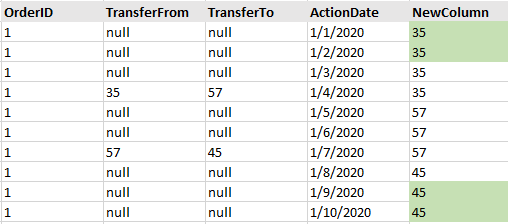

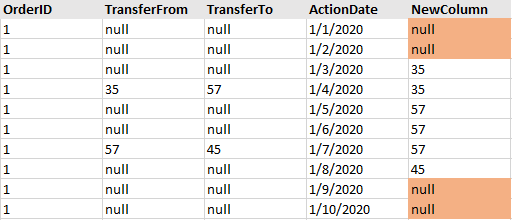

Current query result that is incorrect:

Desired query result: