Databricks cluster starts with docker

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-14-2022 07:09 AM

Hi there!

I hope you are doing well.

I'm trying to start a cluster with a Docker image that installs all the libraries I need.

I have the following Dockerfile, which installs only Python libraries:

```dockerfile
FROM databricksruntime/standard

WORKDIR /app

COPY . .

RUN apt-get update && apt-get install -y python3-pip

RUN sudo apt-get install -y libpq-dev

RUN pip install -r /app/requirements.txt

CMD ["python3"]
```

Does anybody know how to install Maven libraries from this same Dockerfile? I've tried and looked up many solutions, but I can't figure out how to do it.

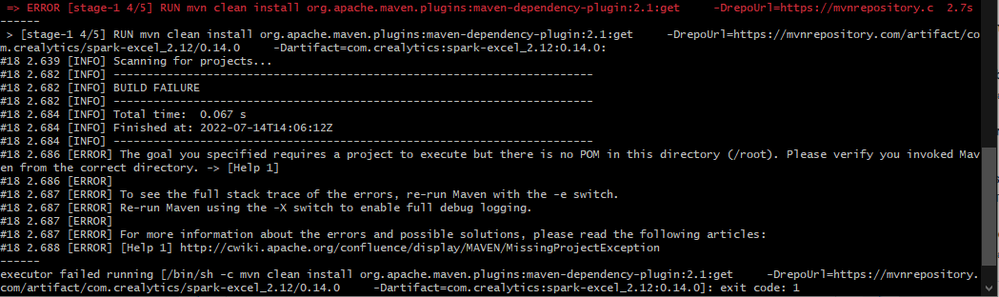

The last thing I tried was a multi-stage build using the Maven image, but I had trouble with the dependencies (missing pom.xml file).

```dockerfile
# MAVEN + PYTHON

FROM databricksruntime/standard

WORKDIR /app

COPY . .

RUN apt-get update && apt-get install -y python3-pip

RUN sudo apt-get install -y libpq-dev

RUN pip install -r /app/requirements.txt

CMD ["python3"]

FROM maven:latest

WORKDIR /root

COPY --from=0 /app .

RUN mvn clean install org.apache.maven.plugins:maven-dependency-plugin:2.1:get \
    -DrepoUrl=https://mvnrepository.com/artifact/com.crealytics/spark-excel_2.12/0.14.0 \
    -Dartifact=com.crealytics:spark-excel_2.12:0.14.0

RUN mvn clean install org.apache.maven.plugins:maven-dependency-plugin:2.1:get \
    -DrepoUrl=https://mvnrepository.com/artifact/mysql/mysql-connector-java \
    -Dartifact=mysql:mysql-connector-java:8.0.29
```

I don't understand how to install Maven libraries from a Dockerfile.

If someone has experience with something like this and could help me, I would appreciate it a lot.

Thanks!


07-15-2022 04:26 AM

Hi @Ignacio Guillamondegui, this is an excellent Stack Overflow discussion on the topic you've raised. Please let us know if it helps.


07-15-2022 01:57 PM

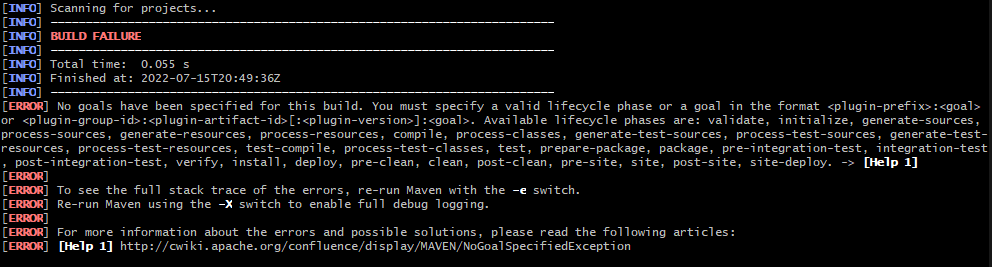

Thanks! I tried some answers from the Stack Overflow discussion, and I could build the image, but I can't run it.

I can build the image using the `dependency:resolve` goal, but I still can't install the libraries: when I try to run it, I get the message in the screenshot above, so the cluster can't start.


07-17-2022 08:34 AM

1) Install your jars in a new layer, not in the same layer as your other libraries.

2) Installing with Maven is more work than building the library into your jar layer directly.
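A minimal Dockerfile sketch of this advice, using the two artifacts from the question. It assumes, as the later post in this thread does, that jars placed in `/databricks/jars` end up on the cluster's classpath and that the runtime's Python lives in `/databricks/python3`. The jars are downloaded straight from Maven Central (`repo1.maven.org/maven2`), whose paths follow the standard `groupId-as-path/artifactId/version/artifactId-version.jar` layout; `mvnrepository.com` is a search site, not a Maven repository, so it can't serve as `-DrepoUrl`. Note also that the last `FROM` decides which image you get: in the multi-stage attempt in the question, the final stage is `maven:latest`, not the Databricks runtime.

```dockerfile
FROM databricksruntime/standard

# OS packages; RUN steps execute as root during the build
# (assuming the base image does not switch USER), so sudo is not needed
RUN apt-get update \
 && apt-get install -y --no-install-recommends curl libpq-dev \
 && rm -rf /var/lib/apt/lists/*

# Jar layer: fetch the exact jars from Maven Central
# (only these two files; their transitive dependencies are NOT downloaded)
RUN mkdir -p /databricks/jars \
 && curl -fsSL -o /databricks/jars/spark-excel_2.12-0.14.0.jar \
      https://repo1.maven.org/maven2/com/crealytics/spark-excel_2.12/0.14.0/spark-excel_2.12-0.14.0.jar \
 && curl -fsSL -o /databricks/jars/mysql-connector-java-8.0.29.jar \
      https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.29/mysql-connector-java-8.0.29.jar

# Python layer: install into the runtime's own virtualenv
COPY requirements.txt /app/requirements.txt
RUN /databricks/python3/bin/pip install -r /app/requirements.txt
```

Putting the jar layer before the `COPY` means that editing `requirements.txt` invalidates only the Python layer, so the jars are not re-downloaded on every rebuild.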


09-04-2022 12:04 AM

Hi @Ignacio Guillamondegui

Hope all is well! I just wanted to check whether you were able to resolve your issue. Would you be happy to share the solution or mark an answer as best? Otherwise, please let us know if you need more help.

We'd love to hear from you.

Thanks!


03-30-2023 01:50 AM

Hi! I am facing a similar issue.

I tried this Dockerfile:

```dockerfile
FROM databricksruntime/standard:10.4-LTS

ENV DEBIAN_FRONTEND=noninteractive

RUN apt update && apt install -y maven && rm -rf /var/lib/apt/lists/*

RUN /databricks/python3/bin/pip install databricks-cli

RUN mkdir /databricks/jars

RUN mvn org.apache.maven.plugins:maven-dependency-plugin:2.8:get -Dartifact=com.microsoft.azure.kusto:kusto-spark_3.0_2.12:2.5.2 -Ddest=/databricks/jars/

RUN /databricks/python3/bin/pip install azure-kusto-data==2.1.1
```

But it doesn't seem to work. I get the error `java.lang.NoClassDefFoundError: com/microsoft/azure/kusto/data/exceptions/DataServiceException`.

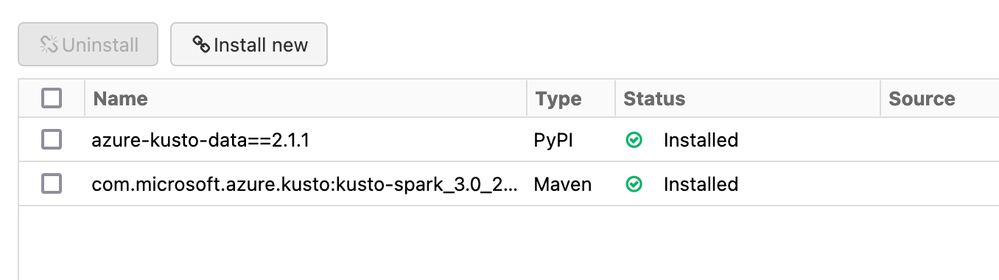

If I install the libraries through the UI instead, as in the screenshot, everything works.
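A hedged reading of that error: `DataServiceException` belongs to the Kusto Java client (`kusto-data`), which the Spark connector pulls in as a transitive dependency. `dependency:get` with `-Ddest` copies only the requested artifact into the destination folder, and `pip install azure-kusto-data` installs the Python client, which puts nothing on the JVM classpath. Installing a Maven library through the cluster UI works because Databricks resolves the transitive dependencies for you. The minimal multi-stage sketch below lets Maven copy the connector together with its runtime dependencies. It assumes that `/databricks/jars` is picked up by the cluster (as in the post above), that the connector declares Spark itself with `provided` scope, and that the `maven:3.9-eclipse-temurin-17` builder tag is available; the throwaway POM coordinates `local:deps:1` are hypothetical placeholders.

```dockerfile
# Stage 1: let Maven resolve the connector and its transitive dependencies
FROM maven:3.9-eclipse-temurin-17 AS deps
WORKDIR /build
# Throwaway pom.xml whose only job is to declare the connector as a dependency
# (the "local:deps:1" coordinates are hypothetical placeholders)
RUN printf '%s\n' \
      '<project xmlns="http://maven.apache.org/POM/4.0.0">' \
      '  <modelVersion>4.0.0</modelVersion>' \
      '  <groupId>local</groupId>' \
      '  <artifactId>deps</artifactId>' \
      '  <version>1</version>' \
      '  <dependencies>' \
      '    <dependency>' \
      '      <groupId>com.microsoft.azure.kusto</groupId>' \
      '      <artifactId>kusto-spark_3.0_2.12</artifactId>' \
      '      <version>2.5.2</version>' \
      '    </dependency>' \
      '  </dependencies>' \
      '</project>' > pom.xml
# Copy the connector plus its compile- and runtime-scoped dependencies;
# provided-scoped ones (such as Spark) are skipped
RUN mvn -B -q dependency:copy-dependencies -DincludeScope=runtime -DoutputDirectory=/build/jars

# Stage 2: the LAST stage is the image the cluster runs, so it must be the Databricks runtime
FROM databricksruntime/standard:10.4-LTS
COPY --from=deps /build/jars/ /databricks/jars/
RUN /databricks/python3/bin/pip install databricks-cli azure-kusto-data==2.1.1
```

The copied set may include libraries the runtime already ships in other versions (JSON or HTTP libraries, for example); if that causes conflicts, adding `<exclusions>` for them in the throwaway POM is a reasonable next step.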
