Delta Live Table Pipeline - Azure cluster fail

08-02-2022 10:22 AM

Failed to launch pipeline cluster 0802-171503-4m02lexd: The operation could not be performed on your account with the following error message: azure_error_code: OperationNotAllowed, azure_error_message: Operation could not be completed as it results in...

I've also noticed that it is not deploying in the same region as my Resource Group or the Databricks instance, which are both in South Central US. I've also specified the specs of the VM/cluster as described in the top solution in this forum.

{
  "id": "faa79e00-1286-11ed-82b7-ea83de10642f",
  "sequence": {
    "control_plane_seq_no": 1658882570762832
  },
  "origin": {
    "cloud": "Azure",
    "region": "westus",
    "org_id": 5389340109879859,
    "pipeline_id": "24007e7f-15b9-45e8-9628-500ba30c826c",
    "pipeline_name": "DLT-Demo-81-aaron@clevelanalytics.com",
    "update_id": "23fff1f7-a765-46dc-82bc-43f04ef451c1"
  },
  "timestamp": "2022-08-02T17:17:25.856Z",
  "message": "Update 23fff1 is FAILED.",
  "level": "ERROR",
  "error": {
    "exceptions": [
      {
        "message": "Failed to launch pipeline cluster 0802-171503-4m02lexd: The operation could not be performed on your account with the following error message: azure_error_code: OperationNotAllowed, azure_error_message: Operation could not be completed as it results in..."
      }
    ],
    "fatal": true
  },
  "details": {
    "update_progress": {
      "state": "FAILED"
    }
  },
  "event_type": "update_progress"
}
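For anyone triaging a similar failure, here is a minimal Python sketch that pulls the Azure error code, region, and update state out of an event record like the one above. The function name `summarize_event` and the regular expression are my own assumptions for illustration, not part of any Databricks API, and the sample is a trimmed copy of the event with the truncated message left as posted.

```python
import json
import re

# Matches the "azure_error_code: <Code>" fragment seen in the exception message.
AZURE_CODE_RE = re.compile(r"azure_error_code:\s*(\w+)")


def summarize_event(event_json: str) -> dict:
    """Extract the fields that matter for triage from one event-log record."""
    event = json.loads(event_json)
    codes = []
    for exc in event.get("error", {}).get("exceptions", []):
        match = AZURE_CODE_RE.search(exc.get("message", ""))
        if match:
            codes.append(match.group(1))
    return {
        "level": event.get("level"),
        "region": event.get("origin", {}).get("region"),
        "state": event.get("details", {}).get("update_progress", {}).get("state"),
        "azure_error_codes": codes,
    }


# Trimmed copy of the event record posted above.
sample = """
{
  "origin": {"cloud": "Azure", "region": "westus"},
  "level": "ERROR",
  "error": {
    "exceptions": [
      {"message": "Failed to launch pipeline cluster 0802-171503-4m02lexd: The operation could not be performed on your account with the following error message: azure_error_code: OperationNotAllowed, azure_error_message: Operation could not be completed as it results in..."}
    ],
    "fatal": true
  },
  "details": {"update_progress": {"state": "FAILED"}},
  "event_type": "update_progress"
}
"""

summary = summarize_event(sample)
print(summary)
```

The extracted code is the part worth searching for in the Azure documentation, since the rest of the message is cut off in the event log.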

9 REPLIES

08-02-2022 12:33 PM

08-02-2022 12:35 PM

Yeah, I checked that and it looks good so far. What I did find is that if I flip it to Production, it works. Then it seems I can flip it back to Development and it works fine after that. Weird? Admittedly, I'm just learning this stuff, so I don't really know what I'm doing yet.

08-02-2022 01:09 PM

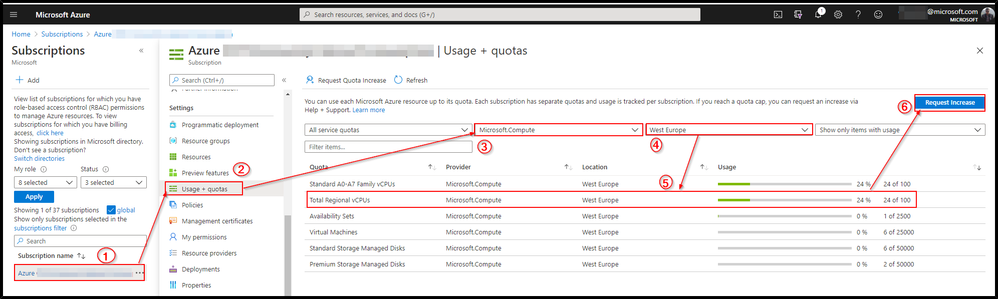

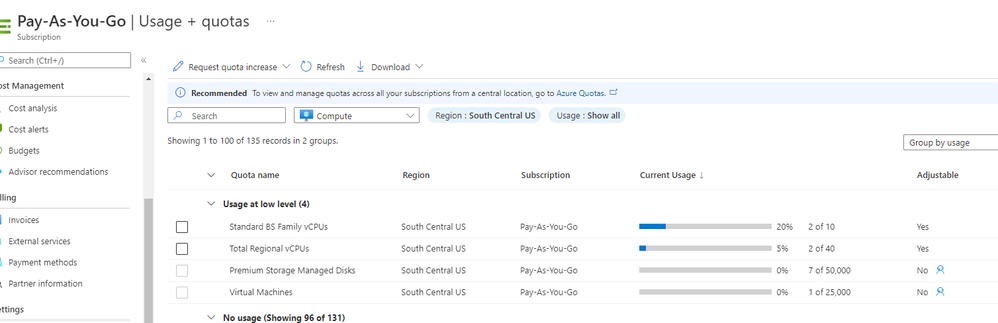

So I think you were correct. Either the quota data in my subscription was stale the first time I checked it, or I just screwed up. I checked again and the cores quota was at the limit. I got that increased, but now I'm getting another error, this time related to the quota for public IP addresses. I was able to raise the cores quota without a ticket in Azure, but this one requires a support request. Logging this for others.
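For others hitting the same wall, a rough Python sketch of the headroom check may help. It assumes the usage records have the shape I would expect from `az vm list-usage --location <region> -o json` and `az network list-usages --location <region> -o json` (`currentValue`, `limit`, `name.localizedValue`). The quota names, the current values, and the "needed" figures are made-up illustrations; only the limit of 10 public IPs comes from the error in this thread.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    name: str
    current: int
    limit: int

    @property
    def remaining(self) -> int:
        return self.limit - self.current


def parse_usages(records):
    """Convert raw usage records (assumed Azure CLI JSON shape) into Usage objects."""
    return [
        Usage(r["name"]["localizedValue"], int(r["currentValue"]), int(r["limit"]))
        for r in records
    ]


def blocked(usages, needed):
    """Return the quota names whose remaining headroom is below what is needed."""
    return [
        u.name
        for u in usages
        if u.name in needed and u.remaining < needed[u.name]
    ]


# Hypothetical sample data; real values come from the Azure CLI or portal.
records = [
    {"name": {"value": "cores", "localizedValue": "Total Regional vCPUs"},
     "currentValue": 8, "limit": 10},
    {"name": {"value": "PublicIPAddresses", "localizedValue": "Public IP Addresses"},
     "currentValue": 10, "limit": 10},
]

# Assumption: a 1-worker cluster needs 2 nodes of 4 vCPUs each and 2 public IPs.
needed = {"Total Regional vCPUs": 8, "Public IP Addresses": 2}

shortfalls = blocked(parse_usages(records), needed)
print(shortfalls)
```

Running the check per region matters here, because both quotas in this thread are counted per subscription and per region.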

08-02-2022 01:11 PM

Here is the error I'm getting now:

{
  "reason": {
    "code": "CLOUD_PROVIDER_LAUNCH_FAILURE",
    "type": "CLOUD_FAILURE",
    "parameters": {
      "azure_error_code": "PublicIPCountLimitReached",
      "azure_error_message": "Cannot create more than 10 public IP addresses for this subscription in this region. (Azure request Id: 3f096c68-2d5d-4bcf-bb19-91b04ecd041e)",
      "databricks_error_message": "Error code: PublicIPCountLimitReached, error message: Cannot create more than 10 public IP addresses for this subscription in this region. (Azure request Id: 3f096c68-2d5d-4bcf-bb19-91b04ecd041e)"
    }
  }
}

08-02-2022 03:10 PM

So I stood up a fresh instance of Databricks and now I'm back to the original error. All the quotas I can see are good to go, except maybe for NetworkWatcher. This is confusing.

{
  "id": "d4246d80-12ae-11ed-82b7-ea83de10642f",
  "sequence": {
    "control_plane_seq_no": 1658882570791530
  },
  "origin": {
    "cloud": "Azure",
    "region": "westus",
    "org_id": 2417202028545588,
    "pipeline_id": "fd140f96-5ab6-4557-b9fe-ece2d1ff8e2c",
    "pipeline_name": "LiveTablePipelineTest",
    "update_id": "2b8b7f5b-2424-423d-bc3b-ed9257b37a5d"
  },
  "timestamp": "2022-08-02T22:02:41.112Z",
  "message": "Update 2b8b7f is FAILED.",
  "level": "ERROR",
  "error": {
    "exceptions": [
      {
        "message": "Failed to launch pipeline cluster 0802-220150-qaojk6yb: The operation could not be performed on your account with the following error message: azure_error_code: OperationNotAllowed, azure_error_message: Operation could not be completed as it results in..."
      }
    ],
    "fatal": false
  },
  "details": {
    "update_progress": {
      "state": "FAILED"
    }
  },
  "event_type": "update_progress"
}

----------------------------------------------------------

{
  "id": "fd140f96-5ab6-4557-b9fe-ece2d1ff8e2c",
  "clusters": [
    {
      "label": "default",
      "num_workers": 1
    }
  ],
  "development": true,
  "continuous": false,
  "channel": "CURRENT",
  "edition": "ADVANCED",
  "photon": false,
  "libraries": [
    {
      "notebook": {
        "path": "/Users/aaron@clevelanalytics.com/DeltaLiveTableTest"
      }
    }
  ],
  "name": "LiveTablePipelineTest",
  "storage": "dbfs:/mnt/land",
  "target": "Netsuite"
}
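The settings above do not pin a node type, so the vCPU cost of the cluster depends on whatever default gets picked. Below is a small Python sketch, under my own assumptions, of how the `clusters` entry could be extended with `node_type_id` and `driver_node_type_id` and how many vCPUs that would need. `Standard_DS3_v2` (4 vCPUs) is only an example size, the helper names are hypothetical, and the estimate counts one driver plus `num_workers` workers per cluster entry and ignores any separate maintenance cluster.

```python
import copy
import json

# Trimmed copy of the pipeline settings posted above.
settings = {
    "clusters": [{"label": "default", "num_workers": 1}],
    "development": True,
    "name": "LiveTablePipelineTest",
}


def with_node_type(settings, node_type_id, label="default"):
    """Return a copy of the settings with the node type pinned on one cluster label."""
    updated = copy.deepcopy(settings)
    for cluster in updated.get("clusters", []):
        if cluster.get("label") == label:
            cluster["node_type_id"] = node_type_id
            cluster["driver_node_type_id"] = node_type_id
    return updated


def vcpus_needed(settings, cores_per_node):
    """Estimate vCPUs: one driver plus num_workers workers per cluster entry."""
    return sum(
        (c.get("num_workers", 0) + 1) * cores_per_node
        for c in settings.get("clusters", [])
    )


pinned = with_node_type(settings, "Standard_DS3_v2")
print(json.dumps(pinned["clusters"], indent=2))
print(vcpus_needed(pinned, cores_per_node=4))
```

Comparing that estimate against the remaining regional and per-family vCPU quota is a quick way to see whether a launch is likely to hit `OperationNotAllowed` again.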

08-02-2022 03:12 PM

What I still don't understand is why it is trying to create the cluster in the "westus" region. The resource group and Databricks instance are both in "southcentral us". I have no idea how I got it working earlier when flipping back and forth between Development and Production.

08-02-2022 03:14 PM

08-02-2022 03:34 PM

So it started working again. I'm not seeing a rhyme or reason to this. When it does work it picks the correct region.

09-07-2022 12:16 AM

Hi there @Aaron LeBato

Hope all is well! Just wanted to check in if you were able to resolve your issue and would you be happy to share the solution or mark an answer as best? Else please let us know if you need more help.

We'd love to hear from you.

Thanks!
