
Lesson 6.1 of Data Engineering. Error when reading...


09-02-2022 01:31 AM

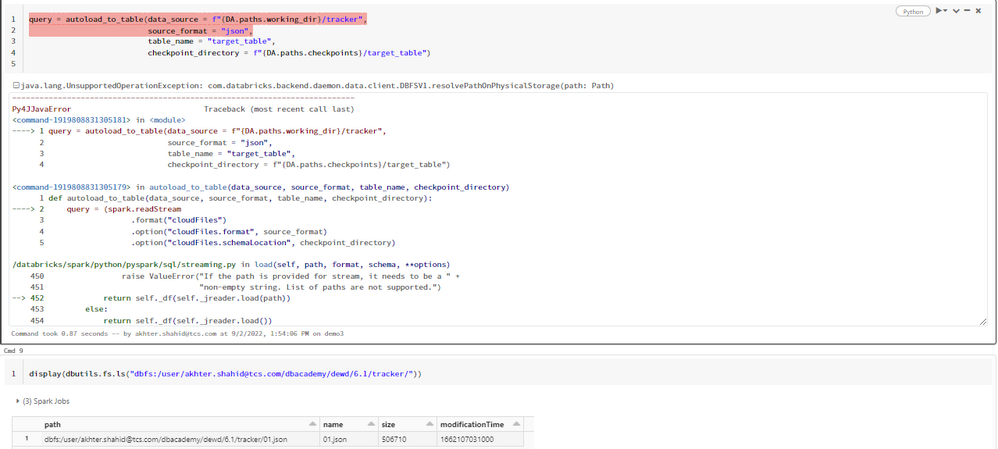

The function below is defined without errors:

```python
def autoload_to_table(data_source, source_format, table_name, checkpoint_directory):
    # `spark` is the SparkSession that Databricks notebooks predefine.
    query = (spark.readStream
                  .format("cloudFiles")  # Auto Loader source
                  .option("cloudFiles.format", source_format)
                  .option("cloudFiles.schemaLocation", checkpoint_directory)
                  .load(data_source)
                  .writeStream
                  .option("checkpointLocation", checkpoint_directory)
                  .option("mergeSchema", "true")
                  .table(table_name))
    return query
```

I receive an error when calling the function. Please let me know where the problem is.




09-02-2022 01:33 AM

Hi @Shahid Akhter, could you please copy the full error stack trace and paste it here?


09-02-2022 01:52 AM

```
java.lang.UnsupportedOperationException: com.databricks.backend.daemon.data.client.DBFSV1.resolvePathOnPhysicalStorage(path: Path)
---------------------------------------------------------------------------
Py4JJavaError                             Traceback (most recent call last)
<command-1919808831305181> in <module>
----> 1 query = autoload_to_table(data_source = f"{DA.paths.working_dir}/tracker",
      2                           source_format = "json",
      3                           table_name = "target_table",
      4                           checkpoint_directory = f"{DA.paths.checkpoints}/target_table")

<command-1919808831305179> in autoload_to_table(data_source, source_format, table_name, checkpoint_directory)
      1 def autoload_to_table(data_source, source_format, table_name, checkpoint_directory):
----> 2     query = (spark.readStream
      3                   .format("cloudFiles")
      4                   .option("cloudFiles.format", source_format)
      5                   .option("cloudFiles.schemaLocation", checkpoint_directory)

/databricks/spark/python/pyspark/sql/streaming.py in load(self, path, format, schema, **options)
    450                 raise ValueError("If the path is provided for stream, it needs to be a " +
    451                                  "non-empty string. List of paths are not supported.")
--> 452             return self._df(self._jreader.load(path))
    453         else:
    454             return self._df(self._jreader.load())
```
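The informative part of this trace is the Java exception on its first line: `DBFSV1.resolvePathOnPhysicalStorage` is unsupported, and the failure is raised from `.load(...)` on the `cloudFiles` reader, i.e. while Auto Loader resolves the source path, before the writer is ever reached. If you want a notebook to fail with a more readable message, a wrapper along these lines could help. It is a minimal sketch: the name `start_stream_with_hint` and its matching rule are hypothetical, and it assumes only that the Python exception's string form contains the Java message (as it does in the output above).

```python
def start_stream_with_hint(start_fn, *args, **kwargs):
    """Hypothetical helper: call a stream-starting function such as
    autoload_to_table and, if it fails with the DBFSV1 error seen in this
    thread, re-raise it with a clearer hint. Other errors pass through."""
    try:
        return start_fn(*args, **kwargs)
    except Exception as exc:
        message = str(exc)
        # Assumption: the Java exception text is part of str(exc).
        if "UnsupportedOperationException" in message and "DBFSV1" in message:
            raise RuntimeError(
                "Auto Loader (cloudFiles) could not resolve the source path on "
                "DBFS v1; this workspace may not support Auto Loader."
            ) from exc
        raise  # unrelated failure: keep the original exception
```

Usage would look like `query = start_stream_with_hint(autoload_to_table, data_source=..., source_format="json", table_name="target_table", checkpoint_directory=...)`; the original exception stays available as `__cause__`.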


09-02-2022 04:45 AM

Hi @LearnerShahid,

I've tested this in my own environment and can reproduce the error when using DBR 7.3. Could you please try DBR 10.4 or later and see whether that solves the issue?
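To confirm which Databricks Runtime (DBR) a notebook is actually attached to before and after switching clusters, a small check like the one below could be used. It is a sketch that assumes Databricks sets the `DATABRICKS_RUNTIME_VERSION` environment variable on its clusters (for example `"10.4"`); verify that on your own workspace. The helper names are hypothetical.

```python
import os


def parse_runtime_version(version):
    """Turn "10.4" or "10.4.x-scala2.12" into a comparable (major, minor) tuple."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def runtime_at_least(required=(10, 4), version=None):
    """Return True if the runtime is at least `required`.

    If `version` is not given, fall back to the DATABRICKS_RUNTIME_VERSION
    environment variable (an assumption about the Databricks environment).
    """
    version = version or os.environ.get("DATABRICKS_RUNTIME_VERSION")
    if not version:
        return False  # not on Databricks, or the variable is missing
    return parse_runtime_version(version) >= tuple(required)
```

In this thread the cluster was already on 10.4 LTS, so such a check would pass; as the follow-up shows, a newer runtime alone did not fix the error on Community Edition.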


09-02-2022 08:19 AM

Hi Cedric/Team,

I am using Databricks Community Edition - 10.4 LTS (includes Apache Spark 3.2.1, Scala 2.12).

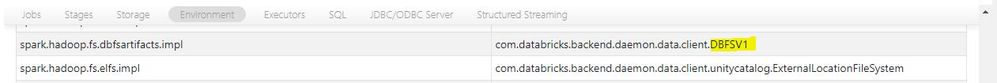

Can you please confirm whether you tested this on Community Edition? According to the Community Edition cluster environment details, it runs on DBFSV1 (screenshot attached) rather than the newer DBFSV2. If this issue is caused by the difference between DBFSV1 and DBFSV2, kindly let me know how to fix it, as it is also causing problems in the subsequent lessons that use Spark Streaming.

FYI, I have tried running the code multiple times, creating a different cluster each time, and it still shows the same error.

Anonymous, 09-05-2022 04:48 AM (accepted solution)

Auto Loader is not supported on Community Edition.
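If Auto Loader is unavailable, one possible workaround (an assumption, not something confirmed in this thread) is the built-in Structured Streaming file source, which reads a directory of JSON files without the `cloudFiles` format. Unlike Auto Loader, it does not infer and track the schema for you by default, so the sketch below takes the schema explicitly as a DDL string. The function name `stream_json_to_table` and the `schema_ddl` parameter are hypothetical; `DataStreamWriter.toTable` requires Spark 3.1 or later (the 10.4 LTS runtime mentioned above ships Spark 3.2.1).

```python
def stream_json_to_table(spark, data_source, schema_ddl, table_name, checkpoint_directory):
    """Hypothetical Auto Loader-free variant of autoload_to_table.

    Reads JSON files with the plain Structured Streaming file source and
    writes them to a table. `schema_ddl` is a DDL string such as
    "id STRING, ts LONG", because file-source streams need a schema up front.
    """
    query = (spark.readStream
                  .format("json")        # built-in file source, not "cloudFiles"
                  .schema(schema_ddl)    # accepts a DDL string or a StructType
                  .load(data_source)
                  .writeStream
                  .option("checkpointLocation", checkpoint_directory)
                  .toTable(table_name))  # Spark 3.1+ API
    return query
```

On Databricks you would pass the notebook's predefined `spark` object plus the same paths used in the lesson; the schema string is yours to fill in to match the files being read. Taking `spark` as a parameter, rather than relying on the global, keeps the function easy to test.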


09-09-2022 04:21 PM

Thank you for sharing this. I will mark this as the best response in this thread.
