Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- Is anyone else experiencing intermittent "Failure ...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-23-2022 03:16 PM

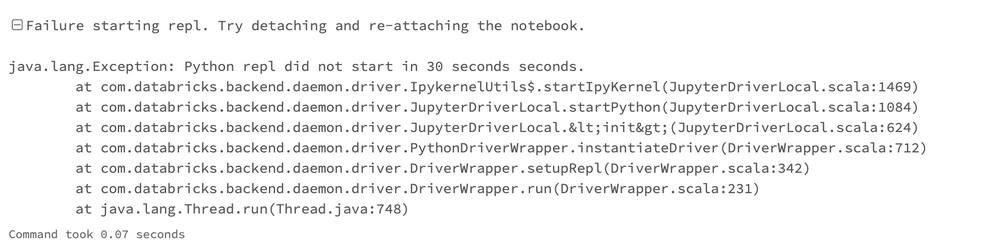

I have a Multi-Task Job that is running a bunch of PySpark notebooks and about 30-60% of the time, my jobs fail with the following error:

What's confusing the living daylights out of me is that this isn't an interactive cluster so I'm not sure what the cause is. Any help would be appreciated.

Labels:

- Labels:

-

Multi-Task Job

-

PySpark Jobs

-

Repl

1 ACCEPTED SOLUTION

Accepted Solutions

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-28-2022 11:34 AM

I was going to assume it has something to do with the runtime. Please bear with us as we work to improve on our end. I am glad this work-around is efficient for now.

7 REPLIES 7

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-28-2022 09:07 AM

Hi @Jordan Yaker are you using DCS (Databricks Container Services)? Ands also, what runtime are you using?

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-28-2022 11:07 AM

@Pearl Ubaru I'm not using DCS and I was using 11.3. My account rep talked to some people internally and suggested rolling back to 10.4. I ended up doing that and the problem seems to have gone away. Unfortunately this leaves me without the ability to utilize the `availableNow`, but I'd rather have a stable system than that trigger.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-28-2022 11:34 AM

I was going to assume it has something to do with the runtime. Please bear with us as we work to improve on our end. I am glad this work-around is efficient for now.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-21-2022 04:05 AM

Hi. Did you ever got a resolution to this problem outside of rolling back to 10.4? I have recently moved some workloads over to runtime 11.3 and am experiencing intermittent "repl did not start in 30 seconds." errors.

I have increased the repl timeout as per Microsoft advice to 150 seconds but this hasn't fixed the issue. They have also suggested increasing the size of the cluster, but this doesn't feel like the right solution.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-21-2022 04:57 AM

I did not. 11.3 still seems to have stability issues despite it being the next LTS. I still get the REPL errors along with "The Python kernel is unresponsive." It's really annoying.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-21-2022 06:20 AM

Hi Jordan. Thanks for the response! Annoying that there isn't an official answer. I have an open ticket with Microsoft who are also looking into it for me, I will update here if I get anything concrete!

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-24-2022 01:53 AM

Had the following update from Databricks support.

"We can see the below error just before the repls started failing -

22/11/17 05:32:07 ERROR WSFSDriverManager$: Failed to get associated pid for WSFS

In the driver logs we could see several repls being initialized during that time. Going through similar scenarios with other customers in our backlogs we have seen reducing the concurrency helps mitigate the problem. Increasing the driver size will help as well since it will provide more cores for concurrent execution."

Still not convinced this gets to the root of the problem as everything seems stable now we have rolled clusters back to 10.4...

Announcements

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Azure Databricks with standard private link cluster event log error: "Metastore down"... in Administration & Architecture

- Performance Issue with XML Processing in Spark Databricks in Data Engineering

- Intermittent SQL Failure on Databricks SQL Warehouse in Warehousing & Analytics

- databricks notebook cell doesn't show the output intermittently in Warehousing & Analytics

- ModuleNotFoundError: No module named 'pulp' in Data Engineering