Python wheel cannot be installed as a library


10-12-2022 01:08 PM

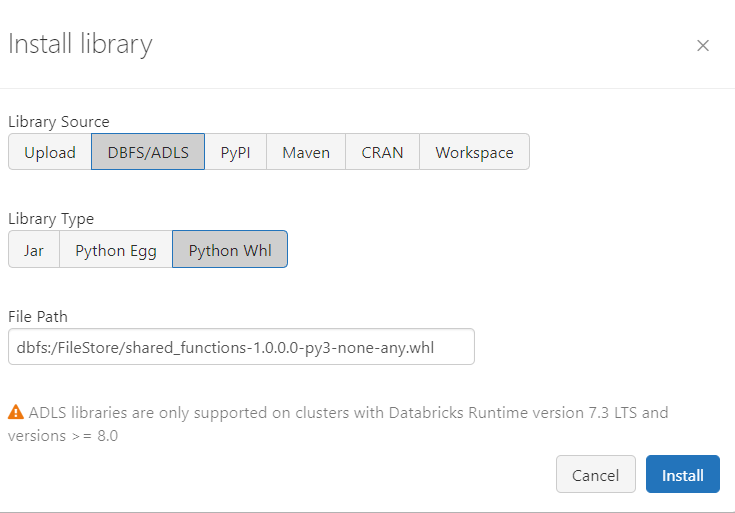

When I try to install the Python wheel (.whl) library, I get the error below. However, I can install it as a JAR and it works fine. One difference is that I create my own cluster by cloning an existing cluster, and I copy the .whl to a folder called testing in DBFS.

Is it mandatory for the .whl to be in the dbfs:/FileStore/jars/ folder?

    Library installation attempted on the driver node of cluster 0930-154215-fcm3m6pc and failed. Please refer to the following error message to fix the library or contact Databricks support. Error Code: DRIVER_LIBRARY_INSTALLATION_FAILURE. Error Message: org.apache.spark.SparkException: Process List(/databricks/python/bin/pip, install, --upgrade, --find-links=/local_disk0/spark-1032c562-aec3-4228-b221-6a5b507e6b65/userFiles-5ea00ae0-697e-4804-98e4-987fd932aebe, /local_disk0/spark-1032c562-aec3-4228-b221-6a5b507e6b65/userFiles-5ea00ae0-697e-4804-98e4-987fd932aebe/shared_functions-1.0.0.0-py3-none-any.whl, --disable-pip-version-check) exited with code 1. ERROR: shared-functions has an invalid wheel, .dist-info directory 'shared_functions-1.0.0.0.dist-info' does not start with 'shared-functions'

Labels: Process List, Python, Python Wheel

Accepted solution


12-19-2022 05:52 AM

The issue was that the package was renamed after it was installed on the cluster, so it was not recognized.

5 replies


10-13-2022 05:51 AM

@Vikas B: What DBR version are you using?

Could you please run the following from a notebook?

    %sh
    ls -l /dbfs/FileStore/shared_functions-1.0.0.0-py3-none-any.whl


10-13-2022 08:02 AM

@Sivaprasad C S: This is what I get when I run that command:

    -rwxrwxrwx 1 root root 20951 Oct 12 16:51 /dbfs/FileStore/shared_functions-1.0.0.0-py3-none-any.whl
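The listing confirms that the file exists, but not which names are recorded inside it. Because a wheel is a zip archive, one way to check is a small helper built on the standard-library `zipfile` and `email.parser` modules that returns the `.dist-info` directory name and the `Name:`/`Version:` fields of its METADATA file. This is a minimal sketch: `inspect_wheel` is a hypothetical helper, and it assumes the archive contains one well-formed `.dist-info` directory.

```python
import zipfile
from email.parser import Parser


def inspect_wheel(path):
    """Return (dist-info directory names, {"Name": ..., "Version": ...}) for a wheel.

    `path` may be a filesystem path or a binary file-like object.
    """
    with zipfile.ZipFile(path) as whl:
        # Collect top-level directories whose names end in ".dist-info".
        top_level = {entry.split("/", 1)[0] for entry in whl.namelist()}
        dist_info_dirs = sorted(d for d in top_level if d.endswith(".dist-info"))

        metadata = {}
        if dist_info_dirs:
            # Assumption: a single .dist-info directory; take the first if several exist.
            raw = whl.read(f"{dist_info_dirs[0]}/METADATA").decode("utf-8")
            # METADATA uses email-header syntax, so parse only the headers.
            headers = Parser().parsestr(raw, headersonly=True)
            metadata = {"Name": headers["Name"], "Version": headers["Version"]}
    return dist_info_dirs, metadata
```

From a notebook, calling `inspect_wheel("/dbfs/FileStore/shared_functions-1.0.0.0-py3-none-any.whl")` (assuming the file is reachable through the `/dbfs` mount, as the `ls -l` output suggests) shows whether the directory name and the `Name:` field use the same spelling as the file name.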


10-16-2022 12:02 PM

I think you have a hyphen (-) in some places and an underscore (_) in others. Please make them consistent.
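The error message fits this: it compares the project name `shared-functions` (hyphen) against the directory name `shared_functions-1.0.0.0.dist-info` (underscore). The sketch below contrasts a plain prefix check, which fails for these two strings, with the PEP 503 normalization rule (lowercase, collapse runs of `-`, `_` and `.` into a single `-`), under which both spellings name the same project. It only illustrates the two comparisons and is not pip's implementation; the function names are hypothetical.

```python
import re


def normalize(name):
    """PEP 503 normalization: lowercase, collapse runs of -, _ or . into one -."""
    return re.sub(r"[-_.]+", "-", name).lower()


def literal_prefix_match(project, dist_info_dir):
    """Plain string check of the kind described in the error message."""
    return dist_info_dir.startswith(project)


def normalized_match(project, dist_info_dir):
    """Compare normalized names instead of raw strings."""
    # Assumption: the directory follows the "{name}-{version}.dist-info" layout,
    # with hyphens in the name escaped to underscores, so the name part is
    # everything before the first hyphen.
    name_part = dist_info_dir.split("-", 1)[0]
    return normalize(name_part) == normalize(project)
```

With the names from the error, `literal_prefix_match` returns False while `normalized_match` returns True. Renaming the .whl file changes only the file name, not the names recorded inside the archive, which is one reason to keep a single spelling throughout.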

Anonymous


11-19-2022 10:49 PM

Hi @Vikas B

Hope all is well!

Just wanted to check in: were you able to resolve your issue? Would you be happy to share the solution or mark an answer as best? Otherwise, please let us know if you need more help.

We'd love to hear from you.

Thanks!
