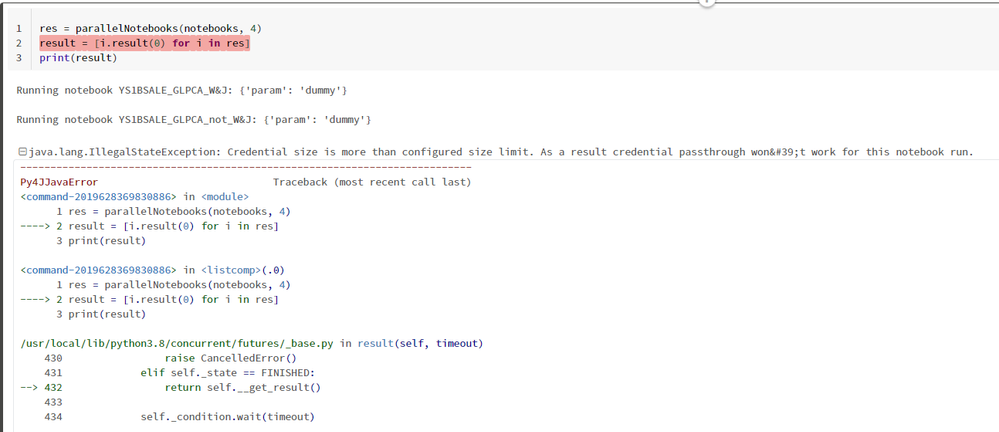

I get this error when trying to execute slave notebooks in parallel from a PySpark "master notebook".
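
For context, here is a minimal sketch of the usual way to fan out child notebooks from a master notebook. It is not my actual code. It assumes the children are started through a thread pool. The helper `run_notebooks_in_parallel` and the paths are hypothetical. Inside Databricks, the runner passed in would typically be something like `lambda p: dbutils.notebook.run(p, 3600, {})`. Outside Databricks, a stand-in function is used so the sketch runs anywhere.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, List


def run_notebooks_in_parallel(
    paths: List[str],
    run_notebook: Callable[[str], str],
    max_workers: int = 4,
) -> Dict[str, str]:
    """Hypothetical helper: run each child notebook path on a thread pool.

    Returns a dict mapping each path to the value its run returned.
    An exception raised by any child run propagates to the caller.
    """
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit one task per child notebook and remember which path it belongs to.
        futures = {pool.submit(run_notebook, path): path for path in paths}
        for future in as_completed(futures):
            results[futures[future]] = future.result()
    return results


if __name__ == "__main__":
    # Stand-in runner so the sketch executes outside Databricks; in a notebook
    # this would be replaced by a call to dbutils.notebook.run.
    def fake_run(path: str) -> str:
        return f"done:{path}"

    print(run_notebooks_in_parallel(["./child_a", "./child_b"], fake_run))
```

Injecting the runner as a parameter keeps the threading logic separate from the Databricks-specific call, so the same helper works both from a Job and from an interactive session.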

Note 1: I use the same class, functions, cluster, and credentials for another parallel-notebook use case in the same Databricks instance, and that one works fine.

Note 2: the command works fine when the "master notebook" is launched from a Job, but it returns the error above when the notebook is run manually.

So far I couldn't find any similar errors in the docs or forums.