Orchestrate run of a folder

11-03-2022 05:36 AM

1 ACCEPTED SOLUTION

11-03-2022 07:26 AM

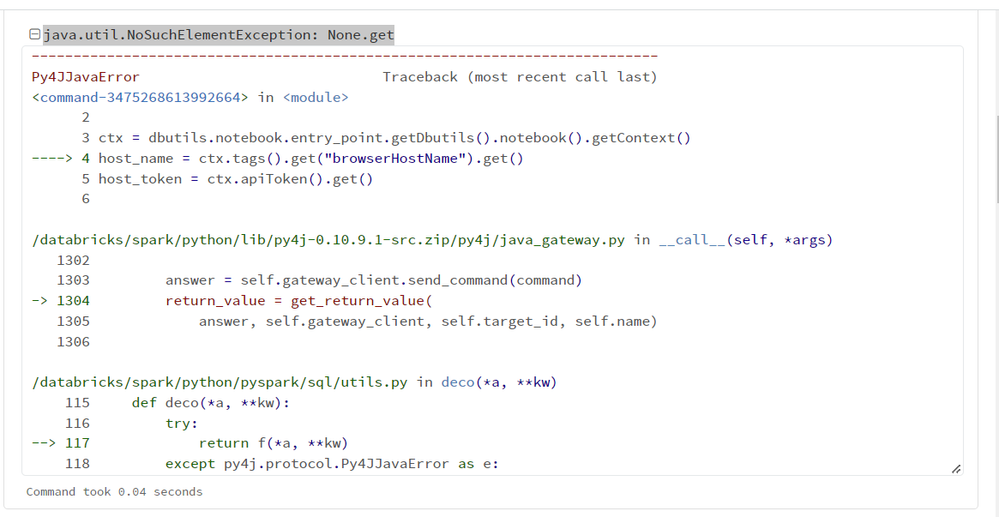

List all notebooks in the folder by calling the Workspace API, then run each one with dbutils.notebook.run:

    import requests

    # Read the workspace host name and an API token from the notebook context
    ctx = dbutils.notebook.entry_point.getDbutils().notebook().getContext()
    host_name = ctx.tags().get("browserHostName").get()
    host_token = ctx.apiToken().get()

    notebook_folder = '/Users/hubert.dudek@databrickster.com'

    # List the objects in the folder through the Workspace API
    response = requests.get(
        f'https://{host_name}/api/2.0/workspace/list',
        headers={'Authorization': f'Bearer {host_token}'},
        json={'path': notebook_folder}
    ).json()

    # Run each notebook in turn, with a 1800-second timeout per run
    for notebook in response['objects']:
        if notebook['object_type'] == 'NOTEBOOK':
            dbutils.notebook.run(notebook['path'], 1800)
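
If one notebook in the folder fails, the loop above stops at that point. A minimal sketch of a more defensive variant follows, assuming you want the remaining notebooks to keep running. The helper names `notebook_paths` and `run_all` are hypothetical, not part of dbutils or the Workspace API, and the runner is passed in as an argument so the logic can be tried outside Databricks.

```python
from typing import Callable, Dict, List, Tuple


def notebook_paths(listing: dict) -> List[str]:
    """Return the paths of NOTEBOOK objects in a workspace/list response, sorted."""
    # Guard against a missing 'objects' key instead of raising KeyError.
    objects = listing.get("objects", [])
    return sorted(o["path"] for o in objects if o.get("object_type") == "NOTEBOOK")


def run_all(
    paths: List[str],
    run: Callable[[str, int], str],
    timeout_seconds: int = 1800,
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Run every notebook and collect results and errors per path."""
    results: Dict[str, str] = {}
    errors: Dict[str, str] = {}
    for path in paths:
        try:
            results[path] = run(path, timeout_seconds)
        except Exception as exc:
            # Record the failure and continue with the next notebook.
            errors[path] = str(exc)
    return results, errors
```

Inside a notebook this could be called as `results, errors = run_all(notebook_paths(response), dbutils.notebook.run)`, where `response` is the parsed listing from the snippet above. Sorting the paths is a design choice here so that the run order is predictable.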

3 REPLIES

11-03-2022 05:49 AM

Hello, could you specify what the file type would be? To run several files, you could use an asterisk (*) as a wildcard.
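
The reply does not say where the asterisk would be entered. One possible reading, sketched here under the assumption that the notebook paths have already been listed, is to filter them with a shell-style pattern using Python's standard `fnmatch` module. The function name `select_by_pattern` and the example paths are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import List


def select_by_pattern(paths: List[str], pattern: str) -> List[str]:
    """Keep only the paths that match a shell-style pattern such as '/folder/etl_*'."""
    # fnmatchcase compares case-sensitively on every platform.
    return [p for p in paths if fnmatchcase(p, pattern)]


# Hypothetical notebook paths, for illustration only.
example_paths = [
    "/Users/someone/etl_load",
    "/Users/someone/etl_clean",
    "/Users/someone/report",
]
selected = select_by_pattern(example_paths, "/Users/someone/etl_*")
```

Each selected path could then be passed to dbutils.notebook.run one at a time.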

11-03-2022 12:17 PM
