Spark SQL output multiple small files

11-08-2022 10:30 PM

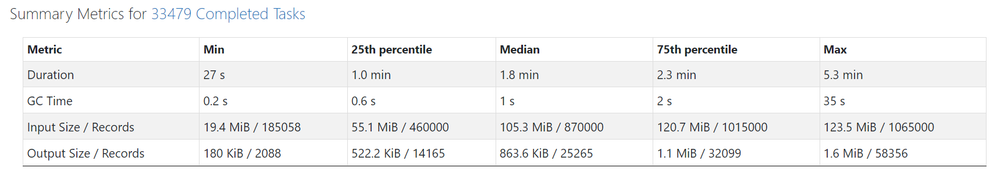

We are running multiple joins involving a large table (about 500 GB). The join output is written as many small files, each 800 KB to 1.5 MB in size. Because of this, the job is split into many tasks and takes a long time to complete. We have tried Spark tuning options such as broadcast joins, changing the partition size, and changing the maximum records per file, but none of these improved performance or fixed the issue. Using coalesce makes the job get stuck at that stage with no progress.
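A common way to avoid many small output files is to choose the number of write partitions from the expected output size, rather than letting it follow the join's shuffle or task count. The sketch below is a minimal illustration under stated assumptions: the 128 MB target and the function name `target_partition_count` are hypothetical choices, and the output size has to be estimated separately (for example, from the total size of the small files a previous run produced).

```python
import math

# Hypothetical target of roughly 128 MB per output file (a common choice, not a Spark requirement).
TARGET_FILE_BYTES = 128 * 1024 * 1024


def target_partition_count(estimated_output_bytes: int,
                           target_file_bytes: int = TARGET_FILE_BYTES) -> int:
    """Return how many partitions to write so each output file is close to the target size."""
    if target_file_bytes <= 0:
        raise ValueError("target_file_bytes must be positive")
    # Always ask for at least one partition, even for an empty or tiny output.
    return max(1, math.ceil(estimated_output_bytes / target_file_bytes))


if __name__ == "__main__":
    # Hypothetical numbers: 20,000 files averaging 1 MB each (about 19.5 GB of output).
    small_files = 20_000
    avg_file_bytes = 1 * 1024 * 1024
    total_bytes = small_files * avg_file_bytes
    print(target_partition_count(total_bytes))  # 157
```

The resulting count would then be passed to `DataFrame.repartition(n)` before writing, or expressed in Spark SQL with a `/*+ REPARTITION(n) */` hint. Note the difference from `coalesce(n)`: coalesce merges partitions without a shuffle, so with a large reduction the upstream join work can end up running in only `n` tasks, which may explain why the stage appeared stuck. `repartition(n)` adds a shuffle but keeps the join at its original parallelism.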

Labels: Multiple Tasks, Small Files, SQL Output

2 replies

11-08-2022 11:32 PM

Hi @Arun Balaji, could you please share the error message you are receiving?

11-09-2022 12:35 AM

Hi @Debayan Mukherjee, we don't receive any error, but the job writes many small files, which increases its runtime. We haven't been able to reduce the number of output files with any tuning configuration (we have tried broadcast joins, changing the partition size, changing the maximum records per file, etc.).
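The reply above notes that changing the maximum records per file did not reduce the file count. A simplified model helps show why: `spark.sql.files.maxRecordsPerFile` only caps how many records go into one file, so it can split a task's output into more files but never merge output from different tasks. The function below is a hypothetical illustration, not Spark's writer code; it assumes a non-partitioned write (no `partitionBy`) and that empty tasks write nothing.

```python
import math
from typing import Sequence


def estimated_file_count(records_per_task: Sequence[int], max_records_per_file: int = 0) -> int:
    """Simplified model of how many files a non-partitioned write produces.

    Assumes each non-empty write task opens at least one file and rolls over to a
    new file whenever it reaches max_records_per_file. Zero or negative means no
    limit, mirroring how spark.sql.files.maxRecordsPerFile treats such values.
    partitionBy and other writer edge cases are deliberately ignored.
    """
    total = 0
    for records in records_per_task:
        if records <= 0:
            continue  # assumption: empty tasks write no file
        if max_records_per_file > 0:
            total += math.ceil(records / max_records_per_file)
        else:
            total += 1
    return total


if __name__ == "__main__":
    # Hypothetical workload: 2,000 write tasks with 10,000 records each.
    tasks = [10_000] * 2_000
    print(estimated_file_count(tasks))             # 2000
    print(estimated_file_count(tasks, 1_000_000))  # 2000: a higher cap cannot merge tasks
    print(estimated_file_count(tasks, 5_000))      # 4000: a lower cap only splits files
```

Under this model, fewer files require fewer non-empty write tasks, for example by repartitioning before the write, enabling adaptive query execution's partition coalescing (`spark.sql.adaptive.coalescePartitions.enabled`), or, if the output is a Delta table, compacting it afterwards with `OPTIMIZE`.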
