
My DLT pipeline returns "ACL verification failed"


11-14-2022 04:36 AM

- Python Command

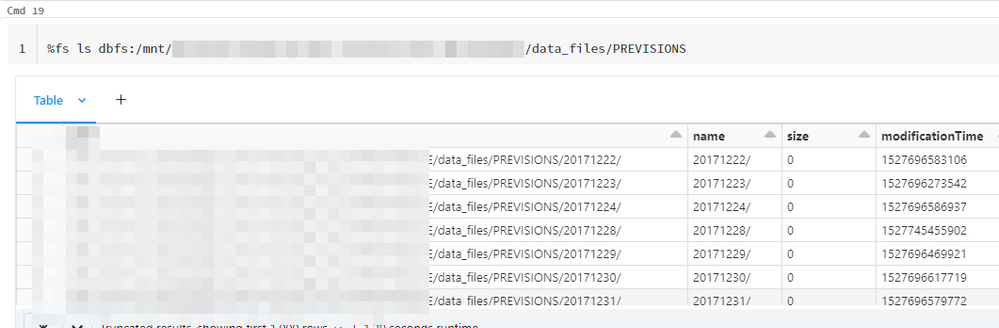

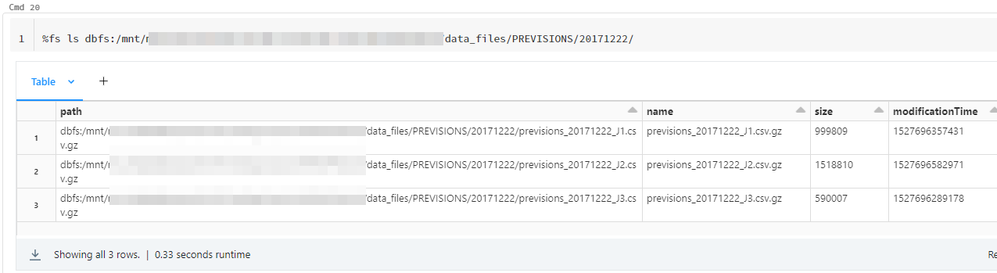

df = spark.read.format('csv').option('sep', ';').option("recursiveFileLookup", "true").load('dbfs:/***/data_files/PREVISIONS/')- Here is the content of the folder

- Each folder contain the following files:

- Full log:

```
org.apache.spark.sql.streaming.StreamingQueryException: Query table_bronze_previsions [id = 3656e25a-9467-4d69-ba21-9398418c2834, runId = 99be5641-dad8-4a8b-a649-dfd15df1bc80] terminated with exception: LISTSTATUS failed with error 0x83090aa2 (Forbidden. ACL verification failed. Either the resource does not exist or the user is not authorized to perform the requested operation.). [acb21291-fb11-4176-af44-71f212877bad] failed with error 0x83090aa2 (Forbidden. ACL verification failed. Either the resource does not exist or the user is not authorized to perform the requested operation.). [acb21291-fb11-4176-af44-71f212877bad][2022-11-14T04:31:18.1255273-08:00] [ServerRequestId:acb21291-fb11-4176-af44-71f212877bad]
    at org.apache.spark.sql.execution.streaming.StreamExecution.org$apache$spark$sql$execution$streaming$StreamExecution$$runStream(StreamExecution.scala:381)
    at org.apache.spark.sql.execution.streaming.StreamExecution$$anon$1.run(StreamExecution.scala:250)
org.apache.hadoop.security.AccessControlException: LISTSTATUS failed with error 0x83090aa2 (Forbidden. ACL verification failed. Either the resource does not exist or the user is not authorized to perform the requested operation.). [acb21291-fb11-4176-af44-71f212877bad] failed with error 0x83090aa2 (Forbidden. ACL verification failed. Either the resource does not exist or the user is not authorized to perform the requested operation.). [acb21291-fb11-4176-af44-71f212877bad][2022-11-14T04:31:18.1255273-08:00] [ServerRequestId:acb21291-fb11-4176-af44-71f212877bad]
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(NativeConstructorAccessorImpl.java:-2)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at com.microsoft.azure.datalake.store.ADLStoreClient.getRemoteException(ADLStoreClient.java:1299)
    at com.microsoft.azure.datalake.store.ADLStoreClient.getExceptionFromResponse(ADLStoreClient.java:1264)
    at com.microsoft.azure.datalake.store.ADLStoreClient.enumerateDirectoryInternal(ADLStoreClient.java:636)
    at com.microsoft.azure.datalake.store.ADLStoreClient.enumerateDirectory(ADLStoreClient.java:610)
    at com.microsoft.azure.datalake.store.ADLStoreClient.enumerateDirectory(ADLStoreClient.java:473)
    at com.microsoft.azure.datalake.store.ADLStoreClient.enumerateDirectory(ADLStoreClient.java:459)
    at com.databricks.adl.AdlFileSystem.listStatus(AdlFileSystem.java:495)
    at com.databricks.backend.daemon.data.client.DBFSV2.$anonfun$listStatusAsIterator$2(DatabricksFileSystemV2.scala:214)
    at com.databricks.s3a.S3AExceptionUtils$.convertAWSExceptionToJavaIOException(DatabricksStreamUtils.scala:66)
    at com.databricks.backend.daemon.data.client.DBFSV2.$anonfun$listStatusAsIterator$1(DatabricksFileSystemV2.scala:178)
    at com.databricks.logging.UsageLogging.$anonfun$recordOperation$1(UsageLogging.scala:413)
    at com.databricks.logging.UsageLogging.executeThunkAndCaptureResultTags$1(UsageLogging.scala:507)
    at com.databricks.logging.UsageLogging.$anonfun$recordOperationWithResultTags$4(UsageLogging.scala:528)
    at com.databricks.logging.Log4jUsageLoggingShim$.$anonfun$withAttributionContext$1(Log4jUsageLoggingShim.scala:32)
    at scala.util.DynamicVariable.withValue(DynamicVariable.scala:62)
    at com.databricks.logging.AttributionContext$.withValue(AttributionContext.scala:94)
    at com.databricks.logging.Log4jUsageLoggingShim$.withAttributionContext(Log4jUsageLoggingShim.scala:30)
    at com.databricks.logging.UsageLogging.withAttributionContext(UsageLogging.scala:283)
    at com.databricks.logging.UsageLogging.withAttributionContext$(UsageLogging.scala:282)
    at com.databricks.backend.daemon.data.client.DatabricksFileSystemV2.withAttributionContext(DatabricksFileSystemV2.scala:512)
    at com.databricks.logging.UsageLogging.withAttributionTags(UsageLogging.scala:318)
    at com.databricks.logging.UsageLogging.withAttributionTags$(UsageLogging.scala:303)
    at com.databricks.backend.daemon.data.client.DatabricksFileSystemV2.withAttributionTags(DatabricksFileSystemV2.scala:512)
    at com.databricks.logging.UsageLogging.recordOperationWithResultTags(UsageLogging.scala:502)
    at com.databricks.logging.UsageLogging.recordOperationWithResultTags$(UsageLogging.scala:422)
    at com.databricks.backend.daemon.data.client.DatabricksFileSystemV2.recordOperationWithResultTags(DatabricksFileSystemV2.scala:512)
    at com.databricks.logging.UsageLogging.recordOperation(UsageLogging.scala:413)
    at com.databricks.logging.UsageLogging.recordOperation$(UsageLogging.scala:385)
    at com.databricks.backend.daemon.data.client.DatabricksFileSystemV2.recordOperation(DatabricksFileSystemV2.scala:512)
    at com.databricks.backend.daemon.data.client.DBFSV2.listStatusAsIterator(DatabricksFileSystemV2.scala:178)
    at com.databricks.tahoe.store.EnhancedDatabricksFileSystemV2.listStatus(EnhancedFileSystem.scala:350)
    at com.databricks.tahoe.store.AzureLogStore.listFrom(AzureLogStore.scala:36)
    at com.databricks.tahoe.store.DelegatingLogStore.$anonfun$listFrom$1(DelegatingLogStore.scala:142)
    at com.databricks.spark.util.FrameProfiler$.record(FrameProfiler.scala:80)
    at com.databricks.tahoe.store.DelegatingLogStore.listFrom(DelegatingLogStore.scala:142)
    at com.databricks.sql.transaction.tahoe.util.DeltaFileOperations$.list$1(DeltaFileOperations.scala:164)
    at com.databricks.sql.transaction.tahoe.util.DeltaFileOperations$.$anonfun$listUsingLogStore$4(DeltaFileOperations.scala:178)
    at scala.collection.Iterator$$anon$11.nextCur(Iterator.scala:486)
    at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:492)
    at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:491)
    at scala.collection.Iterator$ConcatIterator.advance(Iterator.scala:199)
    at scala.collection.Iterator$ConcatIterator.hasNext(Iterator.scala:227)
    at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:490)
    at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:513)
    at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:513)
    at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:513)
    at scala.collection.Iterator.foreach(Iterator.scala:943)
    at scala.collection.Iterator.foreach$(Iterator.scala:943)
    at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)
    at com.databricks.sql.fileNotification.autoIngest.FileEventBackfiller.runInternal(FileEventWorkerThread.scala:982)
    at com.databricks.sql.fileNotification.autoIngest.FileEventBackfiller.run(FileEventWorkerThread.scala:910)
```

Labels: ACL, Delta Live Tables, DLT, Forbidden

Accepted Solution


11-15-2022 01:54 AM

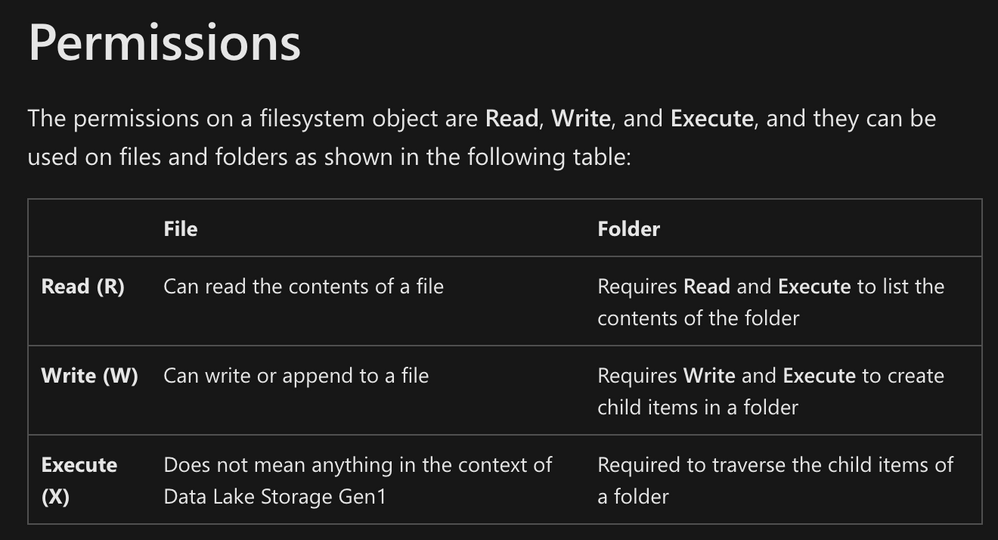

Hi @Amine HADJ-YOUCEF, as the error says, the LISTSTATUS call failed, so the READ permission might be missing. Can you check and let us know which permissions are set?

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-access-control#permissions
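To check which permissions are missing, it can help to find exactly which paths fail. Per the linked ADLS Gen1 access-control page, listing a folder requires Read and Execute on that folder and Execute on each of its parent folders, so a single subfolder with a missing ACL is likely enough to make the whole recursive listing fail. Below is a minimal diagnostic sketch, assuming the data is reachable through a local filesystem path (for example a `/dbfs/...` FUSE path; that mount and the helper name `find_unlistable` are hypothetical, not taken from this thread). It walks the tree and separates readable files from paths that could not be listed or read.

```python
import os
from typing import List, Tuple


def find_unlistable(root: str) -> Tuple[List[str], List[str]]:
    """Split everything under `root` into readable files and failed paths.

    Hypothetical helper: a folder that cannot be listed (missing, or no
    permission) lands in the second list, similar to a failed LISTSTATUS.
    """
    readable: List[str] = []
    failed: List[str] = []

    def on_error(err: OSError) -> None:
        # os.walk calls this when listing a directory raises an OSError
        failed.append(err.filename if err.filename is not None else str(err))

    for dirpath, _dirnames, filenames in os.walk(root, onerror=on_error):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # os.access is only a quick probe; FUSE/network mounts may not honour it
            if os.access(path, os.R_OK):
                readable.append(path)
            else:
                failed.append(path)
    return sorted(readable), sorted(failed)
```

For example, `find_unlistable("/dbfs/***/data_files/PREVISIONS/")` would return the folders and files to report to whoever manages the ACLs (keep the elided part of the path as in your own setup). Note that the error message itself says the resource may simply not exist, which is why a missing path is reported the same way as a forbidden one.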

2 Replies



11-16-2022 05:17 AM

Yes, there are some files I don't have the right to access (by mistake).

In this case, how can I tell DLT to handle this exception and ignore those files, since I can read some files but not all?
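Nothing in this thread points to a DLT option for skipping files that fail an ACL check, so the cleanest fix is probably to correct the permissions or move those files out of the input folder. If that is not possible, one workaround for a batch read like the `spark.read` command in the question is to collect the files you can actually open first and pass only those to Spark, since `DataFrameReader.load` accepts a list of paths. The sketch below assumes a local `/dbfs/...` view of the data and a simple mapping from `/dbfs/` to `dbfs:/`; `readable_files`, `to_dbfs_uri` and `load_readable` are hypothetical helpers, and this does not cover the streaming source used inside the DLT pipeline itself.

```python
import os
from typing import List


def readable_files(local_root: str) -> List[str]:
    """Return files under `local_root` that can actually be opened (hypothetical helper)."""
    found: List[str] = []
    # By default os.walk silently skips directories it cannot list
    for dirpath, _dirnames, filenames in os.walk(local_root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # Opening the file is a stronger check than os.access on mounted storage
                with open(path, "rb"):
                    pass
            except OSError:
                continue  # skip files we are not allowed to read
            found.append(path)
    return sorted(found)


def to_dbfs_uri(local_path: str) -> str:
    """Map a /dbfs/... FUSE path to a dbfs:/... URI (assumed mount layout)."""
    prefix = "/dbfs/"
    if local_path.startswith(prefix):
        return "dbfs:/" + local_path[len(prefix):]
    return local_path


def load_readable(spark, local_root: str):
    """Batch-read only the accessible files; `spark` is an existing SparkSession."""
    paths = [to_dbfs_uri(p) for p in readable_files(local_root)]
    if not paths:
        raise FileNotFoundError(f"no readable files under {local_root}")
    # DataFrameReader.load accepts a list of paths, so only the files kept above are read
    return spark.read.format("csv").option("sep", ";").load(paths)
```

Usage would look like `df = load_readable(spark, "/dbfs/***/data_files/PREVISIONS/")`, with the elided part of the path filled in from your own environment. Because the file list is built once, files added later are not picked up, so this fits a one-off backfill better than an incremental pipeline.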
