Failed to launch pipeline cluster

12-01-2022 07:31 PM

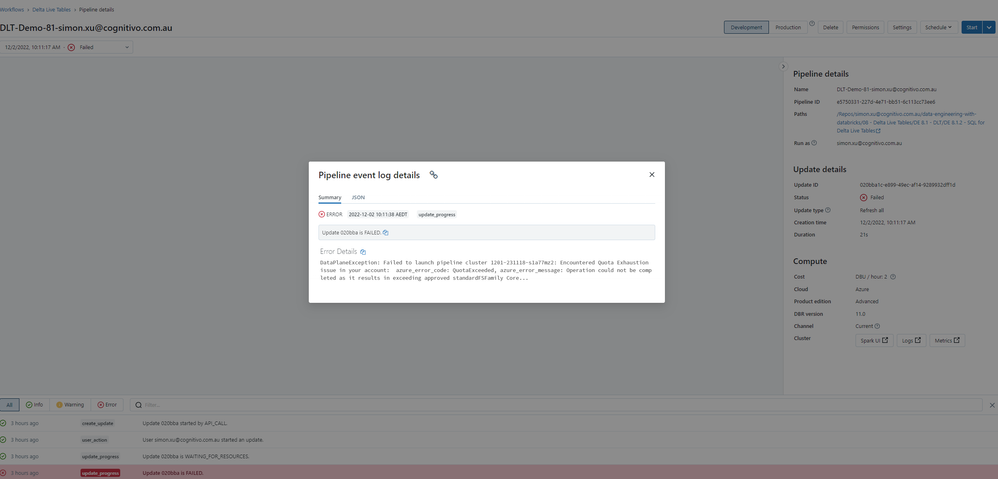

The error is "DataPlaneException: Failed to launch pipeline cluster 1202-031220-urn0toj0: Could not launch cluster due to cloud provider failures. azure_error_code: OperationNotAllowed, azure_error_message: Operation could not be completed as it results in exceeding approved standardFSF...".

However, when I checked my Azure subscription, it showed that I had more than enough quota. I don't know how to fix this issue, as I'm new to Delta Live Tables.

Labels: Cluster Failed To Launch, DLT Pipeline

1 ACCEPTED SOLUTION

Anonymous

12-02-2022 12:01 AM

Hi @Simon Xu,

You're not alone.

I encountered this issue before. The issue comes from the Azure side, not Databricks.

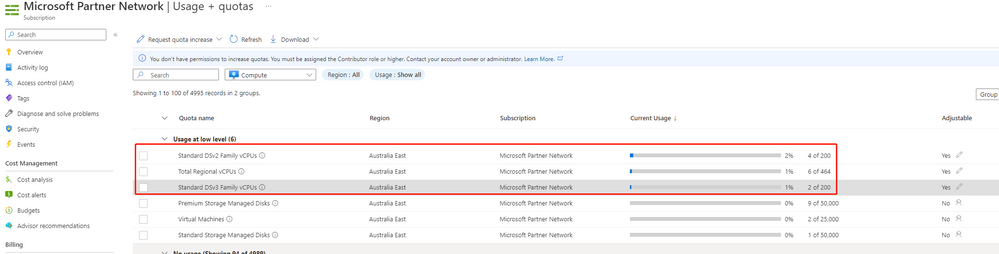

You need to check the number of cores, RAM, and CPUs in your Warehouse cluster, then compare them with the resources available in the Azure resource group that hosts the Databricks workspace. If you don't have enough resources, you need to increase the quota.

BR,

Jensen Nguyen
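The comparison described above comes down to simple arithmetic. The sketch below is an illustration only: the helper names `cores_needed` and `fits_quota` are hypothetical, the node size and counts are made up, and the quota figures have to be read from the Usage + quotas page of your own subscription. Keep in mind that Azure enforces a per-VM-family vCPU quota in addition to the total regional vCPU quota, so the check has to pass for the family of the VM size the cluster actually requests.

```python
def cores_needed(vcpus_per_node: int, num_workers: int) -> int:
    """vCPUs for one driver plus `num_workers` workers of the same VM size."""
    return vcpus_per_node * (num_workers + 1)


def fits_quota(needed: int, used: int, limit: int) -> bool:
    """True if `needed` additional vCPUs still fit under the quota limit."""
    return used + needed <= limit


# Assumed example: 8-vCPU nodes, one driver and one worker.
needed = cores_needed(vcpus_per_node=8, num_workers=1)

# Hypothetical quota readings: 4 of 10 vCPUs already in use.
print(needed, fits_quota(needed, used=4, limit=10))  # prints: 16 False
```

If the check fails, the options are to request a higher quota for that VM family or to choose a smaller node type.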

6 REPLIES

12-01-2022 11:53 PM

Hi @Simon Xu

I hope this thread might solve your issue.

Cheers

01-30-2023 03:18 PM

Thanks, Jensen. It works for me!

11-30-2023 07:32 AM

Unfortunately, I just encountered this error too and followed your solution, but it's still not working. My Usage + quotas page on Azure shows 4 of 10 cores in use (6 remaining), and the required Databricks compute is 4 cores. However, in my case I used a single node. I strongly suspect I have to switch to a multi-node cluster and then request an increase in cores from Azure. I'll be back with an update!

01-26-2023 11:20 AM

@Simon Xu

I suspect that DLT is trying to grab machine types for which you simply have zero quota in your Azure account. By default, the following machine types are requested behind the scenes for DLT:

AWS: c5.2xlarge

Azure: Standard_F8s

GCP: e2-standard-8

You can also set them explicitly from here.

Regards,

Arpit Khare

12-21-2023 02:26 AM

I followed @arpit's suggestion and set the cluster configuration explicitly in the JSON file, which solved the issue.
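For readers wondering what setting the cluster configuration explicitly can look like, here is a minimal sketch that builds the `clusters` section of a pipeline's JSON settings in Python. The node type `Standard_DS3_v2` and the worker count are assumptions chosen for illustration, not values from this thread, and `build_cluster_settings` is a hypothetical helper. Pick a VM size from a family for which your subscription actually has quota.

```python
import json


def build_cluster_settings(node_type_id: str, num_workers: int) -> dict:
    """Return a pipeline settings fragment that pins the VM size explicitly."""
    return {
        "clusters": [
            {
                "label": "default",  # applies to the pipeline's update cluster
                "node_type_id": node_type_id,  # worker VM size
                "driver_node_type_id": node_type_id,  # driver VM size
                "num_workers": num_workers,
            }
        ]
    }


# Assumed values for illustration only.
settings = build_cluster_settings("Standard_DS3_v2", num_workers=1)
print(json.dumps(settings, indent=2))
```

The printed fragment is meant to be merged into the existing pipeline settings JSON rather than to replace it; the other pipeline fields are left out here on purpose.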
