DataFrame takes an unusually long time to save as a Delta table using SQL for a very small dataset with 30k rows. It takes around 2 hours. Is there a solution for this problem?

12-12-2022 10:14 PM

I am trying to save a DataFrame to a Delta table after a series of data manipulations using UDFs. I tried using this code:

    (
        df
        .write
        .format('delta')
        .mode('overwrite')
        .option('overwriteSchema', 'true')
        .saveAsTable('output_table')
    )

but this takes more than 2 hours. So I registered the DataFrame as a local SQL temp view and tried saving it as a Delta table from that view. This worked for one of the notebooks (14 minutes), but for the other notebooks it also takes around 2 hours to write to the Delta table. I am not sure why this happens for such a small dataset. Any solution is appreciated.

code:

    df.createOrReplaceTempView("sql_temp_view")

    %sql
    DROP TABLE IF EXISTS default.output_version_2;
    create table default.output_version_2
    select * from sql_temp_view

Labels: Delta table, Long Time, Task Running Long

11 REPLIES

12-12-2022 10:43 PM

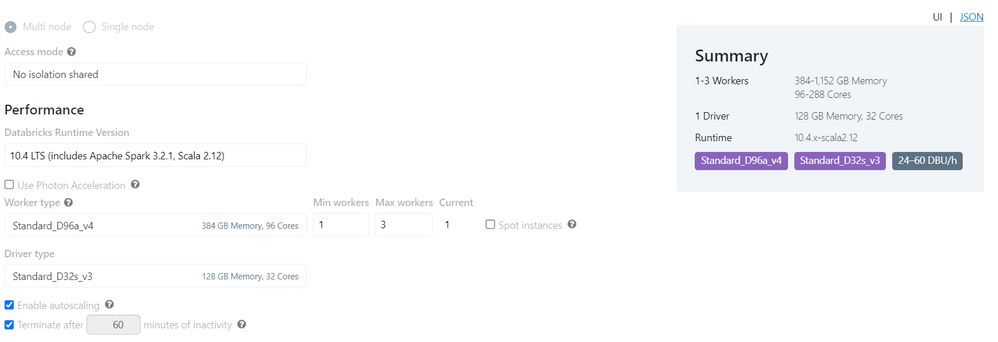

What cluster configuration are you using? Also, what sort of transformations are applied before the final DataFrame is created?

12-12-2022 10:59 PM

12-12-2022 11:23 PM

Can you also give the number of partitions the DataFrame has?

You can use df.rdd.getNumPartitions().

12-12-2022 11:45 PM

96 partitions

12-13-2022 02:23 AM

Since the dataset is very small, try reducing the number of partitions before you write, using repartition or coalesce.
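A minimal PySpark sketch of that suggestion follows. The helper names `target_partitions` and `shrink_partitions` and the 128 MB per-partition target are assumptions made for illustration, not something from the thread; `df` stands for the poster's DataFrame.

```python
import math


def target_partitions(data_size_mb, target_mb_per_partition=128):
    """Rough partition count for a dataset of the given size (at least 1).

    The 128 MB default is an assumed rule of thumb, not a Spark setting.
    """
    return max(1, math.ceil(data_size_mb / target_mb_per_partition))


def shrink_partitions(df, n):
    """Return df with at most n partitions.

    coalesce() merges existing partitions without a full shuffle and can
    only reduce the partition count, so df is returned unchanged when it
    already has n or fewer partitions.
    """
    current = df.rdd.getNumPartitions()
    return df.coalesce(n) if current > n else df


# Usage on a cluster (not executed here):
# n = target_partitions(35)   # 35 MB, the size reported in this thread
# (shrink_partitions(df, n)
#     .write.format("delta")
#     .mode("overwrite")
#     .option("overwriteSchema", "true")
#     .saveAsTable("output_table"))
```

Note that a drastic coalesce, such as down to 1 partition, can also pull the upstream work (including UDFs) into fewer tasks, because no shuffle separates the stages. If the transformations are the expensive part, `df.repartition(n)` adds a shuffle but lets the upstream stage keep its parallelism.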

01-04-2023 01:40 AM

I have a similar issue too. The table has 1 partition, and the only transformations applied are casts such as date and decimal(20, 2), using withColumn. The cluster has 5 worker nodes.

1,80,890 records take 10 minutes. How can I improve the performance, and how can I find where the time is being spent?

12-12-2022 11:31 PM

Hi @Suresh Kakarlapudi, what is your file size?

12-13-2022 01:00 AM

35 MB

01-04-2023 09:15 PM

Is your Databricks workspace set up with VNet injection, by any chance?

08-23-2023 01:35 PM

@Jfoxyyc I am having a similar problem and came across this post. Does VNet injection cause this? My workspace is set up that way.

08-24-2023 07:57 AM

You should also look at the SQL plan to check whether the write phase is really the part that takes the time. Since Spark uses lazy evaluation, some earlier phase might be the slow one.
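One way to check this, sketched below in PySpark, is to run the full plan against Spark's built-in `noop` sink (Spark 3.0+), which executes every transformation but discards the output, and compare that time with the real Delta write. The helper names `timed` and `time_compute_only` are hypothetical, and `df` stands for the DataFrame being saved.

```python
import time


def timed(action):
    """Run a zero-argument callable and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = action()
    return result, time.perf_counter() - start


def time_compute_only(df):
    """Execute df's full plan (including UDFs) but discard the output.

    Writing to the "noop" format forces evaluation without producing any
    files, so the elapsed time excludes the cost of writing Delta data.
    """
    _, seconds = timed(
        lambda: df.write.format("noop").mode("overwrite").save()
    )
    return seconds


# Usage on a cluster (not executed here):
# df.explain(mode="formatted")   # print the physical plan
# print("compute only:", time_compute_only(df), "seconds")
```

If the `noop` run takes nearly as long as the real write, the time is going into the transformations (for example Python UDFs) rather than into the Delta write itself.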
