Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- How i can add ADLS Gen2 - OAuth 2.0 as Cluster sco...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-04-2023 05:22 AM

Hi All,

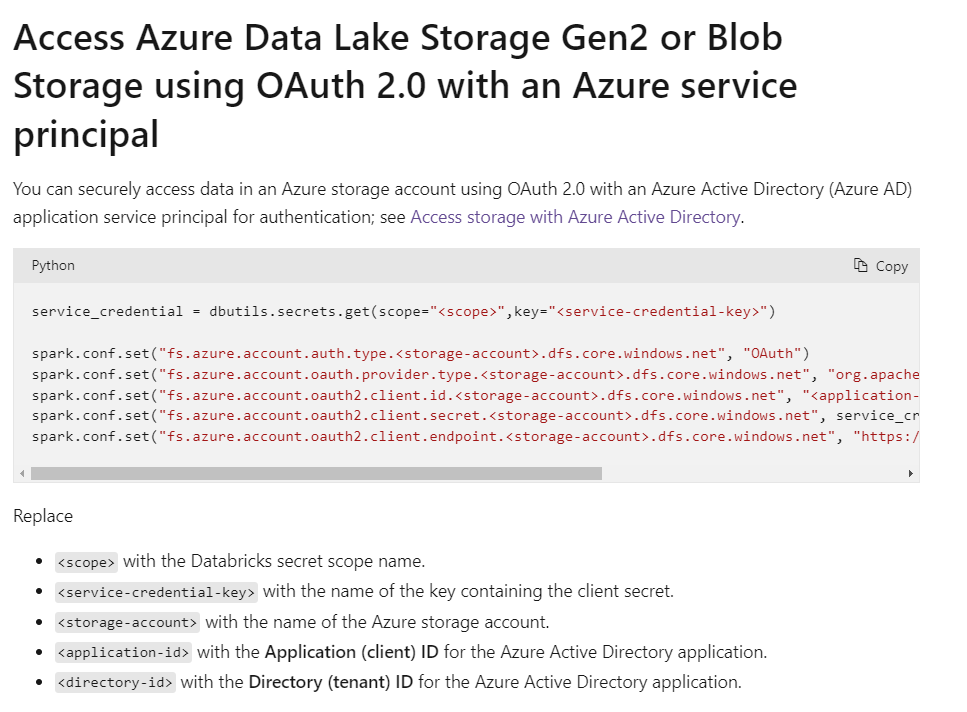

Kindly help me , how i can add the ADLS gen2 OAuth 2.0 authentication to my high concurrency shared cluster.

I want to scope this authentication to entire cluster not for particular notebook.

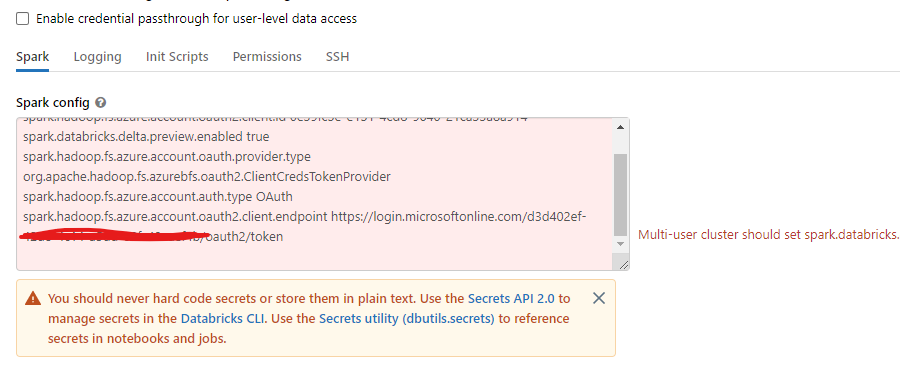

Currently i have added them as spark configuration of the cluster , by keeping my service principal credentials as Secrets. But still am getting this following warning.

Kindly advice me what's the better alternate secure solution.

Note: Am creating the cluster using Terraform

Regards,

Sunil

Labels:

1 ACCEPTED SOLUTION

Accepted Solutions

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-12-2023 10:06 AM

Yes , i have configured them in spark configuration.

But i am yet to configure in cluster policy as he recommended

9 REPLIES 9

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-04-2023 05:33 AM

It looks like you've removed some config entries from Spark Config that are required for multi-user cluster to work.

Try to only add the required config rather than overwriting.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-04-2023 07:19 AM

Thanks for the response @Daniel Sahal

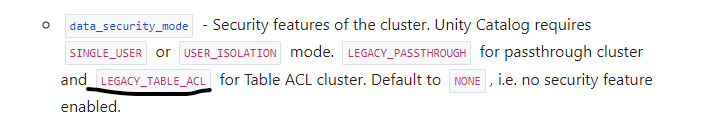

But that's not an issue , i have enabled the Access mode as Shared by setting this property for my highly concurrent cluster and its working

ADLS gen2 OAuth is also working.

But my question , is it secured or any other better option where i can store the Cluster level scope

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-04-2023 01:44 PM

Have you considered using session scopes instead of cluster scopes? I have a function stored at databricks. functions. azure. py that does this:

from pyspark.sql import SparkSession

def set_session_scope(scope: str, client_id: str, client_secret: str, tenant_id: str, storage_account_name: str, container_name: str) -> str:

"""Connects to azure key vault, authenticates, and sets spark session to use specified service principal for read/write to adls

Args:

scope: The azure key vault scope name

client_id: The key name of the secret for the client id

client_secret: The key name of the secret for the client secret

tenant_id: The key name of the secret for the tenant id

storage_account_name: The name of the storage account resource to read/write from

container_name: The name of the container resource in the storage account to read/write from

Returns:

Spark configs get set appropriately

abfs_path (string): The abfss:// path to the storage account and container

"""

spark = SparkSession.builder.getOrCreate()

try:

from pyspark.dbutils import DBUtils

dbutils = DBUtils(spark)

except ImportError:

import IPython

dbutils = IPython.get_ipython().user_ns["dbutils"]

client_id = dbutils.secrets.get(scope = scope, key = client_id)

client_secret = dbutils.secrets.get(scope = scope, key = client_secret)

tenant_id = dbutils.secrets.get(scope = scope, key = tenant_id)

spark.conf.set(f"fs.azure.account.auth.type.{storage_account_name}.dfs.core.windows.net", "OAuth")

spark.conf.set(f"fs.azure.account.oauth.provider.type.{storage_account_name}.dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")

spark.conf.set(f"fs.azure.account.oauth2.client.id.{storage_account_name}.dfs.core.windows.net", client_id)

spark.conf.set(f"fs.azure.account.oauth2.client.secret.{storage_account_name}.dfs.core.windows.net", client_secret)

spark.conf.set(f"fs.azure.account.oauth2.client.endpoint.{storage_account_name}.dfs.core.windows.net", f"https://login.microsoftonline.com/{tenant_id}/oauth2/token")

abfs_path = "abfss://" + container_name + "@" + storage_account_name + ".dfs.core.windows.net/"

return abfs_pathAnd its usage is like this:

from databricks.functions.azure import set_session_scope

# Set session scope and connect to abfss to read source data

client_id = "databricks-serviceprincipal-id"

client_secret = "databricks-serviceprincipal-secret"

tenant_id = "tenant-id"

storage_account_name = "your-storage-account-name"

container_name = "your-container-name"

folder_path = "" #path/to/folder/

abfs_path = set_session_scope(

scope = scope,

client_id = client_id,

client_secret = client_secret,

tenant_id = tenant_id,

storage_account_name = storage_account_name,

container_name = container_name

)

file_list = dbutils.fs.ls(abfs_path + folder_path)Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-05-2023 03:25 AM

Thanks for the response. But in this case every time we have to execute this function right.

I am expecting something similar to Mount point (unfortunately -Databricks not recommends mount point for ADLS) , where at the time of cluster creation itself we will provide connection to our storage account.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-05-2023 03:42 AM

Yes, the approach to set it in the spark config you used is correct and according to best practices. Additionally, you can put it in cluster policy so it will be for all clusters.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-05-2023 04:49 AM

thanks hubert... could you kindly guide , how i can add that in the cluster policy ?

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-05-2023 01:29 AM

- error is because of missing default settings (create new cluster and do not remove them),

- the warning is because secrets should be put in secret scope, and then you should reference secrets in settings

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-11-2023 07:10 AM

Hi @Sunilprasath Elangovan , We haven’t heard from you since the last response from @Hubert Dudek, and I was checking back to see if his suggestions helped you.

Or else, If you have any solution, please do share that with the community as it can be helpful to others.

Also, Please don't forget to click on the "Select As Best" button whenever the information provided helps resolve your question.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-12-2023 10:06 AM

Yes , i have configured them in spark configuration.

But i am yet to configure in cluster policy as he recommended

Announcements

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Permission denied using patchelf in Administration & Architecture

- Errors When Using R on Unity Catalog Clusters in Data Engineering

- Connecting from Databricks to Network Path in Data Engineering

- Databricks Connection to Redash in Data Engineering

- Databricks SDK for Python: Errors with parameters for Statement Execution in Data Engineering