How to delete duplicate tables?

01-10-2023 05:08 PM

Hi Everyone,

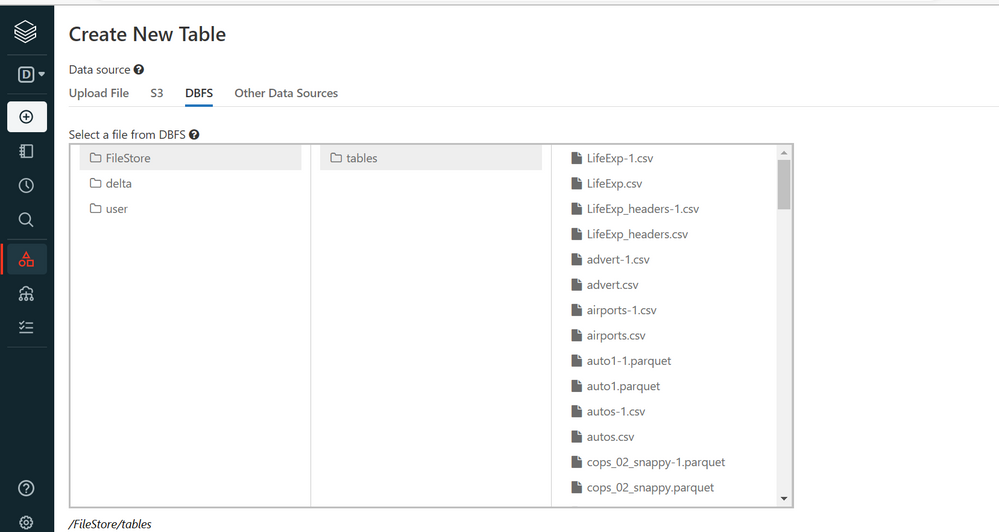

I accidentally imported duplicate tables. Could you guide me on how to delete them? I am using Databricks Community Edition.

Labels: Tables

3 replies

01-10-2023 09:25 PM

Hi @Mahesh Babu Uppala,

You can use the command below to delete a particular file:

```python
dbutils.fs.rm("path of the file")
```

If you want to delete an entire directory, including its sub-directories and files, pass `True` as the second argument to delete it recursively:

```python
dbutils.fs.rm("path of the folder", True)
```

After executing either command, you will get the output below, confirming that the file or directory was deleted successfully:

`Boolean = true`

Note that this removes files only; a table that is registered in the metastore still has to be removed with `DROP TABLE`.

Happy Learning!!
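To see why the second argument to `dbutils.fs.rm` matters, here is a local-filesystem analog in plain Python. It is an assumption-labeled illustration only: it uses `os` and `shutil` on local disk, not `dbutils` or DBFS, and `remove_path` is a hypothetical helper. On local disk, deleting a non-empty directory without recursion raises an error, while the recursive branch removes the whole tree.

```python
import os
import shutil


def remove_path(path: str, recurse: bool = False) -> bool:
    """Hypothetical local analog of dbutils.fs.rm(path, recurse).

    Works on the local filesystem only (os/shutil), not on DBFS.
    """
    if os.path.isdir(path):
        if recurse:
            # Delete the directory together with all sub-directories and files
            shutil.rmtree(path)
        else:
            # Only succeeds for an empty directory; raises OSError otherwise
            os.rmdir(path)
    else:
        # A single file
        os.remove(path)
    return True
```

For example, `remove_path("some_folder")` fails if the folder still contains files, whereas `remove_path("some_folder", recurse=True)` deletes it and everything inside.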

01-11-2023 06:30 AM

Hi @Mahesh Babu Uppala,

You can use the following method to delete only the duplicate tables:

```scala
%scala
// Register the output of SHOW TABLES (current database) as a temporary view
spark.sql("SHOW TABLES").createOrReplaceTempView("tables")

// Keep only the table names containing "-1", the marker the duplicates carry here
val dupTables = spark.sql("""SELECT tableName FROM tables WHERE tableName LIKE '%-1%'""")

// Print one DROP statement per duplicate; backticks are needed because "-" is not valid in a bare identifier
dupTables.collect().foreach(row => println(s"DROP TABLE IF EXISTS `${row.getString(0)}`;"))
```

You will get the SQL commands as strings. You can either copy the cell output into another cell and run it directly, or automate the process by storing the output in a variable and executing each command in a loop. The better way is simply to copy the output and execute it, since that lets you review the list before anything is dropped.
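The loop-based option mentioned above could be sketched in Python (PySpark) as follows. This is a hypothetical helper, not part of any Databricks API: `build_drop_statements` and `drop_duplicate_tables` are illustrative names, the `-1` marker is copied from the Scala filter (a plain substring match, so a name such as `orders-10` would also match), and `execute` is whatever runs SQL, typically `spark.sql` in a notebook. Keeping execution behind a parameter makes it easy to print the statements for review before running them.

```python
from typing import Callable, Iterable, List

# Assumption: duplicate tables are the ones whose names contain "-1",
# mirroring the LIKE '%-1%' filter in the Scala snippet (a substring match).
DUPLICATE_MARKER = "-1"


def build_drop_statements(table_names: Iterable[str]) -> List[str]:
    """Return one DROP TABLE statement for each name containing the marker."""
    statements = []
    for name in table_names:
        if DUPLICATE_MARKER in name:
            # Backtick-quote the name ("-" is not allowed in a bare identifier);
            # a literal backtick inside the name is escaped by doubling it.
            quoted = "`" + name.replace("`", "``") + "`"
            statements.append(f"DROP TABLE IF EXISTS {quoted}")
    return statements


def drop_duplicate_tables(
    table_names: Iterable[str], execute: Callable[[str], object]
) -> List[str]:
    """Pass each generated statement to `execute` (e.g. spark.sql) and return them."""
    statements = build_drop_statements(table_names)
    for statement in statements:
        execute(statement)
    return statements
```

In a Databricks notebook, a call could look like `drop_duplicate_tables([row.tableName for row in spark.sql("SHOW TABLES").collect()], spark.sql)`. Printing `build_drop_statements(...)` on the same list first gives the review-before-running workflow recommended above.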

Hope this helps. Cheers!

01-16-2023 07:07 AM

Hi @Mahesh Babu Uppala (Customer), we haven't heard back from you since the last responses from @Uma Maheswara Rao Desula and @Ratna Chaitanya Raju Bandaru, and I was checking back to see whether their suggestions helped you.

Otherwise, if you have found a solution, please share it with the community, as it may help others.

Also, please don't forget to click the "Select As Best" button whenever the information provided helps resolve your question.
