
Cannot install com.microsoft.azure.kusto:kusto-spark


01-26-2023 05:24 AM

Hello,

I'm trying to install/update the library com.microsoft.azure.kusto:kusto-spark_3.0_2.12:3.1.x.

I've tried installing it from the Maven Central repository and with Terraform.

It worked previously, but now the installation always fails with this error:

│ Error: cannot create library: mvn:com.microsoft.azure.kusto:kusto-spark_3.0_2.12:3.1.7 failed: Library installation attempted on the driver node of cluster ...... and failed. Please refer to the following error message to fix the library or contact Databricks support. Error Code: DRIVER_LIBRARY_INSTALLATION_FAILURE. Error Message: java.util.concurrent.ExecutionException: java.io.FileNotFoundException: File file:/local_disk0/tmp/clusterWideResolutionDir/maven/ivy/jars/io.netty_netty-transport-native-kqueue-4.1.59.Final.jar does not exist

I'm not sure whether this is a Databricks, Maven, or library issue.

Installing other libraries, e.g. com.microsoft.azure:azure-eventhubs-spark_2.12:2.3.22, works.

Cluster configuration:

{
  "autoscale": {
    "min_workers": 1,
    "max_workers": 4
  },
  "cluster_name": "....",
  "spark_version": "11.3.x-scala2.12",
  "spark_conf": {
    "spark.locality.wait": "1800s"
  },
  "azure_attributes": {
    "first_on_demand": 1,
    "availability": "ON_DEMAND_AZURE",
    "spot_bid_max_price": -1
  },
  "node_type_id": "Standard_F8s",
  "driver_node_type_id": "Standard_F8s",
  "ssh_public_keys": [],
  "custom_tags": {},
  "cluster_log_conf": {
    "dbfs": {
      "destination": "dbfs:/cluster-logs"
    }
  },
  "spark_env_vars": {},
  "autotermination_minutes": 0,
  "enable_elastic_disk": true,
  "cluster_source": "UI",
  "init_scripts": [],
  "cluster_id": "....."
}

Thanks for helping

Labels: LIBRARY INSTALLATION FAILURE, Maven

5 replies


01-26-2023 05:58 AM

Starting with 11.3, library installation has changed: it used to run in the control plane and now runs in the data plane, so installation is done entirely on the cluster. Perhaps, because of routing settings in your Azure environment, your cluster doesn't have access to https://repo.maven.apache.org/. (Previously, installation ran in the control plane, so there was no problem because routing went through the Databricks side, but that architecture was not correct. That's why it was changed.)
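To test the routing hypothesis directly, you could run a quick reachability check from a notebook attached to the affected cluster. This is a minimal sketch using only the Python standard library; `can_reach` is a hypothetical helper name, and treating any HTTP status (even an error status) as "reachable" is an assumption about what counts as network access. A notebook cell only exercises the driver node, which is where the installation error above was reported.

```python
import urllib.error
import urllib.request

# Maven Central, the repository mentioned in the reply above.
MAVEN_CENTRAL = "https://repo.maven.apache.org/maven2/"


def can_reach(url: str, timeout: float = 10.0) -> bool:
    """Return True if an HTTP(S) HEAD request to `url` gets any response.

    Any HTTP status (even 404) counts as reachable, because it proves the
    network path works. DNS failures, refused connections, timeouts and
    malformed URLs count as unreachable.
    """
    try:
        # Building the Request inside the try: a malformed URL raises ValueError here.
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with an error status, so it is reachable.
        return True
    except (OSError, ValueError):
        # URLError, socket timeouts and connection errors are all OSError subclasses.
        return False
```

If `can_reach(MAVEN_CENTRAL)` returns False on the cluster, that would support the routing explanation; True would suggest the cause lies elsewhere.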


01-26-2023 07:00 AM

But then I wouldn't be able to install anything else, since other libraries are installed from the same repository (e.g. com.microsoft.azure:azure-eventhubs-spark_2.12:2.3.22).


02-01-2023 01:41 AM

I have the same problem with a slightly different version of the connector (3.1.6 instead of 3.1.7). I have a job that runs every hour, and this started happening on January 23rd. The error message is the same:

Run result unavailable: job failed with error message

Library installation failed for library due to user error for maven { coordinates: "com.microsoft.azure.kusto:kusto-spark_3.0_2.12:3.1.6" }. Error messages:

Library installation attempted on the driver node of cluster 0123-210011-798kk68l and failed. Please refer to the following error message to fix the library or contact Databricks support. Error Code: DRIVER_LIBRARY_INSTALLATION_FAILURE. Error Message: java.util.concurrent.ExecutionException: java.io.FileNotFoundException: File file:/local_disk0/tmp/clusterWideResolutionDir/maven/ivy/jars/io.netty_netty-transport-native-kqueue-4.1.59.Final.jar does not exist

I've tried installing "io.netty:netty-transport-native-kqueue:4.1.59.Final" from Maven and it worked. However, installing "com.microsoft.azure.kusto:kusto-spark_3.0_2.12:3.1.6" then fails with the following error, which points to a different JAR file:

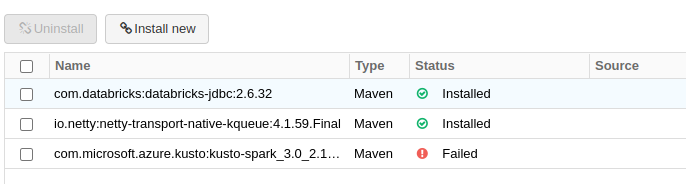

Library installation attempted on the driver node of cluster 0131-110547-yen1qo6z and failed. Please refer to the following error message to fix the library or contact Databricks support. Error Code: DRIVER_LIBRARY_INSTALLATION_FAILURE. Error Message: java.util.concurrent.ExecutionException: java.io.FileNotFoundException: File file:/local_disk0/tmp/clusterWideResolutionDir/maven/ivy/jars/io.netty_netty-resolver-dns-native-macos-4.1.59.Final.jar does not exist

I can also confirm that other libraries can be installed via Maven, e.g. "com.databricks:databricks-jdbc:2.6.32" (see screenshot below).


02-01-2023 01:57 AM

Hello @Pedro Heleno Isolani,

My temporary workaround is to manually install the missing libraries/JARs:

- io.netty:netty-transport-native-kqueue:4.1.59.Final
- io.netty:netty-resolver-dns-native-macos:4.1.59.Final
- io.netty:netty-transport-native-epoll:4.1.59.Final
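The thread doesn't say how the JARs were installed manually. As one way to script the same workaround, the sketch below adds the three netty artifacts to a cluster through the Databricks Libraries REST API (`POST /api/2.0/libraries/install`). The workspace host, personal access token and cluster ID are placeholders you would supply; `build_install_payload` and `install_libraries` are hypothetical helper names, and the sketch does no retrying or status polling.

```python
import json
import urllib.request
from typing import Dict, List

# The three netty artifacts from the workaround list above.
MISSING_NETTY_JARS = [
    "io.netty:netty-transport-native-kqueue:4.1.59.Final",
    "io.netty:netty-resolver-dns-native-macos:4.1.59.Final",
    "io.netty:netty-transport-native-epoll:4.1.59.Final",
]


def build_install_payload(cluster_id: str, coordinates: List[str]) -> Dict:
    """Request body for the Libraries API: one Maven library per coordinate."""
    return {
        "cluster_id": cluster_id,
        "libraries": [{"maven": {"coordinates": c}} for c in coordinates],
    }


def install_libraries(host: str, token: str, payload: Dict) -> int:
    """POST the payload to the workspace's libraries/install endpoint.

    `host` is the workspace URL and `token` a personal access token; both are
    placeholders, not values from the thread. Returns the HTTP status code;
    non-2xx responses raise urllib.error.HTTPError.
    """
    request = urllib.request.Request(
        host.rstrip("/") + "/api/2.0/libraries/install",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + token,
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

For example, `install_libraries("https://<workspace-host>", "<token>", build_install_payload("<cluster-id>", MISSING_NETTY_JARS))` would request the three JARs; kusto-spark itself would then be installed as before. Whether installation order matters is not established in this thread.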

The main reason for the error is that, during installation of the kusto-spark library, the netty* JARs are downloaded under different file names, e.g.:

netty-transport-native-kqueue-4.1.59.Final-osx-x86_64.jar
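To make the file-name mismatch concrete: the paths in the error messages follow an `organisation_artifact-version.jar` pattern, while the downloaded file carries a Maven classifier (`osx-x86_64`) in its name. The sketch below reproduces both names and, going the other way, turns a missing file name from an error message into the `group:artifact:version` coordinate to install by hand. The naming pattern is inferred from the error messages in this thread, not from Databricks documentation, and the helper names are hypothetical; the version-splitting regex is a heuristic that assumes the version is the last hyphen-separated part starting with a digit.

```python
import re
from typing import Optional


def maven_jar_name(artifact: str, version: str, classifier: Optional[str] = None) -> str:
    """Maven repository file name: artifactId-version[-classifier].jar."""
    suffix = "-" + classifier if classifier else ""
    return f"{artifact}-{version}{suffix}.jar"


def expected_ivy_jar_name(org: str, artifact: str, version: str) -> str:
    """File name the installer looked for, per the error: org_artifact-version.jar."""
    return f"{org}_{artifact}-{version}.jar"


# Heuristic: organisation up to the first underscore; the version is the last
# hyphen-separated part that starts with a digit.
_IVY_NAME = re.compile(r"^(?P<org>[^_]+)_(?P<artifact>.+)-(?P<version>\d[^-]*)\.jar$")


def coordinates_from_missing_jar(file_name: str) -> str:
    """Turn a missing file name from the error message into group:artifact:version."""
    match = _IVY_NAME.match(file_name)
    if match is None:
        raise ValueError(f"unrecognised jar name: {file_name}")
    return f"{match['org']}:{match['artifact']}:{match['version']}"
```

Applied to the two missing files reported in this thread, `coordinates_from_missing_jar` yields `io.netty:netty-transport-native-kqueue:4.1.59.Final` and `io.netty:netty-resolver-dns-native-macos:4.1.59.Final`, the first two entries of the workaround list.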

BR


02-01-2023 02:13 AM

Thanks @blackcoffee AR! The workaround did work for me!
