Large MERGE Statements - 500+ lines of code!

01-26-2023 06:20 AM

I'm new to Databricks (though not new to databases: I have 10+ years as a DB developer).

How do you generate a MERGE statement in Databricks?

Manually maintaining a MERGE statement of 500+ or 1000+ lines doesn't make much sense. I'm working with large tables of 200 to 500 columns.

Labels: MERGE Statement

1 ACCEPTED SOLUTION

01-27-2023 06:13 AM

I don't know about the order.

However, I always prepare the incoming data so that it has the same schema as the target. This makes merges easy. You indeed do not want to tinker around in a merge statement and type out tons of columns.

Using scala/python it is practically always possible to prepare your data.

Takes some time to learn, but it is worth it.

10 REPLIES

01-26-2023 08:40 AM

In my opinion, the MERGE condition should use the primary key when possible. If that isn't possible, you can create your own unique key (by concatenating some fields and optionally hashing them) and then use it in the merge logic.
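The concatenate-and-hash idea above could be sketched roughly like this. The helper only builds a Spark SQL expression string; `surrogate_key_expr` and the column names are hypothetical, while `sha2`, `concat_ws`, `coalesce` and `cast` are standard Spark SQL functions. Treat it as a starting point under those assumptions, not a definitive recipe.

```python
from typing import Sequence


def surrogate_key_expr(columns: Sequence[str], sep: str = "||") -> str:
    """Build a Spark SQL expression that hashes several columns into one key.

    Hypothetical helper: the name and the separator default are illustrative.
    """
    if not columns:
        raise ValueError("at least one column is required")
    # Cast each column to string and replace NULL with '' so that a NULL value
    # still occupies its position (concat_ws would otherwise skip it).
    parts = ", ".join(f"coalesce(cast(`{c}` as string), '')" for c in columns)
    return f"sha2(concat_ws('{sep}', {parts}), 256)"


# Example with made-up column names.
print(surrogate_key_expr(["customer_id", "order_date"]))
```

In a notebook the resulting string could be passed to something like `df.withColumn("merge_key", F.expr(...))` on both source and target, so the MERGE condition compares a single column.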

01-26-2023 08:48 AM

Thanks.. but that's not really what I'm asking...

How many columns can a MERGE statement manage, before maintenance becomes a nightmare?

01-26-2023 08:54 AM

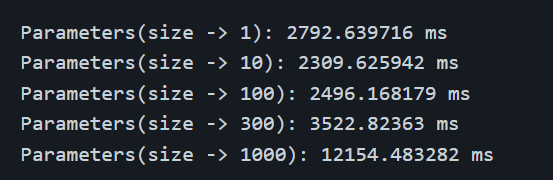

It was tested with up to 4000 columns. Here are tests with up to 1000 columns after the update in December 2021: https://github.com/delta-io/delta/pull/584

Also, remember that stats are precalculated only for the first 32 columns (you can raise that number in settings). So it helps to place the fields used in your merge conditions among the first 32 columns.
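A quick way to check the point about the first 32 columns is to compare the merge keys against the table's column order. The two helpers below are hypothetical names and plain Python; in a notebook the column list would typically come from something like `spark.table("...").columns`. As far as I know the limit is controlled by the `delta.dataSkippingNumIndexedCols` table property, but verify that against the Delta documentation for your runtime.

```python
from typing import List, Sequence


def keys_outside_stats(
    columns: Sequence[str], keys: Sequence[str], indexed: int = 32
) -> List[str]:
    """Return the merge keys that are NOT among the first `indexed` columns."""
    covered = set(columns[:indexed])
    return [k for k in keys if k not in covered]


def keys_first(columns: Sequence[str], keys: Sequence[str]) -> List[str]:
    """Return the column order with the merge keys moved to the front."""
    key_set = set(keys)
    return list(keys) + [c for c in columns if c not in key_set]


# Made-up wide table: 40 payload columns followed by the key column.
cols = [f"c{i}" for i in range(40)] + ["key1"]
print(keys_outside_stats(cols, ["key1"]))  # keys that miss the stats window
print(keys_first(cols, ["key1"])[:3])      # proposed order, keys leading
```

The reordered list could then be used in a `select` when the table is first written, so the keys land inside the range that gets statistics.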

01-27-2023 12:19 AM

Interesting...I had not yet considered the performance issues. I didn't think there would be any .. will clearly need to come back to this one... 🙂

I'm currently only concerned with the maintenance of a piece of code (the 500+ lines of the MERGE statement). Do you just "eyeball" the changes and hope for the best, or is there a more structured way to maintain large MERGE statements?

01-27-2023 05:31 AM

I am still wondering what you mean by 500+ lines of code for a merge.

Do you mean the list of columns which should be updated?

If you want to update a subset of columns that can become cumbersome indeed. But with some coding in scala/python you can create a list of column names which you can then pass to the query.

If you want to update all columns, use *:

    MERGE INTO table
    USING updates
    ON table.RegistrationYear IN ($yearlist) AND  -- $yearlist: placeholder filled in before the query runs
       table.key1 = updates.key1 AND
       table.key2 = updates.key2 AND
       table.key3 = updates.key3
    WHEN MATCHED THEN
      UPDATE SET *
    WHEN NOT MATCHED THEN
      INSERT *

01-27-2023 05:57 AM

Yes, I mean the list of columns becomes large. To then maintain a MERGE statement could be very cumbersome.

Would you happen to have an example of such Python code? This actually makes sense: if such a list were generated dynamically, it could then be used for the UPDATE/INSERT clauses.

01-27-2023 06:01 AM

I don't have an example at hand, but df.columns gives you all the columns of the table (once it is loaded as a DataFrame, of course). Depending on the case you can then drop columns or keep just a few, and use the resulting list to build the merge.

TBH I never did that though, I always use update * to avoid the hassle.
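For the case where `UPDATE SET *` is not an option, here is a minimal sketch of generating the statement from a column list instead of typing it out. `build_merge_sql`, the aliases `t`/`s`, and the table and column names are all hypothetical; the column list is assumed to come from something like `spark.table("target").columns`, and the resulting string would be run with `spark.sql(...)`.

```python
from typing import Iterable, Sequence


def build_merge_sql(
    target: str,
    source: str,
    columns: Sequence[str],
    keys: Sequence[str],
    exclude: Iterable[str] = (),
) -> str:
    """Generate a MERGE statement with explicit column lists (hypothetical helper)."""
    missing = [k for k in keys if k not in columns]
    if missing:
        raise ValueError(f"key columns not in column list: {missing}")
    skip = set(exclude)
    # Columns written on insert: everything except the excluded ones.
    insert_cols = [c for c in columns if c not in skip]
    # Columns written on update: the same list, minus the join keys.
    update_cols = [c for c in insert_cols if c not in keys]
    if not update_cols:
        raise ValueError("no columns left to update")

    on_clause = " AND ".join(f"t.`{k}` = s.`{k}`" for k in keys)
    set_clause = ",\n    ".join(f"t.`{c}` = s.`{c}`" for c in update_cols)
    insert_names = ", ".join(f"`{c}`" for c in insert_cols)
    insert_values = ", ".join(f"s.`{c}`" for c in insert_cols)

    return (
        f"MERGE INTO {target} AS t\n"
        f"USING {source} AS s\n"
        f"ON {on_clause}\n"
        f"WHEN MATCHED THEN UPDATE SET\n    {set_clause}\n"
        f"WHEN NOT MATCHED THEN INSERT ({insert_names})\n"
        f"  VALUES ({insert_values})"
    )


# Made-up names; in practice `columns` would be read from the target table.
print(build_merge_sql(
    "sales", "sales_updates",
    columns=["id", "name", "amount", "loaded_at"],
    keys=["id"],
    exclude=["loaded_at"],
))
```

The point of the design is that adding or dropping a column changes only the input list (or nothing at all, if the list is read from the table), so the 500+ lines never have to be edited by hand.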

01-27-2023 06:10 AM

Would UPDATE SET * not require the source and target columns to have the same names, and the same column order?

01-27-2023 06:13 AM

I don't know about the order.

However, I always prepare the incoming data so that it has the same schema as the target. This makes merges easy. You indeed do not want to tinker around in a merge statement and type out tons of columns.

Using scala/python it is practically always possible to prepare your data.

Takes some time to learn, but it is worth it.
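One way to "prepare the incoming data" could look like the sketch below: derive one select expression per target column, in target order, casting to the target type and filling columns the source lacks with a typed NULL. `align_to_target` is a hypothetical helper; the schema is assumed to come from the target DataFrame's `dtypes` (a list of name/type pairs), and the expressions would be applied with `incoming.selectExpr(*exprs)`. As far as I understand, Delta resolves `UPDATE SET *` and `INSERT *` by column name rather than by position, so matching names is what matters most here.

```python
from typing import List, Sequence, Tuple


def align_to_target(
    target_schema: Sequence[Tuple[str, str]],
    source_columns: Sequence[str],
) -> List[str]:
    """Build one select expression per target column, in target order."""
    available = set(source_columns)
    exprs = []
    for name, sql_type in target_schema:
        if name in available:
            # Present in the source: cast to the target's type.
            exprs.append(f"CAST(`{name}` AS {sql_type}) AS `{name}`")
        else:
            # Missing from the source: fill with a typed NULL.
            exprs.append(f"CAST(NULL AS {sql_type}) AS `{name}`")
    return exprs


# Made-up schema; source columns not in the target ("extra") are dropped.
schema = [("id", "bigint"), ("name", "string"), ("note", "string")]
for expr in align_to_target(schema, ["name", "id", "extra"]):
    print(expr)
```

Once the source has exactly the target's columns, the short `UPDATE SET *` / `INSERT *` form of the MERGE can be used regardless of how wide the table is.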

01-27-2023 06:24 AM

Thanks, this does make sense.

I have a new lead to chase .. 🙂

Much appreciated. 😊
