Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- Connecting to Snowflake using an SSO user from Azu...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Connecting to Snowflake using an SSO user from Azure Databricks

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-26-2023 05:33 PM

Hi all,

I'm just reaching out to see if anyone has information or can point me in a useful direction. I need to connect to Snowflake from Azure Databricks using the connector: https://learn.microsoft.com/en-us/azure/databricks/external-data/snowflake

The connector requires that users put their username and password in the connections details (or in a secret store), however these are the credentials for a Snowflake user and the Snowflake account is configured to use SSO with Azure as the IdP.

Looking through the documentation for the Snowflake JDBC connector to get the connector working when using SSO you need to configure:

.option("authenticator" , "externalbrowser")You can see that here: https://docs.snowflake.com/en/user-guide/admin-security-fed-auth-use.html#setting-up-browser-based-s...

And an example of configuring the connector with Spark is here: https://community.snowflake.com/s/article/How-to-connect-to-Snowflake-with-Spark-connector-via-SSO

When configuring the connector in this way I get indefinite hanging of the Spark job:

snowflake_table = (spark.read

.format("snowflake")

.option("dbtable", "blah")

.option("sfURL", "xxxxxxxx.snowflakecomputing.com/")

.option("sfUser", "***") #***** value

.option("sfPassword", "***") #***** value

.option("authenticator" , "externalbrowser")

.option("sfDatabase", "blah")

.option("sfSchema", "blah")

.option("sfWarehouse", "blah")

.load()

)With a message in the driver logs:

Initiating login request with your identity provider. A browser window should have opened for you to complete the login. If you can't see it, check existing browser windows, or your OS settings. Press CTRL+C to abort and try again...The browser window for logging in never actually surfaces.

I found a similar comment from 2020 describing the problem: https://stackoverflow.com/questions/64330577/connection-issue-databricks-snowflake#comment113795080_...

Is it still the case that Databricks doesn't support that method of authentication? Are there any alternatives or workarounds besides creating local non-SSO users in Snowflake?

Thanks

Labels:

- Labels:

-

Azure

-

Credential passthrough

-

Snowflake

-

Sso

5 REPLIES 5

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-31-2023 04:32 PM

The above question refers to SAML authentication for SSO in Snowflake with AAD.

I can see this isn't going to work without some proxying magic of the localhost session on the driver that serves the authentication redirect.

Since then, I tried to go down the external OAUTH route, again using AAD. One can configure an Application Registration in Azure AD and create the integration in Snowflake. The issue then becomes how does an end user get an OAUTH2 Access Token within a Databricks notebook session, since they will need to authenticate with the registration from within the running Databricks driver session.

Aside: Somehow Azure Databricks manages to create a valid OAUTH2 token for ADLS Gen1 and Gen2 with "aud": "https://storage.azure.com" for users when credential passthrough is enabled however I have no idea what the mechanism they use to do this is. I would love to read some technical documentation on how this mechanism works under the hood, how the refresh and access tokens are generated and where they are stored.

Looking at the list of authentication flows for OAUTH in msal, the only one that doesn't require a redirect flow (e.g. Authorization code) or credentials (e.g. Client credentials, ROPC) is Device code.

This involves running the following code in Databricks (the actual implementation can be abstracted away in a shared library) and authenticating in a different browser window / tab:

import msal

import logging

import json

import sys

config = {

"client_id": "0fedeef6-71c3-42e4-ba4e-d6e2b443bd17",

"authority": "https://login.microsoftonline.com/9c5da1da-3b7d-4eb6-a0db-b83ada116551",

"scope": ["api://5b427fec-4148-4dcb-b488-9006ef357fda/session:scope:analyst"]

}

app = msal.PublicClientApplication(

config["client_id"], authority=config["authority"],

)

result = None

accounts = app.get_accounts()

if accounts:

logging.info("Account(s) exists in cache, probably with token too. Let's try.")

print("Pick the account you want to use to proceed:")

for a in accounts:

print(a["username"])

chosen = accounts[0]

result = app.acquire_token_silent(config["scope"], account=chosen)

if not result:

logging.info("No suitable token exists in cache. Let's get a new one from AAD.")

flow = app.initiate_device_flow(scopes=config["scope"])

if "user_code" not in flow:

raise ValueError(

"Fail to create device flow. Err: %s" % json.dumps(flow, indent=4))

print(flow["message"])

sys.stdout.flush()

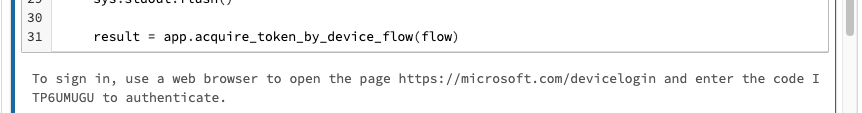

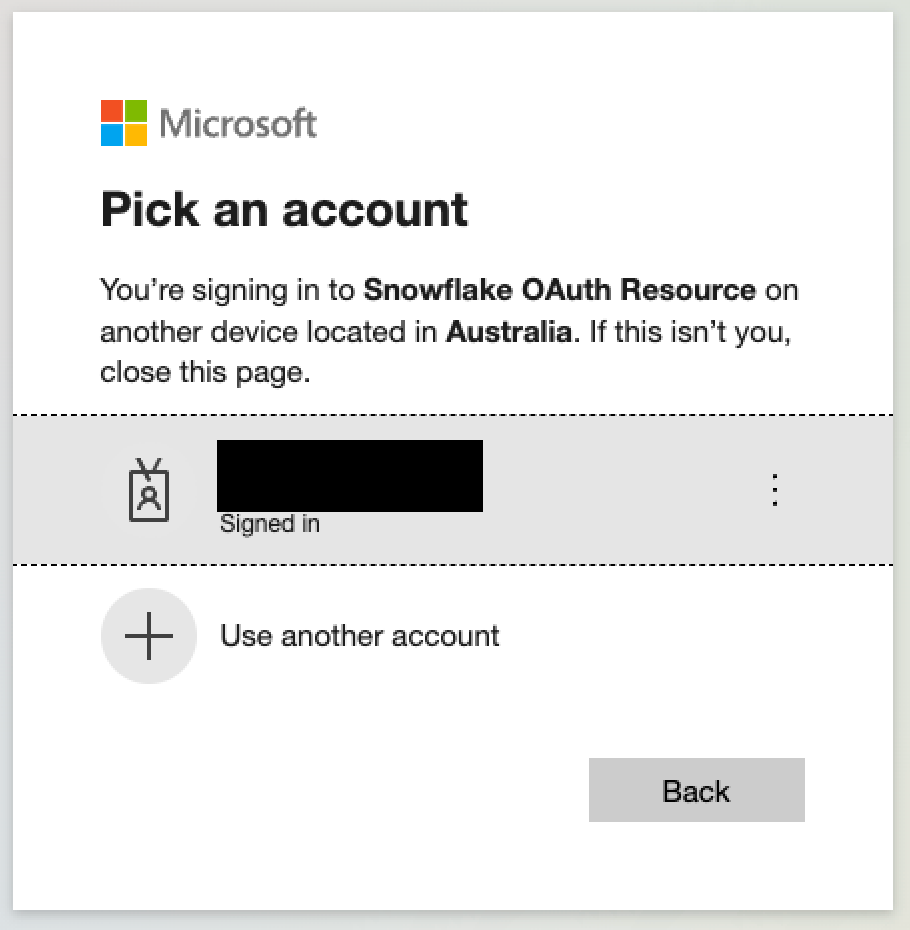

result = app.acquire_token_by_device_flow(flow)which presents the following message in the notebook cell:

snowflake_table1 = (spark.read

.format("snowflake")

.option("dbtable", "CALL_CENTER")

.option("sfURL", "xxxxxxxxxx.snowflakecomputing.com/")

.option("sfUser", "xxxxxxxxxxxxx")

.option("sftoken", result["access_token"])

.option("sfRole", "analyst")

.option("sfAuthenticator" , "oauth",)

.option("sfDatabase", "SNOWFLAKE_SAMPLE_DATA")

.option("sfSchema", "TPCDS_SF100TCL")

.option("sfWarehouse", "COMPUTE_WH")

.load()

)This approach is complex for end users and involves a convoluted authentication flow. Furthermore, if conditional access policies were introduced this method would no longer work:

To conclude: This is the closest I've come to authenticating to a Snowflake instance that uses AAD for authentication, however it still feels very far from SSO. Is this the only was to get OAUTH tokens to an application in the same tenancy as Databricks for the user logged into Databricks? How does credential passthrough achieve this? Is there anything else I can try?

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-07-2023 03:28 PM

@Alex Bush did you ever find a better solution to this issue? I have the same problem and want to know the best practice for connecting to Snowflake from a Databricks notebook with SSO.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-08-2023 06:17 PM

We had confirmation from Databricks that the approach detailed in my reply was the only workable approach for user-based SSO. We ended up replicating data across from Snowflake into Databricks in the end. Another approach is to use a service account etc, however this might break some data governance and audit policies if your org. requires such things.

Something else to consider is that if your query can be pushed down into Snowflake then it gets executed on the Snowflake side and the results returned via the connector. As soon as a user executes a query in Databricks that can't be pushed down (e.g., uses a UDF) then the data for every table referenced in that query is pulled through to Databricks and the query is executed on the Databricks side. This will have a very significant impact on query performance, therefore depending on your use-case, data volume, and frequency of Snowflake being queried you might want to consider replication.

https://docs.snowflake.com/en/user-guide/spark-connector-use#pushdown

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-13-2023 03:56 AM

For people stumbling on this question, I just want to say the Single Sign On (SSO) method now works with Databricks runtime version 11.2 or newer. This is as interactive input is now possible, thus the external browser option works.

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10-31-2023 12:33 PM

@ludgervisser Do you have an example or some documentation you can point to that shows how to configure this?

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- Unit Testing with the new Databricks Connect in Python in Data Engineering

- Databricks connecting SQL Azure DW - Confused between Polybase and Copy Into in Data Engineering

- Connecting from Databricks to Network Path in Data Engineering

- Databricks Connection to Redash in Data Engineering

- Databricks-connect OpenSSL Handshake failed on WSL2 in Data Engineering