I am running a parameterized autoloader notebook in a workflow.

The notebook is called 29 times in parallel, and Unity Catalog (UC) is enabled.

I am facing this error:

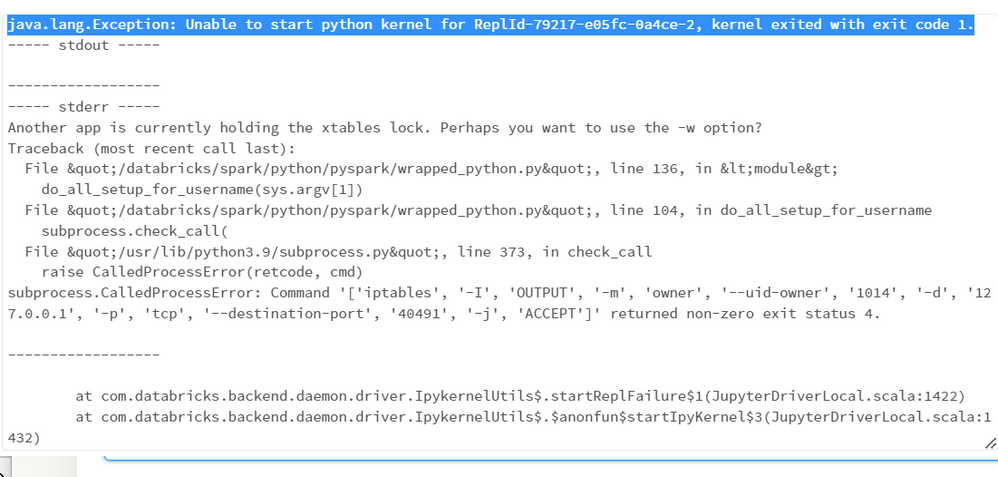

java.lang.Exception: Unable to start python kernel for ReplId-79217-e05fc-0a4ce-2, kernel exited with exit code 1.

----- stdout -----

------------------

----- stderr -----

Another app is currently holding the xtables lock. Perhaps you want to use the -w option?

Traceback (most recent call last):

File "/databricks/spark/python/pyspark/wrapped_python.py", line 136, in <module>

do_all_setup_for_username(sys.argv[1])

File "/databricks/spark/python/pyspark/wrapped_python.py", line 104, in do_all_setup_for_username

subprocess.check_call(

File "/usr/lib/python3.9/subprocess.py", line 373, in check_call

raise CalledProcessError(retcode, cmd)

subprocess.CalledProcessError: Command '['iptables', '-I', 'OUTPUT', '-m', 'owner', '--uid-owner', '1014', '-d', '127.0.0.1', '-p', 'tcp', '--destination-port', '40491', '-j', 'ACCEPT']' returned non-zero exit status 4.
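
The stderr output suggests that several Python kernels starting at the same moment each ran `iptables`, and one of them could not obtain the xtables lock. One workaround worth testing is to cap how many notebook runs start at once. This is a minimal sketch, not a confirmed fix. It assumes the 29 runs can be launched from a single driver notebook instead of 29 parallel workflow tasks. `run_with_limit`, `run_one`, `max_workers`, and `stagger_seconds` are hypothetical names introduced here for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_with_limit(run_one, param_sets, max_workers=4, stagger_seconds=0.0):
    """Call run_one(params) for each entry, at most max_workers at a time.

    Results come back in the same order as param_sets.
    """
    def staggered(index, params):
        # Delay only the first wave so the initial starts do not coincide.
        if index < max_workers:
            time.sleep(index * stagger_seconds)
        return run_one(params)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(staggered, i, p) for i, p in enumerate(param_sets)]
        # result() re-raises any exception from the corresponding run.
        return [f.result() for f in futures]


# In a Databricks driver notebook, run_one might wrap dbutils.notebook.run.
# The path, timeout, and arguments below are placeholders:
# run_one = lambda p: dbutils.notebook.run("/path/to/autoloader_notebook", 3600, p)
```

With `max_workers=4`, no more than four child notebooks are starting or running at any time, which should reduce contention during kernel startup. Whether that is enough to avoid the lock error depends on the cluster.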