Incorrect reading of CSV format with inferSchema


01-31-2023 09:16 PM

Hi All,

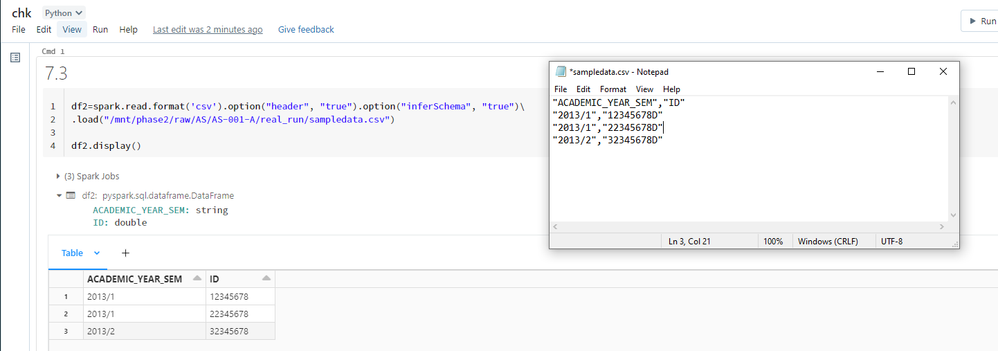

I have a CSV file with an ID column whose values are eight digits followed by a "D".

When I read the CSV with .option("inferSchema", "true"), the ID column comes back as a double and the trailing "D" is dropped. Apart from setting inferSchema to false, is there any way to get the correct result? Thanks for your help!

I also tried the options below, which failed as well:

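```python
# path is a placeholder for the location of the CSV file
df = (spark.read
      .options(delimiter=",", sep=",", header=True, inferSchema=True,
               multiline=True, quote="\"", escape="\"")
      .csv(path))
```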


01-31-2023 11:17 PM

Hi @tracy ng

By default, Spark infers any number ending in D or F as a double value.

I think you should contact Databricks about this.
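To see why the "D" disappears: as far as I can tell from the Spark source, CSV schema inference tries integer, long and decimal parsing first and then falls back to Java's Double.parseDouble (through Scala's String.toDouble), and Java accepts an optional d/D/f/F type suffix on floating-point literals. The sketch below is a simplified Python approximation of that rule, an assumption rather than Spark's or Java's code (hex literals, NaN and Infinity are left out); the helper names are hypothetical.

```python
import re

# Simplified approximation (an assumption, not Spark's or Java's code) of the
# decimal literals java.lang.Double.parseDouble accepts: optional sign, digits
# with an optional fraction and exponent, then an optional d/D/f/F suffix.
# Hex floats, "NaN" and "Infinity" are deliberately not covered.
_JAVA_DECIMAL = re.compile(r"[+-]?(\d+\.?\d*|\.\d+)([eE][+-]?\d+)?[dDfF]?")


def parses_as_java_double(value: str) -> bool:
    """Return True if `value` looks like a literal Double.parseDouble accepts."""
    # Java trims leading/trailing whitespace before parsing.
    return _JAVA_DECIMAL.fullmatch(value.strip()) is not None


def java_style_double(value: str) -> float:
    """Parse `value` the way Double.parseDouble would, dropping any type suffix."""
    if not parses_as_java_double(value):
        raise ValueError(f"not a Java floating-point literal: {value!r}")
    text = value.strip()
    if text[-1] in "dDfF":
        text = text[:-1]  # the suffix is discarded, which is where the "D" goes
    return float(text)
```

For example, java_style_double("12345678D") returns 12345678.0, which is the number the ID column ends up holding once inference has decided the column is a double.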


02-01-2023 06:49 PM

Thanks @Ajay Pandey.


02-01-2023 01:33 AM

One way to solve it is to specify the schema explicitly, with ID as a string.
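A minimal sketch of that approach that avoids writing the whole schema by hand, assuming an extra inference pass is acceptable: read the file once with inferSchema, take DataFrame.dtypes (a list of (name, type) pairs such as ("ID", "double")), and rebuild it as a DDL string with the chosen columns forced to string. DataFrameReader.schema() accepts a DDL string like this; the helper name ddl_with_string_overrides is hypothetical.

```python
from typing import Iterable, Set, Tuple


def ddl_with_string_overrides(dtypes: Iterable[Tuple[str, str]],
                              force_string: Set[str]) -> str:
    """Turn (column, type) pairs, e.g. from DataFrame.dtypes, into a DDL
    schema string, declaring the columns in `force_string` as string."""
    parts = []
    for name, dtype in dtypes:
        col_type = "string" if name in force_string else dtype
        # Backtick-quote names (doubling embedded backticks) so names with
        # spaces or punctuation remain valid DDL identifiers.
        parts.append("`" + name.replace("`", "``") + "` " + col_type)
    return ", ".join(parts)
```

In a notebook this might be used as `inferred = spark.read.options(header=True, inferSchema=True).csv(path)` followed by `spark.read.schema(ddl_with_string_overrides(inferred.dtypes, {"ID"})).option("header", True).csv(path)`, which keeps inferred types for the other columns while reading ID as text. The cost is one additional pass over the data for inference.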

If you don't want to specify the schema, Auto Loader is a better option, because it lets you give a schema hint:

```python
# checkpoint_path and folder are placeholders for your own locations
df = (spark.readStream
      .format("cloudFiles")
      .option("cloudFiles.format", "csv")
      .option("cloudFiles.schemaLocation", checkpoint_path)
      .option("cloudFiles.schemaHints", "ID String")
      .option("inferSchema", True)
      .option("mergeSchema", True)
      .load(folder))
```


02-01-2023 06:56 PM

Thanks @Hubert Dudek,

Because the file-reading code runs in a loop over sources in different directories, each with a different schema and dynamic column names (sometimes the column is named ID, sometimes SID, etc.), Auto Loader does not seem applicable to my case. Is there an option to disable this behavior (inferring a column as double when its values have a trailing D)?
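Since the column names vary between sources, one option that avoids hard-coding them is to scan a sample of each file before reading it and flag the columns whose values look like digits followed by a D or F suffix, which are the values inference would turn into doubles. This is a hypothetical helper built on Python's csv module, assuming the file (or a sample of it) can be opened from the driver; the 1000-row default sample size is arbitrary. The flagged names can then be declared as string in an explicit schema for that file.

```python
import csv
import re
from typing import Iterable, Set

# Digits (optionally with a fraction) ending in a Java type suffix, e.g. 12345678D.
# Values like this are inferred as doubles and lose the suffix when parsed.
_SUFFIXED_NUMBER = re.compile(r"[+-]?\d+(\.\d*)?[dDfF]")


def suffixed_number_columns(lines: Iterable[str], sample_rows: int = 1000,
                            sep: str = ",") -> Set[str]:
    """Return header names of columns holding suffixed numbers in the first
    `sample_rows` data rows. `lines` is any iterable of CSV text lines,
    header first (for example an open file object)."""
    reader = csv.DictReader(lines, delimiter=sep)
    flagged: Set[str] = set()
    for row_number, row in enumerate(reader):
        if row_number >= sample_rows:
            break
        for name, value in row.items():
            # name is None for surplus fields; value is None for missing ones.
            if name is None or value is None:
                continue
            if _SUFFIXED_NUMBER.fullmatch(value.strip()):
                flagged.add(name)
    return flagged
```

Because the check runs per file, the same loop works whether the column is called ID, SID or something else.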

Anonymous


04-10-2023 09:52 PM

Hi @tracy ng

Thank you for posting your question in our community! We are happy to assist you.

To help us provide you with the most accurate information, could you please take a moment to review the responses and select the one that best answers your question?

This will also help other community members who may have similar questions in the future. Thank you for your participation and let us know if you need any further assistance!
