How to make spark-submit work on Windows?

02-03-2023 01:04 PM

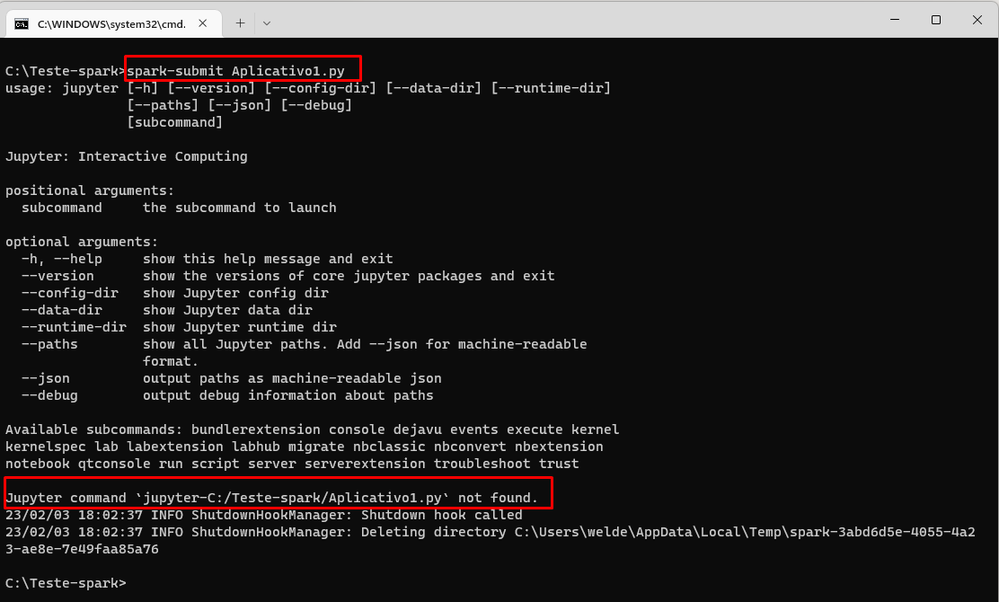

I have Jupyter Notebook installed on my machine and it works normally. When I tried to run a Spark application with the spark-submit command, it returned a message saying the file was not found. What do I need to do to make it work?

Below is a file with a simple example.

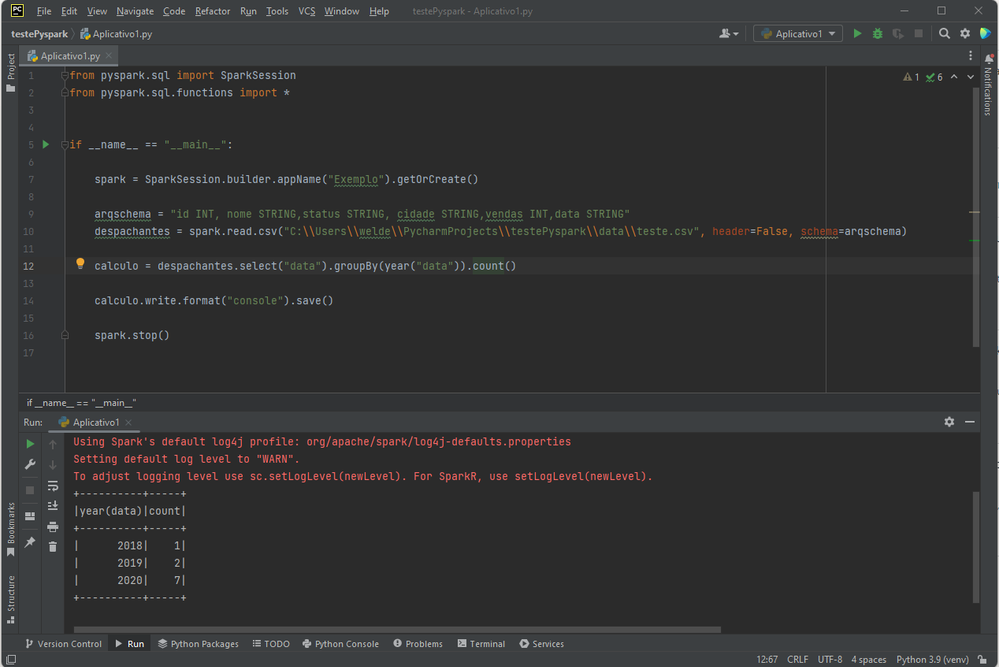

    from pyspark.sql import SparkSession
    from pyspark.sql.functions import year

    if __name__ == "__main__":
        spark = SparkSession.builder.appName("Exemplo").getOrCreate()

        arqschema = "id INT, nome STRING, status STRING, cidade STRING, vendas INT, data STRING"

        # Raw string: in a normal literal the "\t" in "\test-spark" would be read as a tab.
        despachantes = spark.read.csv(r"C:\test-spark\despachantes.csv", header=False, schema=arqschema)

        # The schema names the date column "data", so it is referenced by that name here.
        calculo = despachantes.select("data").groupBy(year("data")).count()

        calculo.write.format("console").save()

        spark.stop()
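Separately from the spark-submit error itself, a Windows path like the one passed to `spark.read.csv` is easy to get wrong in a Python string literal, because `\t` is read as a tab unless the string is raw. The sketch below only illustrates that pitfall; the variable names are made up for the illustration and nothing in the thread confirms that this was the cause of the reported message.

```python
from pathlib import PureWindowsPath

# Normal literal: "\t" is interpreted as a tab character, so this is not
# the directory name that was intended.
broken = "C:\test-spark"

# Two ways to write the full path safely: a raw string or forward slashes.
raw = r"C:\test-spark\despachantes.csv"
forward = "C:/test-spark/despachantes.csv"

print("tab inside normal literal:", "\t" in broken)
print("tab inside raw literal:", "\t" in raw)

# PureWindowsPath treats both separators the same, on any operating system.
print("same path:", PureWindowsPath(raw) == PureWindowsPath(forward))
```

Either the raw string or the forward-slash form can be passed to `spark.read.csv` in place of the plain literal.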

Labels:

- Int

- Spark

- Spark application

- Spark-submit

- Windows

1 ACCEPTED SOLUTION


02-06-2023 05:38 PM

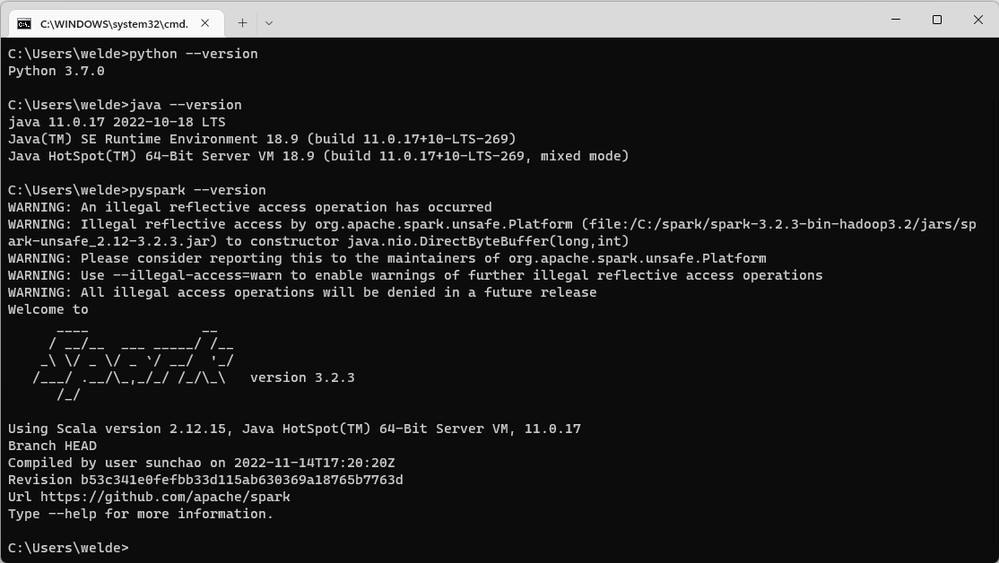

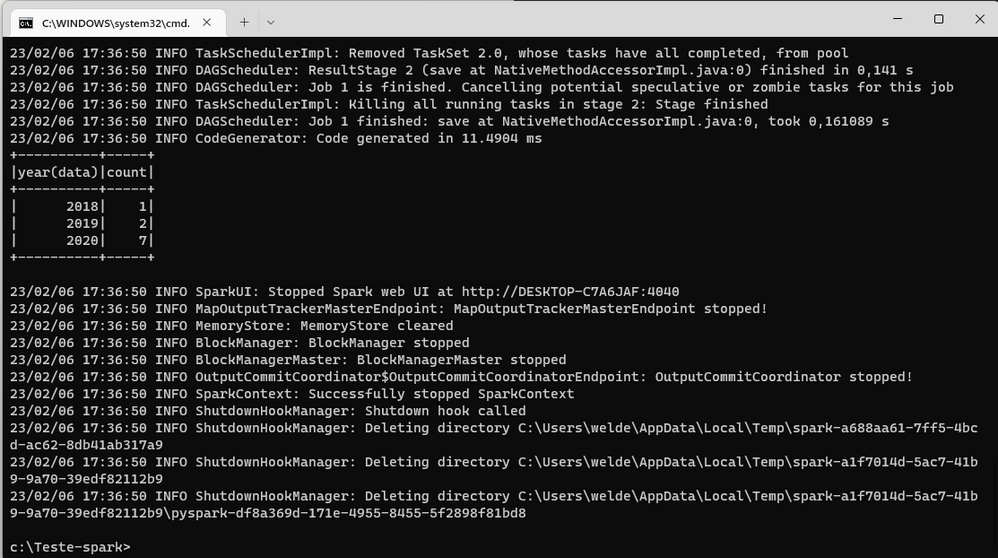

I managed to resolve it. The cause was an incompatibility between my Java and Python versions and the Spark version I was using.

I will create a video explaining how to use Spark without Jupyter Notebook.
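Since the fix came down to version compatibility, a quick way to see what is actually installed is to print the Python, Java, and PySpark versions side by side. This is a minimal sketch, not the poster's procedure: `tool_version` and `environment_report` are hypothetical helper names, and the script assumes `java` is reachable through `PATH`. Which combinations are supported has to be checked against the documentation for the Spark release in use.

```python
import shutil
import subprocess
import sys


def tool_version(command):
    """Return the first line a tool prints for its version flag, or None if it is not on PATH."""
    executable = shutil.which(command[0])
    if executable is None:
        return None
    result = subprocess.run(
        [executable, *command[1:]],
        capture_output=True,
        text=True,
        check=False,
    )
    # `java -version` writes to stderr, so both streams are inspected.
    output = (result.stdout + result.stderr).strip()
    return output.splitlines()[0] if output else ""


def environment_report():
    """Collect the versions that need to be compatible with the Spark release."""
    try:
        import pyspark
        pyspark_version = pyspark.__version__
    except ImportError:
        pyspark_version = None
    return {
        "python": sys.version.split()[0],
        "java": tool_version(["java", "-version"]),
        "pyspark": pyspark_version,
    }


if __name__ == "__main__":
    for name, version in environment_report().items():
        print(f"{name}: {version or 'not found'}")
```

Running it from the same command prompt used for spark-submit shows what that shell resolves, which may differ from what Jupyter uses.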

2 REPLIES


02-05-2023 10:20 AM

Hi, I have not tested this in my lab yet, but could you please check and confirm whether this works: https://stackoverflow.com/questions/37861469/how-to-submit-spark-application-on-cmd


