Sample Datasets URL in Azure Databricks / access sample datasets when NPIP and Firewall is enabled


02-07-2023 07:51 PM

Hi,

I have an Azure Databricks instance configured to use VNet injection with secure cluster connectivity. I have an Azure Firewall configured and controlling all traffic ingress and egress locations as per this article: https://learn.microsoft.com/en-us/azure/databricks/resources/supported-regions#--dbfs-root-blob-stor...

I can access the Hive metastore, DBFS via the internal storage account, and so on. Basically, the cluster is up and running, and I seem to have whitelisted every domain and IP that the article lists as required for connectivity.

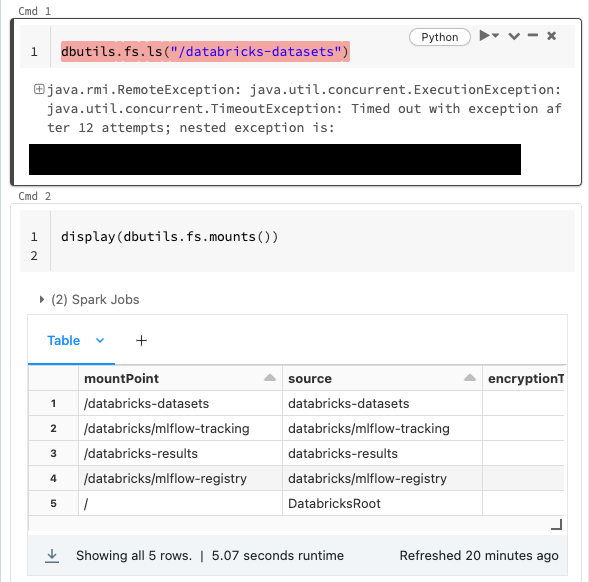

However, the one thing I can't get running is the sample-datasets mount on DBFS. Every time I try to access the mount it times out:

I'm going to assume that it's because I haven't whitelisted the underlying storage location of this dataset source. When I list the mounts it doesn't give me any more detail:

| mountPoint | source | encryptionType |
| --- | --- | --- |
| /databricks-datasets | databricks-datasets | |
| /databricks/mlflow-tracking | databricks/mlflow-tracking | |
| /databricks-results | databricks-results | |
| /databricks/mlflow-registry | databricks/mlflow-registry | |
| / | DatabricksRoot | |

Looking at the exception, it seems to time out on an S3 client, so I assume it's actually reading an S3 bucket in AWS somewhere:

---------------------------------------------------------------------------

ExecutionError Traceback (most recent call last)

<command-3658692990033083> in <cell line: 1>()

----> 1 dbutils.fs.ls("/databricks-datasets")

/databricks/python_shell/dbruntime/dbutils.py in f_with_exception_handling(*args, **kwargs)

360 exc.__context__ = None

361 exc.__cause__ = None

--> 362 raise exc

363

364 return f_with_exception_handling

ExecutionError: An error occurred while calling o374.ls.

: java.rmi.RemoteException: java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Timed out with exception after 12 attempts; nested exception is:

java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Timed out with exception after 12 attempts

at com.databricks.backend.daemon.data.client.DbfsClient.send0(DbfsClient.scala:135)

at com.databricks.backend.daemon.data.client.DbfsClient.sendIdempotent(DbfsClient.scala:69)

at com.databricks.backend.daemon.data.client.RemoteDatabricksStsClient.getSessionTokenFor(DbfsClient.scala:311)

at com.databricks.backend.daemon.data.client.DatabricksSessionCredentialsProvider.startSession(DatabricksSessionCredentialsProvider.scala:56)

at com.databricks.backend.daemon.data.client.DatabricksSessionCredentialsProvider.getCredentials(DatabricksSessionCredentialsProvider.scala:46)

at com.databricks.backend.daemon.data.client.DatabricksSessionCredentialsProvider.getCredentials(DatabricksSessionCredentialsProvider.scala:34)

at com.amazonaws.http.AmazonHttpClient$RequestExecutor.getCredentialsFromContext(AmazonHttpClient.java:1266)

at com.amazonaws.http.AmazonHttpClient$RequestExecutor.runBeforeRequestHandlers(AmazonHttpClient.java:842)

at com.amazonaws.http.AmazonHttpClient$RequestExecutor.doExecute(AmazonHttpClient.java:792)

at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeWithTimer(AmazonHttpClient.java:779)

at com.amazonaws.http.AmazonHttpClient$RequestExecutor.execute(AmazonHttpClient.java:753)

at com.amazonaws.http.AmazonHttpClient$RequestExecutor.access$500(AmazonHttpClient.java:713)

at com.amazonaws.http.AmazonHttpClient$RequestExecutionBuilderImpl.execute(AmazonHttpClient.java:695)

at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:559)

at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:539)

at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5453)

at com.amazonaws.services.s3.AmazonS3Client.getBucketRegionViaHeadRequest(AmazonS3Client.java:6428)

at com.amazonaws.services.s3.AmazonS3Client.fetchRegionFromCache(AmazonS3Client.java:6401)

at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5438)

at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5400)

at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5394)

at com.amazonaws.services.s3.AmazonS3Client.listObjectsV2(AmazonS3Client.java:971)

at shaded.databricks.org.apache.hadoop.fs.s3a.EnforcingDatabricksS3Client.listObjectsV2(EnforcingDatabricksS3Client.scala:214)

Is there any documentation on where this storage account actually is? Can it be accessed with an Azure Firewall configured to filter traffic?
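The stack trace itself hints at which storage backend the mount talks to. As a rough sketch, the package names in the `at ...` frames can be scanned for known client libraries. The prefixes below are taken only from the trace in this post, and the labels and the helper name `backends_in_trace` are illustrative assumptions, not part of any Databricks API.

```python
import re

# Package prefixes copied from the stack trace in this thread, mapped to
# a human-readable label. The labels are descriptive guesses, not official names.
KNOWN_PREFIXES = {
    "com.amazonaws.services.s3": "AWS SDK S3 client",
    "shaded.databricks.org.apache.hadoop.fs.s3a": "Hadoop S3A filesystem (Databricks-shaded)",
    "com.databricks.backend.daemon.data.client": "Databricks DBFS/STS client",
}

# A Java stack frame looks like: "at pkg.Class$Inner.method(File.java:123)"
FRAME = re.compile(r"^\s*at\s+([\w.$]+)\(")


def backends_in_trace(trace: str) -> list[str]:
    """Return labels of known client packages, in order of first appearance."""
    seen: list[str] = []
    for line in trace.splitlines():
        match = FRAME.match(line)
        if not match:
            continue  # skip exception messages and blank lines
        qualified_name = match.group(1)
        for prefix, label in KNOWN_PREFIXES.items():
            if qualified_name.startswith(prefix) and label not in seen:
                seen.append(label)
    return seen
```

Seeing an S3 client in the frames only suggests that the request was headed to Amazon S3. The actual hostnames still have to come from the firewall logs.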

Thanks,

Alex


02-07-2023 09:11 PM

Hi,

Could you please verify again that you have followed the main steps (https://learn.microsoft.com/en-us/azure/databricks/administration-guide/cloud-configurations/azure/v...):

- Connect Azure Databricks to other Azure services (such as Azure Storage) in a more secure manner using service endpoints or private endpoints.

- Connect to on-premises data sources for use with Azure Databricks, taking advantage of user-defined routes.

- Connect Azure Databricks to a network virtual appliance to inspect all outbound traffic and take actions according to allow and deny rules, by using user-defined routes.

- Configure Azure Databricks to use custom DNS.

- Configure network security group (NSG) rules to specify egress traffic restrictions.

- Deploy Azure Databricks clusters in your existing VNet.

Please also check the UDR-related steps: https://learn.microsoft.com/en-us/azure/databricks/administration-guide/cloud-configurations/azure/u...

Most importantly, for the metastore, artifact Blob storage, and similar services, you have to allow the domain names listed for your region: https://learn.microsoft.com/en-us/azure/databricks/resources/supported-regions#--metastore-artifact-...

Please let us know if this helps.


02-08-2023 08:09 PM

Hi Debayan,

Thank you for the reply and the links.

I have configured the workspace and Azure infrastructure as described in the links. All the storage and clusters were working except for the sample datasets.

I did a little digging into the firewall logs and found the following entries:

HTTPS request from 10.1.2.4:58006 to sts.amazonaws.com:443. Action: Deny. No rule matched. Proceeding with default action

HTTPS request from 10.1.2.4:41590 to databricks-datasets-oregon.s3.amazonaws.com:443. Action: Deny. No rule matched. Proceeding with default action

HTTPS request from 10.1.2.5:59056 to databricks-datasets-oregon.s3.us-west-2.amazonaws.com:443. Action: Deny. No rule matched. Proceeding with default action

So it seems the Azure workspace calls to AWS to read the sample datasets (I wouldn't want to be the one paying your data egress bill!).
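For anyone repeating this diagnosis, here is a small sketch that pulls the denied destinations out of log lines shaped like the ones quoted above. The regular expression is written against that exact text format, which is an assumption. Other Azure Firewall log formats, such as structured logs, would need a different pattern. The function name `denied_fqdns` is made up for the example.

```python
import re

# Matches lines of the form quoted in this thread:
# "HTTPS request from <ip>:<port> to <host>:<port>. Action: Deny. ..."
DENY = re.compile(
    r"HTTPS request from (?P<src>[\d.]+):\d+ "
    r"to (?P<host>[\w.-]+):(?P<port>\d+)\. Action: Deny"
)


def denied_fqdns(log_lines):
    """Return unique (host, port) pairs that were denied, in first-seen order."""
    seen = {}  # dicts keep insertion order, so this doubles as an ordered set
    for line in log_lines:
        match = DENY.search(line)
        if match:
            seen[(match.group("host"), int(match.group("port")))] = None
    return list(seen)


logs = [
    "HTTPS request from 10.1.2.4:58006 to sts.amazonaws.com:443. Action: Deny. No rule matched. Proceeding with default action",
    "HTTPS request from 10.1.2.4:41590 to databricks-datasets-oregon.s3.amazonaws.com:443. Action: Deny. No rule matched. Proceeding with default action",
    "HTTPS request from 10.1.2.5:59056 to databricks-datasets-oregon.s3.us-west-2.amazonaws.com:443. Action: Deny. No rule matched. Proceeding with default action",
]

for host, port in denied_fqdns(logs):
    print(f"{host}:{port}")
```

The resulting host list is the candidate set for a firewall allow rule. It only covers destinations that were actually attempted while the logs were being collected.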

I added the following rule to the firewall and it works:

    "Sample Datasets" = {
      action = "Allow"
      target_fqdns = [
        "sts.amazonaws.com",
        "databricks-datasets-oregon.s3.amazonaws.com",
        "databricks-datasets-oregon.s3.us-west-2.amazonaws.com"
      ]
      protocol = {
        type = "Https"
        port = "443"
      }
    }

Do you know what the mapping for Azure region to AWS region is? Looking at the docs here (https://docs.databricks.com/resources/supported-regions.html#control-plane-nat-and-storage-bucket-addresses) it seems you host the datasets across multiple regions. Do I have to whitelist all of them or does the Australia East region always go to Oregon to get the datasets?
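The three FQDNs in the rule follow the usual S3 virtual-hosted-style naming (a global and a regional endpoint for the bucket) plus the global STS endpoint. As a sketch, the list can be generated from a bucket name and an AWS region. This assumes that any other sample-dataset bucket would be reached through the same endpoint pattern, which this thread does not confirm. The helper name `sample_dataset_fqdns` is hypothetical, and bucket names for other regions should be taken from the Databricks documentation or your own firewall logs rather than guessed.

```python
def sample_dataset_fqdns(bucket: str, region: str) -> list[str]:
    """Build the FQDN allow list observed in the firewall logs for one bucket.

    Assumption: access uses the global STS endpoint plus the global and
    regional virtual-hosted-style S3 endpoints, as seen for the Oregon bucket.
    """
    return [
        "sts.amazonaws.com",
        f"{bucket}.s3.amazonaws.com",
        f"{bucket}.s3.{region}.amazonaws.com",
    ]


# Reproduces the rule above for the bucket and region seen in the logs.
for fqdn in sample_dataset_fqdns("databricks-datasets-oregon", "us-west-2"):
    print(fqdn)
```

This does not answer which AWS region a given Azure region reads from. It only avoids hand-typing the hostnames once the bucket and region are known.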

Thanks,

Alex


02-09-2023 09:32 AM

Hi, you can refer to https://docs.databricks.com/administration-guide/cloud-configurations/aws/customer-managed-vpc.html#... Let me know if this helps.


02-12-2023 03:09 PM

It might be useful to update the Azure docs to include the addresses of the sample datasets in AWS so they can be accessed from clusters in Azure that are behind a firewall: https://learn.microsoft.com/en-us/azure/databricks/resources/supported-regions#control-plane-ip-addr...

Anonymous


04-08-2023 11:53 PM

Hi @Alex Bush

Hope everything is going great.

Just wanted to check in if you were able to resolve your issue. If yes, would you be happy to mark an answer as best so that other members can find the solution more quickly? If not, please tell us so we can help you.

Cheers!
