Unity Catalog Shared Access Mode - dbutils.notebook.entry_point...getContext() not whitelisted

02-14-2023 08:02 AM

We are switching over to Unity Catalog and attempting to confirm that our existing notebooks still run. I created a new Shared Unity Catalog cluster and ran the notebook on it, and hit an error when executing a print statement. The information is displayed on the original cluster, and also on the UC cluster in Single User access mode, but NOT in "Shared" mode.

print(dbutils.notebook.entry_point.getDbutils().notebook().getContext().toJson())

Error Returned:

py4j.security.Py4JSecurityException: Method public java.lang.String com.databricks.backend.common.rpc.CommandContext.toJson() is not whitelisted on class class com.databricks.backend.common.rpc.CommandContext

Has anyone determined an appropriate workaround for this scenario?

18 REPLIES

Anonymous

Not applicable

04-09-2023 08:21 AM

@Donal Kirwan :

It looks like the error you encountered is related to the Py4J security settings in Databricks. Py4J is a communication library used by Databricks to allow Python code to interact with Java code.

When running in Shared mode, Databricks applies stricter security settings to prevent users from executing potentially harmful code. In this case, it seems that the Py4J method you are trying to call is not on the whitelist of allowed methods in Shared mode.

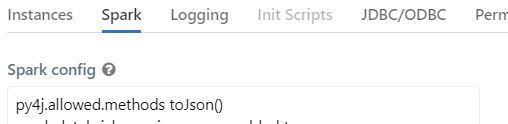

To work around this issue, you could try modifying the Py4J security settings to allow the method you need to use. This can be done by adding the method to the py4j.allowed.methods configuration property in your cluster's advanced configuration settings.

Once you've made the changes, restart your cluster and try running your notebook again in Shared mode to see if the issue is resolved.

04-09-2023 02:14 PM

04-09-2023 07:58 PM

@haiderpasha mohammed :

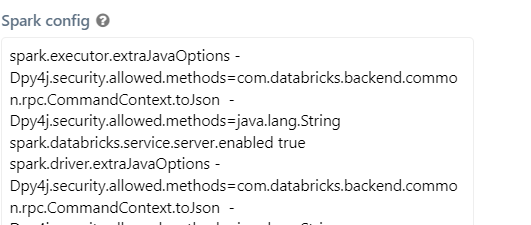

Here's an example of how you can modify the Py4J security settings in the Spark configuration to allow the method you need to use:

# Import the required modules

from pyspark import SparkConf, SparkContext

# Create a new Spark configuration

conf = SparkConf()

# Set the Py4J security settings to allow the required method

conf.set("spark.driver.extraJavaOptions", "-Dpy4j.security.allowed.methods=com.databricks.backend.common.rpc.CommandContext.toJson")

# Create a new Spark context using the modified configuration

sc = SparkContext(conf=conf)

This example sets the py4j.security.allowed.methods property to include the toJson() method on the com.databricks.backend.common.rpc.CommandContext class. You can modify this property to include any additional Py4J methods that you need to use.

Note that these changes will only affect the driver process. If you are running Spark on a cluster, you will need to ensure that these modifications are applied to all worker nodes as well. You can achieve this by setting the spark.executor.extraJavaOptions property to the same value as the spark.driver.extraJavaOptions property.

I hope this helps! Let me know if you have any further questions.

04-10-2023 03:38 AM

04-10-2023 05:47 AM

@haiderpasha mohammed : Okay, let me give you some more workarounds. Please let me know if they help.

The error message suggests that the toJson() method of the CommandContext class is not whitelisted for use in Shared Unity Catalog mode. This could be due to security restrictions on the shared environment. To work around this issue, you can try accessing the context information in a different way. Instead of using toJson(), you could try accessing the relevant information directly from the context object. For example, if you want to access the notebook ID, you could try the following code:

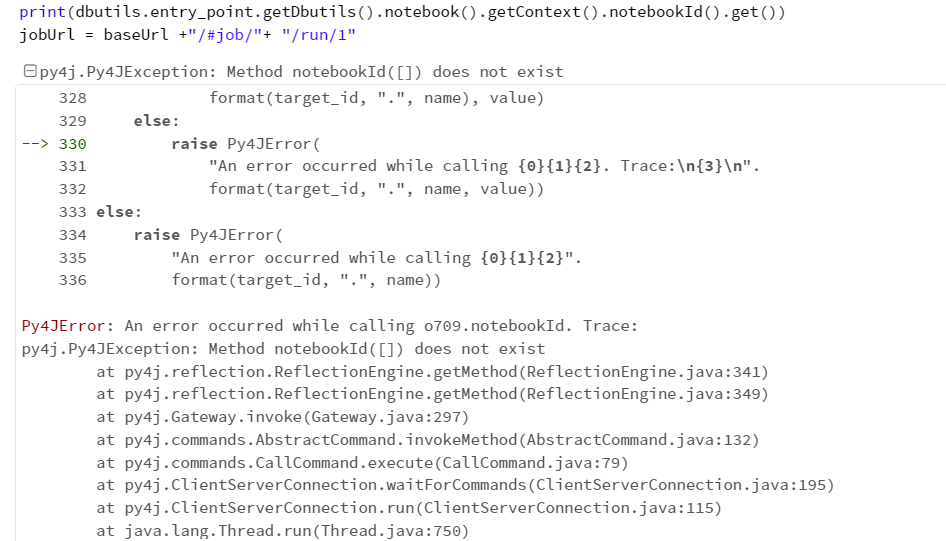

print(dbutils.entry_point.getDbutils().notebook().getContext().notebookId().get())

This code should return the ID of the current notebook. You can replace notebookId() with other methods to access different properties of the context object. If this workaround does not work, let's think of more options.

04-10-2023 07:06 AM

@Suteja Kanuri

The notebookID method doesn't exist.

Py4JError: An error occurred while calling o709.notebookId. Trace:

py4j.Py4JException: Method notebookId([]) does not exist.

I tried currentRunId() and didn't use toJson() method but it didn't work out.

Py4JError: An error occurred while calling o709.currentRunId. Trace:

py4j.security.Py4JSecurityException: Method public scala.Option com.databricks.backend.common.rpc.CommandContext.currentRunId() is not whitelisted on class class com.databricks.backend.common.rpc.CommandContext
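The two errors above differ: one accessor does not exist at all, the other exists but is blocked. A small probe can sort accessors into those buckets on a given cluster. This is only a sketch: probe_context_methods is a hypothetical helper name, and it classifies failures by matching the message fragments quoted in this thread ("not whitelisted", "does not exist"), which may not hold for every runtime version.

```python
from typing import Any, Dict, Iterable


def probe_context_methods(context: Any, method_names: Iterable[str]) -> Dict[str, str]:
    """Call each zero-argument accessor on `context` and record what happened.

    Hypothetical helper: the classification relies on the error text seen in
    this thread, not on any documented Databricks contract.
    """
    results: Dict[str, str] = {}
    for name in method_names:
        try:
            getattr(context, name)()
            results[name] = "ok"
        except Exception as exc:  # py4j is deliberately not imported here
            message = str(exc)
            if "not whitelisted" in message:
                results[name] = "blocked"
            elif "does not exist" in message:
                results[name] = "missing"
            else:
                results[name] = "error: " + type(exc).__name__
    return results
```

In a notebook, you would pass the object returned by dbutils.notebook.entry_point.getDbutils().notebook().getContext() together with a list such as ["toJson", "notebookPath", "currentRunId"], then compare the result between a Shared and a Single User cluster.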

04-10-2023 07:18 AM

@haiderpasha mohammed : Can you please try the below and check.

As a workaround, you can try using the dbutils.notebook.entry_point.getDbutils().notebook().getContext().notebookId() method instead of the notebookId([]) method. This method returns the current notebook ID as a string, which you can use for your purposes.

Similarly, you can try using the dbutils.notebook.entry_point.getDbutils().notebook().getContext().currentRunId().get() method instead of the currentRunId() method. This method returns the current run ID as a string, which you can use for your purposes.

04-10-2023 07:30 AM

04-14-2023 10:08 AM

@haiderpasha mohammed :

I see. In that case, it's possible that the getContext() method does not exist in the version of the Databricks runtime that the shared Unity Catalog cluster is running on.

One workaround you could try is to instead use the REST API to get the notebook ID. You can do this by sending an HTTP GET request to the following endpoint:

https://<databricks-instance>/api/2.0/workspace/get-status?path=<notebook-path>

Replace <databricks-instance> with the URL of your Databricks instance, and <notebook-path> with the workspace path to your notebook. The request needs to be authenticated, for example with a personal access token. The response from this endpoint will include an object_id field with the ID of the notebook. You can extract this ID from the JSON response and use it in your code. Here's an example of how you could make this request in Python:

import requests

# Set the endpoint URL and path to your notebook

endpoint = "https://<databricks-instance>/api/2.0/workspace/get-status"

notebook_path = "/path/to/notebook"

# Personal access token (placeholder; supply your own)

token = "<personal-access-token>"

# Set up the request parameters and the authorization header

params = {"path": notebook_path}

headers = {"Authorization": f"Bearer {token}"}

# Send the GET request and fail loudly on an HTTP error

response = requests.get(endpoint, params=params, headers=headers)

response.raise_for_status()

# Extract the notebook ID from the response

notebook_id = response.json()["object_id"]

# Print the notebook ID

print(notebook_id)

Let me know if this helps!

05-10-2023 11:17 PM

We are experiencing the same issue and no workaround is working so far. Do you have another idea? Is there a fix in the next DB runtime? Thanks!

04-09-2023 02:34 PM

The combination below is also not working.

py4j.allowed.methods com.databricks.backend.common.rpc.CommandContext.toJson()

06-20-2023 01:07 AM

This is how to get notebook_path correctly.

notebook_path = dbutils.notebook.entry_point.getDbutils().notebook().getContext().notebookPath().get()
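If the same notebook has to run on clusters where this accessor may or may not be allowed, the call can be wrapped so a blocked or missing method yields None instead of stopping the run. This is a minimal sketch: get_notebook_path is a hypothetical helper name, dbutils is passed in explicitly, and whether notebookPath() is permitted on a particular runtime is something to check rather than assume.

```python
from typing import Any, Optional


def get_notebook_path(dbutils: Any) -> Optional[str]:
    """Return the current notebook path, or None if the accessor is unavailable.

    Hypothetical helper; pass in the notebook's own `dbutils` object.
    """
    try:
        context = dbutils.notebook.entry_point.getDbutils().notebook().getContext()
        # notebookPath() returns a Scala Option; .get() unwraps the value.
        return context.notebookPath().get()
    except Exception:
        # Covers blocked (not whitelisted), missing, or otherwise failing calls.
        return None
```

Callers can then fall back to another source, such as a widget value or a job parameter, when the result is None.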

I think the databricks community *****. The technical support never gives the proper solution and answer. Databricks, you need to improve this community.

06-22-2023 08:14 AM

Same issue here. Tested on shared clusters up to the latest DBR version 13.1. All give the same error:

py4j.security.Py4JSecurityException: Method public java.lang.String com.databricks.backend.common.rpc.CommandContext.toJson() is not whitelisted on class class com.databricks.backend.common.rpc.CommandContext

With a Single user cluster there are no issues.

Related question: https://community.databricks.com/s/question/0D58Y00009DDCPOSA5/getcontext-in-dbutilsnotebook-not-wor...

07-03-2023 10:51 AM

For a cluster in shared mode, you can access the notebook context via the databricks_utils module from the MLflow git repo. You can retrieve the notebook_id, cluster_id, notebook_path, etc. on the shared cluster. You will need to import MLflow or use the ML DBR.

pip install mlflow

from mlflow.utils import databricks_utils

notebook_id = databricks_utils.get_notebook_id() #Extract the notebook ID

notebook_path = databricks_utils.get_notebook_path() #Extract the notebook path

print(notebook_id)

print(notebook_path)
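To collect several of these values at once without one failing lookup aborting the rest, the getters can be called through a small wrapper. This is a sketch under assumptions: collect_values and notebook_info_from_mlflow are hypothetical helper names, and the MLflow getters are assumed to behave as described in the post above; a getter that raises or is unavailable simply yields None.

```python
from typing import Callable, Dict, Optional


def collect_values(
    getters: Dict[str, Callable[[], Optional[str]]]
) -> Dict[str, Optional[str]]:
    """Call each getter and store None for any getter that raises."""
    values: Dict[str, Optional[str]] = {}
    for key, getter in getters.items():
        try:
            values[key] = getter()
        except Exception:
            values[key] = None
    return values


def notebook_info_from_mlflow() -> Dict[str, Optional[str]]:
    """Gather notebook metadata through mlflow.utils.databricks_utils."""
    # Imported lazily so this code still loads where mlflow is not installed.
    from mlflow.utils import databricks_utils

    return collect_values(
        {
            "notebook_id": databricks_utils.get_notebook_id,
            "notebook_path": databricks_utils.get_notebook_path,
            "cluster_id": databricks_utils.get_cluster_id,
        }
    )
```

Calling notebook_info_from_mlflow() in a notebook cell returns a dictionary you can print or log; outside Databricks the values would be expected to come back as None.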
