AmazonS3 with Autoloader consume "too many" reques...

03-05-2023 04:41 AM

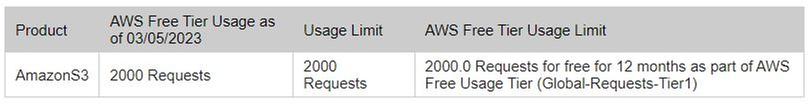

After successfully loading three small files (2 KB each) from AWS S3 using Auto Loader for learning purposes, I received an "AWS Free Tier limit alert" a few hours later, although I hadn't used the AWS account for a while.

Does this streaming service on Databricks that runs all the time consume requests even if no files/data are uploaded?

Labels: Amazon s3, AmazonS3, Api Requests, Autoloader, AWS, AWSFreeTier, S3

1 ACCEPTED SOLUTION

03-05-2023 10:56 PM

@Alexander Mora Araya

It has to check somehow whether there is a new file in the storage, so yes, it will consume requests if it runs continuously.
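To get a feel for how quickly continuous polling adds up, here is a rough back-of-the-envelope sketch. The numbers are assumptions, not measurements: the poll interval and the "one LIST request per poll" figure are hypothetical, and the quota of 2,000 LIST-class requests per month is what I recall for the S3 free tier, so please check it against your AWS billing page.

```python
# Rough estimate of how fast a continuously polling stream uses up a request quota.
# All inputs are illustrative assumptions, not values taken from Auto Loader itself.

SECONDS_PER_HOUR = 3600


def requests_per_hour(poll_interval_s: float, requests_per_poll: int = 1) -> float:
    """Requests issued in one hour when polling every `poll_interval_s` seconds."""
    return SECONDS_PER_HOUR / poll_interval_s * requests_per_poll


def hours_until_quota(quota_requests: int,
                      poll_interval_s: float,
                      requests_per_poll: int = 1) -> float:
    """Hours of continuous polling before `quota_requests` is used up."""
    return quota_requests / requests_per_hour(poll_interval_s, requests_per_poll)


if __name__ == "__main__":
    assumed_quota = 2000      # assumption: free-tier LIST-class requests per month
    assumed_interval_s = 10   # hypothetical poll interval in seconds
    rate = requests_per_hour(assumed_interval_s)
    hours = hours_until_quota(assumed_quota, assumed_interval_s)
    print(f"{rate:.0f} requests/hour, quota used up after about {hours:.1f} hours")
```

Under these assumed numbers the quota would be gone within a few hours even if no new file ever arrives, which would be consistent with the alert described in the question.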

3 REPLIES

03-06-2023 08:25 AM

Hi, Auto Loader incrementally and efficiently processes new data files as they arrive in cloud storage. Auto Loader can load data files from AWS S3 (s3://), Azure Data Lake Storage Gen2 (ADLS Gen2, abfss://), Google Cloud Storage (GCS, gs://), Azure Blob Storage (wasbs://), ADLS Gen1 (adl://), and Databricks File System (DBFS, dbfs:/). Auto Loader can ingest JSON, CSV, PARQUET, AVRO, ORC, TEXT, and BINARYFILE file formats.

Auto Loader provides a Structured Streaming source called cloudFiles. Given an input directory path on the cloud file storage, the cloudFiles source automatically processes new files as they arrive, with the option of also processing existing files in that directory. Auto Loader has support for both Python and SQL in Delta Live Tables.
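As a minimal sketch of the cloudFiles source described above, the snippet below reads from an input path and writes to a table. It uses the `availableNow` trigger so the stream processes whatever files are currently there and then stops, instead of polling the bucket all the time. The function names, paths, and table name are placeholders I made up for illustration; `spark` is the session a Databricks notebook already provides, and the `availableNow` trigger needs a reasonably recent runtime.

```python
# Sketch only: runs on Databricks, where the "cloudFiles" source is available.
# Paths and the table name passed in are hypothetical placeholders.


def autoloader_options(file_format: str, schema_location: str) -> dict:
    """Build the Auto Loader options used by the reader below."""
    return {
        "cloudFiles.format": file_format,
        "cloudFiles.schemaLocation": schema_location,
    }


def run_ingest_once(spark, source_path: str, target_table: str,
                    checkpoint_location: str, schema_location: str,
                    file_format: str = "json"):
    """Ingest the files currently in `source_path`, then let the stream stop."""
    reader = spark.readStream.format("cloudFiles")
    for key, value in autoloader_options(file_format, schema_location).items():
        reader = reader.option(key, value)
    df = reader.load(source_path)

    return (
        df.writeStream
        .option("checkpointLocation", checkpoint_location)
        # Process what is available now and stop, rather than running continuously.
        .trigger(availableNow=True)
        .toTable(target_table)
    )
```

Run on a schedule (for example as a job), this still picks up new files incrementally because the checkpoint remembers what was already ingested, but S3 is only queried while the job runs.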

You can use Auto Loader to process billions of files to migrate or backfill a table. Auto Loader scales to support near real-time ingestion of millions of files per hour.

Could you please verify whether the cloud storage is receiving any files?

Please refer: https://docs.databricks.com/ingestion/auto-loader/index.html

Please let us know if this helps.

Also, please tag @Debayan in your next response so that I am notified. Thank you!

03-06-2023 08:42 AM

@Debayan Mukherjee

Thanks for this explanation. Everything worked fine when I tested it, as I mentioned above. The only thing is that it continuously makes requests to S3 to check whether new data needs to be pulled. Am I wrong here?
