PyPI library sometimes doesn't install during workflow execution

03-06-2023 07:34 AM

I have a workflow that runs on a job cluster and contains a task that requires the prophet library from PyPI:

    {
      "task_key": "my_task",
      "depends_on": [
        {
          "task_key": "<...>"
        }
      ],
      "notebook_task": {
        "notebook_path": "<...>",
        "source": "WORKSPACE"
      },
      "job_cluster_key": "job_cluster",
      "libraries": [
        {
          "pypi": {
            "package": "prophet==1.1.2"
          }
        }
      ],
      "timeout_seconds": 0,
      "email_notifications": {}
    },

Sometimes it works fine, but sometimes I get the error below:

    Run result unavailable: job failed with error message
    Library installation failed for library due to user error for pypi {
      package: "prophet==1.1.2"
    }
    . Error messages:
    Library installation attempted on the driver node of cluster <...> and failed. Please refer to the following error message to fix the library or contact Databricks support. Error Code: DRIVER_LIBRARY_INSTALLATION_FAILURE. Error Message: org.apache.spark.SparkException: Process List(/databricks/python/bin/pip, install, prophet==1.1.2, --disable-pip-version-check) exited with code 1. ERROR: Could not install packages due to an OSError: [Errno 2] No such file or directory: '/databricks/python3/bin/f2py'

I have seen suggestions to install this library on the cluster in advance. But I run my workflow on a job cluster (not an all-purpose cluster), so I have no way to install anything in advance. The weird thing is that sometimes it works and sometimes it doesn't.

So if there is a way to install a library on a shared job cluster with a 100% guarantee, that would be great!

12 REPLIES

Anonymous

03-12-2023 05:34 PM

@Eugene Bikkinin :

OPTION 1:

The error message suggests that the installation of the `prophet` library failed on the driver node of your Databricks cluster. Specifically, the installer could not find the file /databricks/python3/bin/f2py.

One possible solution is to try installing the library again with pip's --no-binary flag, which can sometimes help when the pre-built binary packages cause problems. The configuration below is meant to tell Databricks to install the prophet library with pip using that flag:

    [
      {
        "classification": "pip",
        "pipPackages": [
          {
            "package": "prophet",
            "noBinary": true
          }
        ]
      }
    ]

OPTION 2:

Steps to install PyPI packages on a Databricks shared job cluster are as below:

- Navigate to the Databricks workspace and click on the "Clusters" tab.

- Click on the name of the shared job cluster you want to install the PyPI packages on.

- Click on the "Libraries" tab and then click on the "Install New" button.

- In the "Install Library" dialog box, select "PyPI" as the library source.

- Enter the name of the PyPI package you want to install in the "Package" field.

- If you want to install a specific version of the package, enter it in the "Version" field. If you want to install the latest version, leave the "Version" field blank.

- Click on the "Install" button to install the PyPI package.

Once the PyPI package is installed, it will be available to all jobs running on that shared job cluster.

03-13-2023 03:21 AM

Thank you @Suteja Kanuri

Just to be sure about the second option: I thought that job clusters work like k8s pods, where you are given some spare CPU and memory on existing clusters, side by side with other customers.

But if I can explicitly set up a library on the job cluster, then my assumption is not correct. So what is the difference between job clusters and all-purpose clusters?

03-13-2023 04:29 AM

@Eugene Bikkinin :

In Databricks, a job cluster is a temporary cluster that is created on-demand to run a specific job or task.

Job clusters are ephemeral: the job scheduler creates one when a run starts and terminates it after the job completes. This isolates the resources needed for a specific job or task from those of any all-purpose cluster.

While it is possible to explicitly set up a library on a job cluster, the main purpose of a job cluster is to provide dedicated resources for a specific job or task. In contrast, all-purpose clusters in Databricks are long-lived and are used to run a wide variety of workloads, including interactive workloads, streaming, and batch processing jobs.

All-purpose clusters are optimized for general-purpose computing and typically include nodes that are optimized for CPU and memory-intensive workloads. They are designed to provide a flexible and scalable platform for running various types of workloads simultaneously.

Hope this explanation helps!

03-13-2023 05:19 AM

Yes, thank you! It is a really nice explanation.

But then I must return to my initial question: how can I guarantee that the library will be installed on this ephemeral job cluster?

The solution I have arrived at is to use Databricks Container Services and run the job cluster from my custom image with the library preinstalled.

03-13-2023 04:23 AM

I think my wording in the initial message was not quite right.

The issue I am facing is that sometimes a job cluster (not an all-purpose cluster) cannot install the library while executing the workflow.

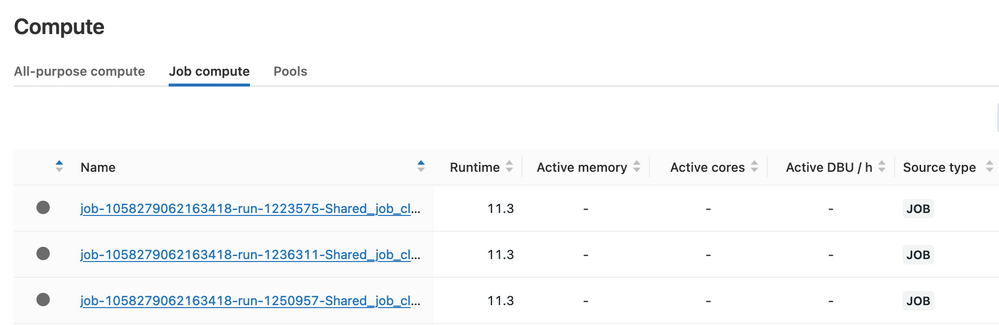

So option 1 is valid, but option 2 is not, because I cannot see the job cluster in the Clusters tab. I can see job clusters in the "Job compute" tab, but they are all different there.

03-13-2023 04:34 AM

@Eugene Bikkinin : Can you try the options below?

To troubleshoot the issue, you can start by checking the job cluster logs to see if there are any error messages or exceptions related to the library installation. You can also try to manually install the library on the job cluster to see if it installs successfully. Additionally, you can check the network connectivity, dependencies, permissions, resources, compatibility, and package quality to ensure that they are not causing the issue.

Some of the most common reasons are:

- Network issues: If the job cluster is unable to connect to the internet or the library repository, it may not be able to download and install the required libraries.

- Dependency conflicts: If the library being installed has dependencies that conflict with existing dependencies on the job cluster, the installation may fail.

- Lack of permissions: If the job cluster does not have sufficient permissions to install the library, the installation may fail.

- Limited resources: If the job cluster does not have enough disk space, memory, or CPU resources to install the library, the installation may fail.

- Incompatibility: If the library being installed is not compatible with the version of the runtime environment in the job cluster, the installation may fail.

- Package quality: If the library package has bugs, errors, or issues, the installation may fail.

- Timeouts: If the installation process takes too long, the job cluster may time out before the installation is complete.
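Because the failure in this thread is intermittent, one thing to try for the transient causes above (network hiccups, timeouts) is retrying the install from the first cell of the notebook instead of relying on a single attempt. The following is only a minimal sketch: the function name `pip_install_with_retry`, the attempt count, and the delay are all assumptions made for illustration, and whether a retry clears the specific f2py error reported above has not been verified.

```python
import subprocess
import sys
import time


def pip_install_with_retry(package, attempts=3, delay_seconds=10.0,
                           run=subprocess.run, sleep=time.sleep):
    """Run `pip install <package>` up to `attempts` times.

    Returns True as soon as one attempt exits with code 0, else False.
    `run` and `sleep` are injectable so the logic can be tested offline.
    """
    # Use the current interpreter's pip so the package lands in the
    # environment this Python process is running in.
    command = [sys.executable, "-m", "pip", "install", package]
    for attempt in range(1, attempts + 1):
        result = run(command)
        if result.returncode == 0:
            return True
        if attempt < attempts:
            # Linear back-off: wait longer after each failed attempt.
            sleep(delay_seconds * attempt)
    return False
```

A notebook could call `pip_install_with_retry("prophet==1.1.2")` before importing the library and fail the task explicitly if it returns False. This does not remove the root cause; it only makes a transient failure less likely to fail the whole run.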

03-13-2023 05:17 AM

@Suteja Kanuri

> You can also try to manually install the library on the job cluster to see if it installs successfully.

So how can I manually install the library on the job cluster if it is ephemeral as you wrote above?

03-13-2023 05:28 AM

@Eugene Bikkinin :

A way to install libraries on your job cluster is to use init scripts. Init scripts run when a cluster starts and can be used to install libraries or perform other initialization tasks. To install a library this way, create a script that installs it using pip or another package manager, and then attach the script to your cluster as an init script. An example is below:

    #!/bin/bash
    /databricks/python/bin/pip install pandas

You can attach this script to your cluster by going to the "Advanced Options" tab when creating your job, and then adding the script to the "Init Scripts" field.
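For a job defined as JSON, like the one in the original question, the init script would presumably be attached in the job cluster's `new_cluster` settings rather than through the UI. The sketch below builds such a fragment in Python. It assumes the cluster spec accepts an `init_scripts` list whose entries point at a workspace file. The script path is hypothetical, and the `<...>` values are placeholders that the thread does not specify.

```python
import json

# Hypothetical workspace path of the init script; replace with your own.
INIT_SCRIPT_PATH = "/Users/someone@example.com/install_prophet.sh"


def with_init_script(new_cluster, script_path):
    """Return a copy of a cluster spec with one init script appended.

    Assumes the `init_scripts` entry shape
    {"workspace": {"destination": <path>}}; the input dict is not mutated.
    """
    spec = dict(new_cluster)
    existing = list(new_cluster.get("init_scripts", []))
    existing.append({"workspace": {"destination": script_path}})
    spec["init_scripts"] = existing
    return spec


# Placeholder cluster settings; "<...>" values are left unspecified on purpose.
base_cluster = {"spark_version": "<...>", "node_type_id": "<...>", "num_workers": 1}
print(json.dumps(with_init_script(base_cluster, INIT_SCRIPT_PATH), indent=2))
```

The script itself would pin the same version as the task, for example `pip install prophet==1.1.2`, so that the cluster fails at startup rather than partway through the workflow if the install does not succeed.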

03-31-2023 02:40 AM

Hey @Eugene Bikkinin

Thank you for your question! To assist you better, please take a moment to review the answer and let me know if it best fits your needs.

Please help us select the best solution by clicking on "Select As Best" if it does.

Your feedback will help us ensure that we are providing the best possible service to you. Thank you!

03-31-2023 03:08 AM

Hi @Vartika Nain

In the end I used a different solution: Databricks Container Services, running the job cluster from my custom image with the library preinstalled.
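The thread does not show the actual configuration, so the following is only a sketch of what pointing a job cluster at a custom image might look like. It assumes Databricks Container Services is enabled for the workspace and that the cluster spec accepts a `docker_image` object with a `url` field. The image name is hypothetical, and the `<...>` values are placeholders.

```python
import json

# Hypothetical image with prophet preinstalled; not taken from the thread.
CUSTOM_IMAGE_URL = "myregistry.example.com/databricks-prophet:1.1.2"


def job_cluster_with_image(job_cluster_key, image_url, base_cluster):
    """Build one `job_clusters` entry whose cluster starts from a custom image.

    Assumes the entry shape {"job_cluster_key": ..., "new_cluster": {...}}
    and a `docker_image` field of the form {"url": <image>}.
    """
    new_cluster = dict(base_cluster)  # copy so the caller's dict is untouched
    new_cluster["docker_image"] = {"url": image_url}
    return {"job_cluster_key": job_cluster_key, "new_cluster": new_cluster}


# Placeholder cluster settings; "<...>" values are left unspecified on purpose.
base_cluster = {"spark_version": "<...>", "node_type_id": "<...>", "num_workers": 1}
entry = job_cluster_with_image("job_cluster", CUSTOM_IMAGE_URL, base_cluster)
print(json.dumps(entry, indent=2))
```

With the library baked into the image, the `libraries` block in the task definition would no longer be needed for prophet, which removes the install step that was failing intermittently. A private registry would also need credentials, which are omitted here.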

04-01-2023 09:52 PM

@Eugene Bikkinin : That's great!

01-24-2024 10:07 AM

Hi @xneg ,

Glad to hear this. I'm facing the same issue. It would be great if you could elaborate on it a bit more.
