Create Schema with Unity enabled

03-11-2023 09:15 AM

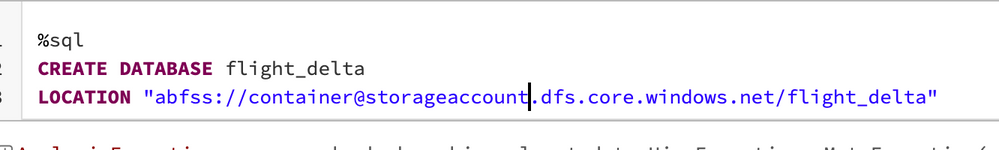

I have Unity set up and am trying to create a Delta schema and table, but I am getting an error on the schema creation. I am able to list the files and folders in the ADLS Gen2 storage account, and I am able to write a Parquet file to ADLS, but I cannot create a schema. Did I miss a step in security somewhere?

"

AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:Got exception: shaded.databricks.azurebfs.org.apache.hadoop.fs.azurebfs.contracts.exceptions.KeyProviderException Failure to initialize configuration for storage account datalakeshepuc.dfs.core.windows.net: Invalid configuration value detected for fs.azure.account.key)"

Labels: Delta Schema, Delta table, Schema, Unity, Unity Setup

12 REPLIES

Anonymous


03-11-2023 02:41 PM

The exception comes from the Hive module, so it seems you're trying to create the schema in the Hive metastore. Please review cluster configurations. UC is supported on DBR 11.1 onwards in single user and shared access modes. Run the USE CATALOG command and then execute the CREATE DATABASE command.

USE CATALOG <catalog>;

CREATE { DATABASE | SCHEMA } [ IF NOT EXISTS ] <schema_name>

[ MANAGED LOCATION '<location_path>' ]

[ COMMENT <comment> ]

[ WITH DBPROPERTIES ( <property_key = property_value [ , ... ]> ) ];

https://learn.microsoft.com/en-us/azure/databricks/data-governance/unity-catalog/create-schemas
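As a rough illustration of the syntax above, the sketch below assembles the two statements in Python so they could be passed to `spark.sql` from a notebook. The helper name `build_create_schema` and the catalog and schema names are hypothetical; only the SQL keywords come from the reply, and the storage path is left as placeholders.

```python
from typing import List, Optional


def build_create_schema(catalog: str, schema: str,
                        managed_location: Optional[str] = None) -> List[str]:
    """Return the USE CATALOG and CREATE SCHEMA statements as strings.

    Hypothetical helper: it only formats SQL text and does not validate names.
    """
    create = f"CREATE SCHEMA IF NOT EXISTS {schema}"
    if managed_location is not None:
        # MANAGED LOCATION is an optional clause of CREATE SCHEMA.
        create += f" MANAGED LOCATION '{managed_location}'"
    return [f"USE CATALOG {catalog}", create]


statements = build_create_schema(
    "main",   # hypothetical catalog name
    "sales",  # hypothetical schema name
    "abfss://<container>@<storage-account>.dfs.core.windows.net/<path>",
)
for stmt in statements:
    print(stmt)
    # In a Databricks notebook you would run: spark.sql(stmt)
```

Running `USE CATALOG` first matters here because, without it, the `CREATE SCHEMA` statement may resolve against the Hive metastore, which is where the original exception came from.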


03-12-2023 05:49 AM

Tried USE CATALOG, same error.

When you say "Please review cluster configurations," what do you mean? There are hundreds of settings that could be modified. Is there something I need to do in addition to the standard Unity setup steps?


03-12-2023 07:01 AM

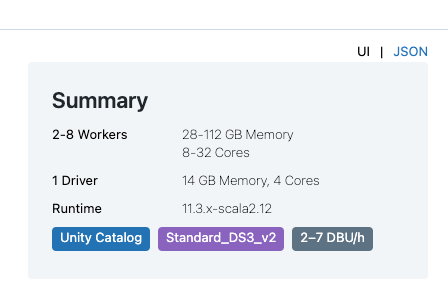

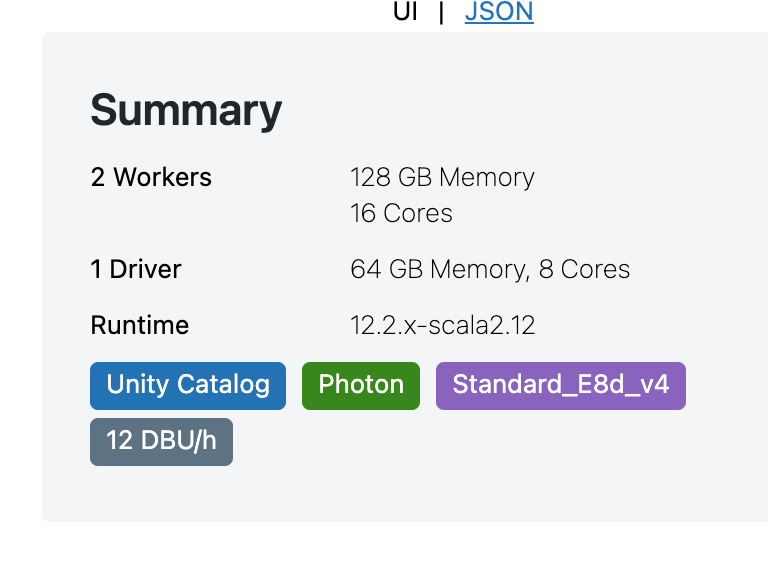

@Shep Sheppard As I mentioned, UC is supported on DBR 11.1 onwards in single user and shared access modes, so you should use DBR 11.1+ with either single user or shared access mode. If the cluster has a valid DBR version and access mode, the cluster summary should show Unity Catalog as enabled.
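The two conditions in this reply can be sketched as a small check over a cluster spec. This is only an illustration: it assumes the spec is a plain dict with a `spark_version` string such as `11.3.x-scala2.12` and a `data_security_mode` field, and it assumes that `SINGLE_USER` and `USER_ISOLATION` correspond to the single user and shared access modes. The function name is hypothetical.

```python
import re

# Assumed mapping of access modes to `data_security_mode` values:
# SINGLE_USER = single user, USER_ISOLATION = shared.
UC_ACCESS_MODES = {"SINGLE_USER", "USER_ISOLATION"}
MIN_DBR = (11, 1)  # minimum runtime version stated in the reply


def supports_unity_catalog(cluster: dict) -> bool:
    """Check the DBR version and access mode of a cluster spec dict."""
    match = re.match(r"(\d+)\.(\d+)", cluster.get("spark_version", ""))
    if match is None:
        # Version string not in the expected "major.minor..." form.
        return False
    version = (int(match.group(1)), int(match.group(2)))
    mode = cluster.get("data_security_mode")
    return version >= MIN_DBR and mode in UC_ACCESS_MODES


ok = {"spark_version": "11.3.x-scala2.12", "data_security_mode": "SINGLE_USER"}
no_isolation = {"spark_version": "11.3.x-scala2.12", "data_security_mode": "NONE"}
old_runtime = {"spark_version": "10.4.x-scala2.12", "data_security_mode": "USER_ISOLATION"}

for spec in (ok, no_isolation, old_runtime):
    print(spec["spark_version"], spec["data_security_mode"], supports_unity_catalog(spec))
```

The cluster summary in the UI remains the authoritative indicator; a check like this is only a convenience when reviewing many cluster definitions.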


03-12-2023 08:09 AM

Anonymous


03-21-2023 12:01 AM

Hi @Shep Sheppard

Thank you for your question! To assist you better, please take a moment to review the answer and let me know if it best fits your needs.

Please help us select the best solution by clicking on "Select As Best" if it does.

Your feedback will help us ensure that we are providing the best possible service to you.

Thank you!


03-21-2023 04:02 AM

Still not solved. I am unable to write directly to ADLS Gen2.

Anonymous


03-21-2023 03:40 AM

@Shep Sheppard Since you're creating a schema with a managed location, you should ensure that a valid storage credential and external location have been created in order to authenticate to the storage account. The managed location path must be defined in an external location configuration, and you must have the CREATE MANAGED STORAGE privilege on that external location. You can use the path that is defined in the external location configuration or a subpath.

https://learn.microsoft.com/en-us/azure/databricks/data-governance/unity-catalog/create-schemas
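The "path or a subpath" rule can be illustrated with a simple string comparison. This is a simplified sketch and not how Unity Catalog itself resolves paths; the function name, container, and storage account values are hypothetical.

```python
def is_within_external_location(path: str, location_url: str) -> bool:
    """True if `path` equals the external location URL or is a subpath of it."""
    base = location_url.rstrip("/")
    candidate = path.rstrip("/")
    # Require a "/" boundary so that ".../uc2" is not treated as under ".../uc".
    return candidate == base or candidate.startswith(base + "/")


# Hypothetical external location URL
location = "abfss://data@examplestorage.dfs.core.windows.net/uc"

print(is_within_external_location(location + "/sales", location))
print(is_within_external_location(
    "abfss://data@examplestorage.dfs.core.windows.net/uc2", location))
```

A managed location that fails a check like this is not covered by that external location, so schema creation would need a different external location or a different path.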


03-21-2023 04:04 AM

I went through all of this when I set up UC, and UC is working, but I am still not able to write to ADLS Gen2. I will review the links again, but I have all of that set up.

Anonymous


03-21-2023 04:14 AM

@Shep Sheppard Can you share your cluster Spark configuration, storage credential, and external location configurations? Please mask any sensitive data. Do you initialize storage with any of the Spark configurations below, whether on the cluster, in a notebook, or in an init script? If so, please remove them.

spark.conf.set("fs.azure.account.auth.type.<storage-account>.dfs.core.windows.net", "OAuth")

spark.conf.set("fs.azure.account.oauth.provider.type.<storage-account>.dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")

spark.conf.set("fs.azure.account.oauth2.client.id.<storage-account>.dfs.core.windows.net", "<application-id>")

spark.conf.set("fs.azure.account.oauth2.client.secret.<storage-account>.dfs.core.windows.net", service_credential)

spark.conf.set("fs.azure.account.oauth2.client.endpoint.<storage-account>.dfs.core.windows.net", "https://login.microsoftonline.com/<directory-id>/oauth2/token")

spark.conf.set("fs.azure.account.auth.type.<storage-account>.dfs.core.windows.net", "SAS")

spark.conf.set("fs.azure.sas.token.provider.type.<storage-account>.dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider")

spark.conf.set("fs.azure.sas.fixed.token.<storage-account>.dfs.core.windows.net", "<token>")

spark.conf.set("fs.azure.account.key.<storage-account>.dfs.core.windows.net", dbutils.secrets.get(scope="<scope>", key="<storage-account-access-key>"))
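One way to look for leftovers of the settings listed above is to scan a configuration for their key prefixes. The sketch below is a minimal example that works on a plain dict; the function name and the sample values are hypothetical, and the handling of a `spark.hadoop.` prefix on cluster-level keys is an assumption.

```python
# Key prefixes taken from the settings listed in the reply above.
LEGACY_PREFIXES = (
    "fs.azure.account.auth.type.",
    "fs.azure.account.oauth.provider.type.",
    "fs.azure.account.oauth2.client.id.",
    "fs.azure.account.oauth2.client.secret.",
    "fs.azure.account.oauth2.client.endpoint.",
    "fs.azure.sas.token.provider.type.",
    "fs.azure.sas.fixed.token.",
    "fs.azure.account.key.",
)
HADOOP_PREFIX = "spark.hadoop."


def find_legacy_storage_keys(conf: dict) -> list:
    """Return the config keys that set direct ADLS credentials, sorted."""
    hits = []
    for key in conf:
        # Assumption: cluster-level settings may carry a "spark.hadoop." prefix.
        bare = key[len(HADOOP_PREFIX):] if key.startswith(HADOOP_PREFIX) else key
        if bare.startswith(LEGACY_PREFIXES):
            hits.append(key)
    return sorted(hits)


# Hypothetical configuration with masked values
sample_conf = {
    "spark.databricks.delta.preview.enabled": "true",
    "fs.azure.account.key.<storage-account>.dfs.core.windows.net": "<masked>",
    "spark.hadoop.fs.azure.account.auth.type.<storage-account>.dfs.core.windows.net": "OAuth",
}
print(find_legacy_storage_keys(sample_conf))
```

The original error message names `fs.azure.account.key`, so any hit on that prefix would be the first thing to look at.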


03-21-2023 04:50 AM

I did not. I thought that by using UC and the connector I would not have to provide that anymore. Without UC I store everything in Key Vault and use secret scopes. I was hoping UC would solve the unmanaged ADLS access, but to be fair it is not documented either way.

Anonymous


03-21-2023 05:05 AM

@Shep Sheppard Please refer to the doc below on setting up a storage credential. You can use either an Azure managed identity or a service principal as the identity that authorizes access to your storage container. Managed identities are strongly recommended. They allow Unity Catalog to access storage accounts protected by network rules, which isn’t possible using service principals, and they remove the need to manage and rotate secrets. Once you have a valid storage credential and external location, you should be good.

Anonymous


03-21-2023 10:46 PM

Hi @Shep Sheppard

Hope all is well! Just wanted to check in if you were able to resolve your issue and would you be happy to share the solution or mark an answer as best? Else please let us know if you need more help.

We'd love to hear from you.

Thanks!
