Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Data Engineering

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- Databricks

- Data Engineering

- How to update value of a column with MAP data-type...

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to update value of a column with MAP data-type in a delta table using a python dictionary and SQL UPDATE command?

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-25-2023 09:46 PM

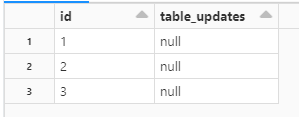

I have a delta table created by:

%sql

CREATE TABLE IF NOT EXISTS dev.bronze.test_map (

id INT,

table_updates MAP<STRING, TIMESTAMP>,

CONSTRAINT test_map_pk PRIMARY KEY(id)

) USING DELTA

LOCATION "abfss://bronze@Table Path"With initial values:

INSERT INTO dev.bronze.test_map

VALUES (1, null),

(2, null),

(3, null);Note that there is no value in column "table_updates".

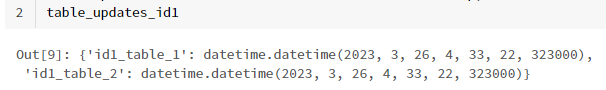

After processing other tables in our platform, I have table updates info as a python dictionary like below:

table_updates_id1 =

{'id1_table_1': datetime.datetime(2023, 3, 26, 4, 33, 22, 323000),

'id1_table_2': datetime.datetime(2023, 3, 26, 4, 33, 22, 323000)}

Now, I want to update the value of column "table_update " where id=1 using SQL UPDATE command (note that I want to update the table not dataframe).

I tried different methods but failed.

1st trial:

spark.sql(f"""

UPDATE dev.bronze.test_map

SET

table_updates = map({table_updates_id1})

WHERE

id = 1

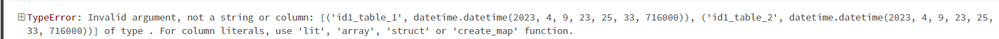

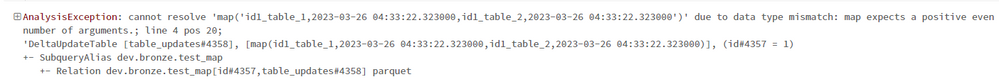

""")Error:

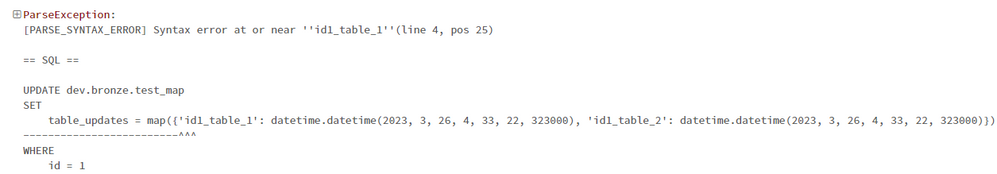

2nd trial:

spark.sql(f"""

UPDATE dev.bronze.test_map

SET

table_updates = map('{','.join([f'{k},{v}' for k,v in table_updates_id1.items()])}')

WHERE

id = 1

""")Error:

Any idea how to solve this issue? Thanks.

Labels:

- Labels:

-

Delta table

-

Map

-

Python Dictionary

-

SQL

3 REPLIES 3

Anonymous

Not applicable

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-02-2023 07:18 AM

@Mohammad Saber :

The error messages you received indicate that the SQL UPDATE command is expecting a column of type map<string,timestamp>, but is instead receiving a string or a non-matching datatype. To resolve this issue, you can convert the Python dictionary to a valid SQL map format using the map_from_entries function in Spark SQL.

Here's an example of how you can use the map_from_entries function to update the table_updates column in your delta table:

from pyspark.sql.functions import map_from_entries

# Convert Python dictionary to list of key-value pairs

table_updates_list = list(table_updates_id1.items())

# Convert list of key-value pairs to SQL map format

table_updates_map = map_from_entries(table_updates_list)

# Use SQL UPDATE command to update delta table

spark.sql(f"""

UPDATE dev.bronze.test_map

SET

table_updates = {table_updates_map}

WHERE

id = 1

""")In this example, the map_from_entries function converts the table_updates_id1 dictionary to a list of key-value pairs, which is then passed to the map_from_entries function to create a SQL map. The resulting SQL map is then used in the SQL UPDATE command to update the table_updates column for rows where id = 1,

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-09-2023 04:32 PM

Anonymous

Not applicable

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-03-2023 11:36 PM

Hi @Mohammad Saber

Thank you for your question! To assist you better, please take a moment to review the answer and let me know if it best fits your needs.

Please help us select the best solution by clicking on "Select As Best" if it does.

Your feedback will help us ensure that we are providing the best possible service to you. Thank you!

Welcome to Databricks Community: Lets learn, network and celebrate together

Join our fast-growing data practitioner and expert community of 80K+ members, ready to discover, help and collaborate together while making meaningful connections.

Click here to register and join today!

Engage in exciting technical discussions, join a group with your peers and meet our Featured Members.

Related Content

- How to do perform deep clone for data migration from one Datalake to another? in Data Engineering

- Loading spark dataframe to Mongo collection isn't allowing nulls in Data Engineering

- Unsupported datatype 'TimestampNTZType' with liquid clustering in Data Engineering

- MongoDB Spark Connector v10.x read error on Databricks 13.x in Data Engineering

- AnalysisException : when attempting to save a spark DataFrame as delta table in Data Engineering