Using "FOR XML PATH" in Spark SQL in sql syntax

03-26-2023 09:50 PM

I'm using Spark 3.2.1 on Databricks (DBR 10.4 LTS), and I'm trying to convert a SQL Server query into one that runs on a Spark cluster using Spark SQL (SQL syntax only). However, Spark SQL does not seem to support FOR XML PATH, and I wonder whether there is an alternative way to write this query so that Spark SQL will accept it. The original SQL Server query looks like this:

DROP TABLE if exists UserCountry;

CREATE TABLE if not exists UserCountry (
    UserID INT,
    Country VARCHAR(5000)
);

INSERT INTO UserCountry
SELECT
    L.UserID AS UserID,
    COALESCE(
        STUFF(
            (SELECT ', ' + LC.Country FROM UserStopCountry LC WHERE L.UserID = LC.UserID FOR XML PATH (''))
        , 1, 2, '')
    , '') AS Country
FROM
    LK_ETLRunUserID L

When I run the query above in Databricks Spark SQL, I get the following error:

ParseException:

mismatched input 'FOR' expecting {')', '.', '[', 'AND', 'BETWEEN', 'CLUSTER', 'DISTRIBUTE', 'DIV', 'EXCEPT', 'GROUP', 'HAVING', 'IN', 'INTERSECT', 'IS', 'LIKE', 'ILIKE', 'LIMIT', NOT, 'OR', 'ORDER', 'QUALIFY', RLIKE, 'MINUS', 'SORT', 'UNION', 'WINDOW', EQ, '<=>', '<>', '!=', '<', LTE, '>', GTE, '+', '-', '*', '/', '%', '&', '|', '||', '^', ':', '::'}(line 6, pos 80)

== SQL ==

INSERT INTO UserCountry

SELECT

L.UserID AS UserID,

COALESCE(

STUFF(

(SELECT ', ' + LC.Country FROM UserStopCountry LC WHERE L.UserID = LC.UserID FOR XML PATH (''))

--------------------------------------------------------------------------------^^^

, 1, 2, '')

, '') AS Country

FROM

LK_ETLRunUserID L

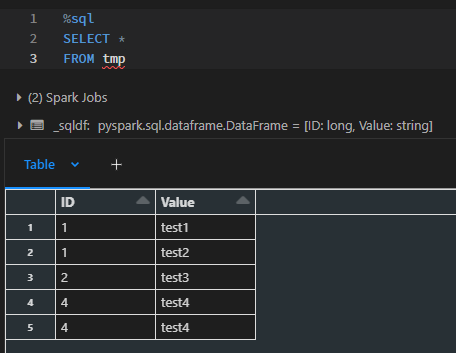

Given that the UserStopCountry table looks like this:

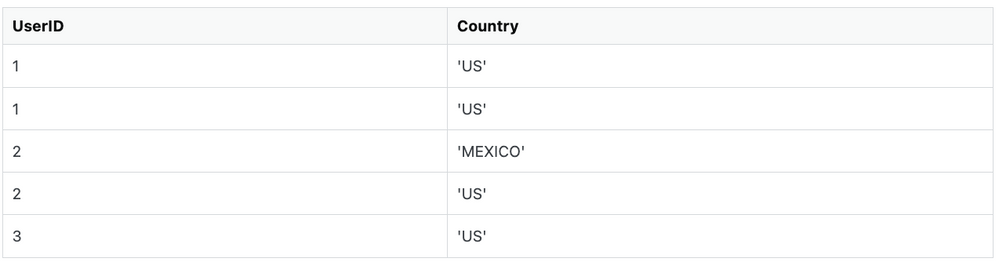

I believe the output will be:

Accepted Solutions

03-30-2023 05:59 AM

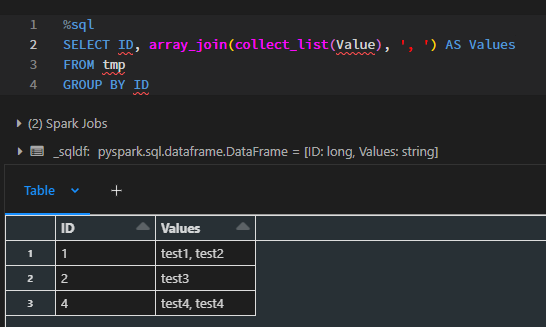

Posting the solution that I ended up using. It replaces the COALESCE(STUFF(... FOR XML PATH (''))) expression with CONCAT_WS over collect_list, plus a join and a GROUP BY:

%sql

DROP TABLE if exists UserCountry;

CREATE TABLE if not exists UserCountry (
    UserID INT,
    Country VARCHAR(5000)
);

INSERT INTO UserCountry
SELECT
    L.UserID AS UserID,
    CONCAT_WS(',', collect_list(LC.Country)) AS Country
FROM LK_ETLRunUserID L
INNER JOIN UserStopCountry LC
    ON L.UserID = LC.UserID
GROUP BY L.UserID
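
For anyone who needs output closer to the original SQL Server query, here is a possible variant, offered as a sketch rather than a tested drop-in. It assumes the same tables and columns as above. It differs from the solution in two ways: the separator is ', ' (comma plus space, as in the STUFF version), and the INNER JOIN becomes a LEFT JOIN, so users with no rows in UserStopCountry still get a row with an empty string, which is what the original COALESCE(..., '') produced.

```sql
-- Sketch only: same tables and columns as the query above.
-- LEFT JOIN keeps every UserID from LK_ETLRunUserID, even with no matching rows.
INSERT INTO UserCountry
SELECT
    L.UserID AS UserID,
    -- collect_list skips NULLs, so an unmatched user yields an empty array,
    -- and concat_ws over an empty array returns '' (like COALESCE(..., '')).
    concat_ws(', ', collect_list(LC.Country)) AS Country
FROM LK_ETLRunUserID L
LEFT JOIN UserStopCountry LC
    ON L.UserID = LC.UserID
GROUP BY L.UserID;
```

Note that collect_list does not guarantee the order of the collected values. If a stable order matters and alphabetical order is acceptable, wrapping it as array_sort(collect_list(LC.Country)) should give one.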

3 REPLIES 3

03-29-2023 02:47 AM

Anonymous

Not applicable

03-30-2023 12:35 AM

Hi @Jay Yang

Thank you for posting your question in our community! We are happy to assist you.

To help us provide you with the most accurate information, could you please take a moment to review the responses and select the one that best answers your question?

This will also help other community members who may have similar questions in the future. Thank you for your participation and let us know if you need any further assistance!
