Unable to Access external location on GCP from databricks on GCP.

04-14-2023 12:29 PM

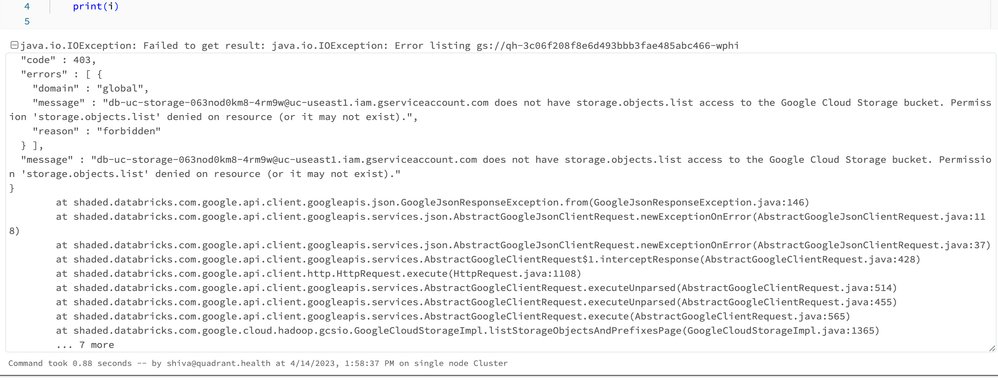

I am trying to add a GCP storage bucket as an external location and to read and write Unity Catalog-enabled Delta tables at that location from Databricks on GCP. I keep getting an error saying that the Databricks instance service principal doesn't have access to the storage location. We have granted access to the service principal and also to the buckets, but it doesn't seem to work. We didn't face this kind of issue on AWS. Any guidance is appreciated.

Labels: GCP

5 REPLIES

Anonymous

04-15-2023 05:44 PM

@shiva charan velichala:

If you have already granted access to the Databricks instance service principal and the GCP Storage buckets, there are a few additional things you can try to resolve this issue:

1. Verify the access control settings: Make sure that you have granted the Databricks instance service principal the necessary IAM roles and permissions to access the GCP Storage buckets. You can check the access control settings by navigating to the IAM & Admin section of the GCP console.

2. Check the network settings: Ensure that the network settings for the GCP Storage buckets are configured correctly. You may need to allow traffic from the Databricks cluster or VPC network to the GCP Storage buckets.

3. Verify the credentials: Double-check that you are using the correct credentials to authenticate with the GCP Storage buckets. If you are using a service account key file, make sure that the key file is valid and has the necessary permissions.

4. Check the region settings: Ensure that the GCP Storage buckets and the Databricks cluster are located in the same region. If they are in different regions, you may need to configure cross-region access.

5. Try a different approach: If the above steps do not work, you may want to try a different approach for accessing the GCP Storage buckets, such as using the gsutil command-line tool or the GCP Storage API directly.
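One way to narrow down which of these checks is failing is to capture the exact error raised when the path is listed from a notebook. The following is a minimal sketch, not a definitive diagnostic: `probe_path` is a hypothetical helper, and it assumes it is called from a Databricks notebook where `dbutils.fs.ls` is available.

```python
from typing import Callable, Optional, Tuple


def probe_path(list_fn: Callable[[str], object], url: str) -> Tuple[bool, Optional[str]]:
    """Try to list `url` with `list_fn` and return (ok, error_message)."""
    try:
        list_fn(url)
        return True, None
    except Exception as exc:
        # Keep only the first line of the message so it is easier to read and share.
        lines = str(exc).splitlines()
        # Fall back to the exception class name when the message is empty.
        return False, lines[0] if lines else type(exc).__name__
```

In a notebook this could be called as `ok, err = probe_path(dbutils.fs.ls, "gs://<your-bucket>/<path>")`, where the bucket and path are placeholders for your own external location. Passing the listing function in as a parameter keeps the helper usable outside Databricks as well.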

Anonymous

04-15-2023 11:18 PM

Hi @shiva charan velichala

Hope everything is going great.

Just wanted to check in to see whether you were able to resolve your issue. If yes, would you be happy to mark an answer as best so that other members can find the solution more quickly? If not, please tell us so we can help you.

Cheers!

04-17-2023 07:06 AM

Adding to @Suteja Kanuri's reply: @shiva charan velichala, can you please recheck whether reader access, as shown below, has been added to your externally created bucket?

On the Permission tab, click + Grant access and assign the service account the following roles:

- Storage Legacy Bucket Reader

- Storage Object Admin
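To confirm that both bindings actually landed on the bucket, the bucket's IAM policy can be exported as JSON (for example with `gcloud storage buckets get-iam-policy gs://BUCKET --format=json`) and checked for the two roles. The following is a minimal sketch under stated assumptions: `roles/storage.legacyBucketReader` and `roles/storage.objectAdmin` are the role IDs for the two role names above, while `missing_roles`, the sample policy, and the service account email are hypothetical and only illustrate the shape of the check.

```python
from typing import Dict, List

# Role IDs for "Storage Legacy Bucket Reader" and "Storage Object Admin".
REQUIRED_ROLES = [
    "roles/storage.legacyBucketReader",
    "roles/storage.objectAdmin",
]


def missing_roles(policy: Dict, service_account_email: str) -> List[str]:
    """Return the required roles that are not bound to the service account."""
    member = f"serviceAccount:{service_account_email}"
    granted = {
        binding["role"]
        for binding in policy.get("bindings", [])
        if member in binding.get("members", [])
    }
    return [role for role in REQUIRED_ROLES if role not in granted]


# Hypothetical policy in the shape of an exported bucket IAM policy.
sample_policy = {
    "bindings": [
        {
            "role": "roles/storage.legacyBucketReader",
            "members": ["serviceAccount:sa@example.iam.gserviceaccount.com"],
        }
    ]
}

print(missing_roles(sample_policy, "sa@example.iam.gserviceaccount.com"))
# prints ['roles/storage.objectAdmin']
```

Note that this only inspects bindings set on the bucket itself. Roles granted at the project level, or bindings with IAM conditions attached, may need to be checked separately.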

04-18-2023 03:16 AM

Have you found a solution?

04-18-2023 07:08 AM

No, I tried approaches 1 to 4 and none of them worked. We want to use dbutils rather than gsutil, as switching would require a lot of code changes. Currently dbutils is not working.
